GSoC/2020/StatusReports/KartikRamesh

DigiKam : Face Management Workflow Improvements

DigiKam is a KDE Desktop Application for Photo Management. Apart from the standard functionality of viewing photos, DigiKam provides many additional features such as Image Tagging, Photo Editing, and Image Metadata viewing/editing. At the heart of DigiKam's face functionality is the FaceEngine: DigiKam can detect faces in Photos, and recognize faces in new photos based on faces the user has already identified. This allows for a personalized experience for the user.

A major breakthrough in the FaceEngine came last year when Thanh Trung Dinh integrated OpenCV's DNN module, bringing significant performance improvements. Igor Antropov implemented many changes to the workflow interface to make the overall experience much more comfortable for the user.

This project is in essence an extension of the work that Igor did last summer. As such, it does not aim to implement a single major feature. Instead, it aims to fix issues in the current workflow and to introduce new features that improve the user experience.

Mentors : Gilles Caulier, Maik Qualmann, Thanh Trung Dinh

Important Links

Project Proposal

DigiKam Face Engine Workflow Improvements

GitLab development branch

Project Goals

This project aims to :

- Provide a Help Box to aid first time users of Facial Recognition.

- Provide a notification about the results of Facial Recognition.

- Order the People Sidebar to show priority tags first.

- Order the Face Item View to display Unconfirmed Faces before Confirmed Faces.

- Provide new “Ignored” Category for Face Tags.

- Automatically Group Results in Unconfirmed Tag.

- Provide Functionality to reject Face Suggestions.

- Automatically add Icons to newly created face tags.

Work Report

Week 1 : May 11 to May 18

NOTE: Due to the current global situation, there's some uncertainty regarding when my college final term exams will be scheduled. After having discussed this with the mentors, we've decided that starting early with the project is the best course of action.

The first issue I intend to tackle is that of Rejecting Face Suggestions in DigiKam. Face Suggestions are a key part of the Facial Recognition process, and allow the User to categorize their album according to People Identities, while training the Facial Recognition algorithm.

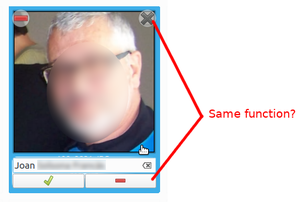

The user interacts with these Face Suggestions by means of the Assign Name Overlay. This overlay appears when hovering over a Face Suggestion, and allows the User to confirm (✅) or reject (⛔) the suggestion.

However, in the present version there's really no way to "Reject" a Face Suggestion. Pressing ⛔ does exactly what pressing ✖ does: it deletes the Face Region from the Database.

This is not ideal. The ⛔ button should technically do the opposite of what ✅ does. It should be the user's way of telling the Facial Recognition Algorithm that it's incorrect.

Present Scenario

- Facial Recognition outputs incorrect suggestion.

- User intuitively presses ⛔, hoping the algorithm realizes the mistake.

- Instead the Face is deleted from the Database.

- To recover the Face, the User re-runs Face Detection and Face Recognition.

- Since the Algorithm was not provided any inputs, it repeats the Incorrect Suggestion.

Desired Scenario

- Facial Recognition outputs incorrect suggestion.

- User intuitively presses ⛔, hoping the algorithm realizes the mistake.

- Face gets moved to "Unknown" Tag.

- If the user re-runs Face Recognition immediately, the mistake will most likely be repeated, since recognition runs again under identical conditions.

- If the user provides some input, for example by confirming the faces in other photos of the same person, the mistake can be corrected.

Implementation

The Confirmation/Rejection happens through an interplay between 3 classes.

- AssignNameWidget : The actual widget which controls the ✅, ⛔ buttons, LineEdit etc.

- AssignNameOverlay : The overlay which controls how it's drawn when user hovers over a face etc. It includes the AssignNameWidget as a member variable.

- DigiKamItemView : Responsible for the main view of DigiKam.

The current workflow is as follows:

- The user presses on the ⛔ button.

- AssignNameWidget sends a reject signal to AssignNameOverlay.

- AssignNameOverlay sends a reject signal to DigiKamItemView.

- DigiKamItemView calls appropriate methods of FaceTagsEditor to delete this face.

The issue is that the Rejection Overlay (✖ Button) is also connected to the same DigiKamItemView::removeFaces(), hence the identical behavior.

I started off by connecting the Reject Signal of AssignNameOverlay to a new function, DigiKamItemView::rejectFaces(). The implementation of this function was settled after a discussion with the developers on the mailing list.

In essence, I'm treating Rejection of Face Suggestion as a change of Tag from the current Unconfirmed tag, to an Unknown Tag. To do this I've implemented FaceTagsEditor::changeTags().
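To make the difference between deleting and rejecting concrete, here is a minimal sketch in plain C++. It assumes a hypothetical `FaceStore` that maps face ids to a single tag; it is not digiKam's FaceTagsEditor API, only an illustration of "rejection is a change of tag, deletion removes the row".

```cpp
#include <cassert>
#include <map>

// Hypothetical, simplified model; not digiKam's FaceTagsEditor API.
enum class FaceTag { Unknown, Unconfirmed, Confirmed };

struct FaceStore
{
    std::map<int, FaceTag> faces;   // face id -> current tag

    // Delete button (and, before this change, the reject button):
    // the face region disappears entirely.
    void removeFace(int faceId)
    {
        faces.erase(faceId);
    }

    // Re-tag an existing face instead of deleting it.
    // Returns false if the face does not exist.
    bool changeTag(int faceId, FaceTag newTag)
    {
        auto it = faces.find(faceId);
        if (it == faces.end())
        {
            return false;
        }
        it->second = newTag;
        return true;
    }

    // New reject behaviour: an Unconfirmed suggestion becomes Unknown.
    bool rejectFace(int faceId)
    {
        auto it = faces.find(faceId);
        if (it == faces.end() || it->second != FaceTag::Unconfirmed)
        {
            return false;
        }
        return changeTag(faceId, FaceTag::Unknown);
    }

    // Number of faces currently carrying the given tag.
    int count(FaceTag tag) const
    {
        int n = 0;
        for (const auto& entry : faces)
        {
            if (entry.second == tag)
            {
                ++n;
            }
        }
        return n;
    }
};
```

Rejecting keeps the face and bumps the Unknown count, while removing shrinks the store, which mirrors the behavior shown in the Results below.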

Results

Here's how Rejection of Face Suggestions now works:

First, I reject the Face Suggestion. Notice how this increments the count of Unknown Faces, and the Face shows up in the Unknown Category. Hence the Face is not deleted!

Next, I demonstrate the deletion of face using the (✖) button. This does not change the Unknown Counter, as the Face has been deleted.

Related Links

Week 2 : May 18 to May 25

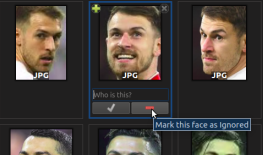

This week I focused on implementing Ignored Tags in DigiKam. This category is one that has been requested by users multiple times.

DigiKam will often detect and then try to recognize faces in photos that the user perhaps doesn't recognize, or doesn't wish to identify. Such faces keep reappearing during Face Detection and Recognition, and the user has to reject the suggestions (using last week's implementation) every time.

In the past, users have solved this problem by creating a placeholder tag and assigning all such faces to it. A better solution is for DigiKam itself to provide such a placeholder tag, so that custom logic and functionality can be implemented for it. I've chosen to call this placeholder tag Ignored.

With this new feature, the user can simply mark unnecessary faces as Ignored. Faces marked as Ignored will not be detected by the Face Detection process in the future, nor will they be considered during the recognition process.

Implementation Details

Logic behind Implementation and Current Status

Currently, marking a Face as Ignored is only allowed for Unknown Faces. This restriction was decided after a conversation on the mailing list. The reasoning is that Confirmed Faces are Faces that the User recognizes. Since they are confirmed, they won't reappear during Face Detection/Recognition runs, so the problem described in the introduction doesn't arise for Confirmed Faces.
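The rule above, together with the promise that Ignored faces are skipped during recognition, can be sketched as follows. This is a plain C++ illustration with hypothetical `Face`, `markIgnored()` and `recognitionCandidates()` names; it also assumes that a recognition run looks at Unknown and Unconfirmed faces, which is a simplification rather than a description of digiKam's actual pipeline.

```cpp
#include <cassert>
#include <vector>

// Hypothetical model of the four face categories.
enum class FaceTag { Unknown, Unconfirmed, Confirmed, Ignored };

struct Face
{
    int     id;
    FaceTag tag;
};

// Ignoring is only offered for Unknown faces: Confirmed faces never
// reappear during detection/recognition, so they don't need it.
bool canIgnore(const Face& face)
{
    return face.tag == FaceTag::Unknown;
}

// Changes the tag to Ignored if allowed; returns whether it did.
bool markIgnored(Face& face)
{
    if (!canIgnore(face))
    {
        return false;
    }
    face.tag = FaceTag::Ignored;
    return true;
}

// Assumption: recognition considers faces the user has not confirmed yet,
// and skips Ignored ones.
std::vector<int> recognitionCandidates(const std::vector<Face>& faces)
{
    std::vector<int> ids;
    for (const Face& face : faces)
    {
        if (face.tag == FaceTag::Unknown || face.tag == FaceTag::Unconfirmed)
        {
            ids.push_back(face.id);
        }
    }
    return ids;
}
```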

It's important to understand the distinction between a Face and a Person. A person represents a unique identity, who may have several faces in the Photograph collection. The current implementation ignores faces and NOT persons.

For example, consider a person Jack with 10 photos of him. If the user marks the 4th face of Jack (one face is created from each photo during Detection) as Ignored, then during subsequent recognition runs this Face will not be re-detected or recognized as any Person. However, the Algorithm may still try to identify any of the other 9 faces.

The Ignored implementation could be made identity-specific by modifying the underlying Face Recognition algorithm to take into account the similarity between the face currently being recognized and the Faces marked as Ignored. If a high similarity is found, the face would not be recognized. However, this is something for the future, and not something I'll implement in the current project.

1. Allow marking of Faces as Ignored

The Unknown Faces overlay has been modified, so that the Reject Button ⛔ corresponds to marking the Face as Ignored.

This assignment does not clash with last week's Reject Suggestion functionality, because Suggestions appear only on Unconfirmed Faces. Before this change, pressing ⛔ on an Unknown Face did nothing.

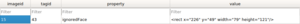

2. Implement Ignored Tags Category in the Database

The next step is to ensure that pressing the ⛔ button on Unknown Faces actually marks the face as Ignored. Instead of opting for a "cheap fix" and just confirming the Face as a Person tag with the name "Ignored", I opted to implement changes at the core level. Separating the Ignored Tag from the standard Confirmed Tag will allow us to implement custom features for Ignored Tags in the future.

After the implementation, the categories now available to the user are:

- Confirmed Tag

- Unconfirmed Tag

- Unknown Tag

- Ignored Tag

Implementing Ignored Tag as a separate new category in the database was relatively easy as I could follow the blueprint laid out by Unconfirmed and Unknown Tags. Ignored Tag resembles these tags in many aspects. Here are some important commits related to this implementation:

One important thing to take care of is that ignoredPersonTagId() will create the Ignored Tag if it doesn't already exist. This behavior was chosen for consistency with the Unconfirmed and Unknown Tags. The problem is that the Ignored Tag should not be created automatically when the User doesn't want it.

Since Ignored Tag is not a feature that will be required by most users, it's appropriate to only display it as and when the User requires it. This will help de-clutter the User Interface.

Hence, unlike the Unconfirmed/Unknown Tags, the Ignored Tag isn't created on startup. Developers also need to make sure that the tag doesn't get created when the user doesn't want it. For example, to implement custom functionality for the Ignored tag in a particular method (say, the sorting implementation of the People Sidebar), one might write:

```cpp
if (tagId == FaceTags::ignoredPersonTagId())
{
    // custom implementation for the Ignored tag
}
```

However, this would create the Ignored Tag if it didn't exist. To solve this, I've provided FaceTags::existsIgnoredPerson(), which may be used to check whether an Ignored Tag has been created by the user.
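The pitfall and its fix can be modelled without digiKam. In this minimal sketch, a hypothetical `TagStore` mimics the described behavior of `ignoredPersonTagId()` (lookup creates the tag) and `existsIgnoredPerson()` (side-effect-free check); the real FaceTags functions are static and backed by the database, which this stand-in does not attempt to reproduce.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>

// Hypothetical stand-in for the tag table; not digiKam's FaceTags API.
class TagStore
{
public:
    // Like ignoredPersonTagId(): looking the tag up creates it if missing.
    int ignoredPersonTagId()
    {
        auto it = m_tags.find("Ignored");
        if (it == m_tags.end())
        {
            it = m_tags.emplace("Ignored", m_nextId++).first;
        }
        return it->second;
    }

    // Like existsIgnoredPerson(): a check with no side effects.
    bool existsIgnoredPerson() const
    {
        return m_tags.count("Ignored") > 0;
    }

    std::size_t size() const { return m_tags.size(); }

private:
    std::map<std::string, int> m_tags;
    int m_nextId = 1;
};

// Guarded comparison: && short-circuits, so the creating lookup only runs
// once the tag is known to exist.
bool isIgnoredTag(TagStore& store, int tagId)
{
    return store.existsIgnoredPerson() && tagId == store.ignoredPersonTagId();
}
```

Ordering the existence check first is what keeps sorting or filtering code from silently adding an Ignored entry to the People Sidebar.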

3. Mark the Face as Ignored

Ignoring a Face can be treated as a Change of Tag, where the initially assigned Unknown Tag of a Face is changed to the Ignored Tag. Hence, I modified the changeTag implementation that I had introduced last week. Here are some commits regarding the same:

With this implementation, Ignored persons are stored in the database as:

4. Listing Ignored Faces

This problem was something I struggled with for quite some time. Even though I was able to mark Faces as Ignored, I couldn't get them to show up in the DigiKam Item View. I suspected this was because the Database did not know how to list this new category, and indeed that was the case. The Faces that show up for a particular tag (when you click on a name) are provided by an ItemLister job, which supplies the ItemView with the ItemInfos for all faces that belong to the tag. All I had to do was add Ignored to the list of properties that the lister considers. Here's the commit for the same.
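Why a missing property makes a whole category invisible can be seen in a small model. This sketch uses hypothetical `FaceRow` records and property labels ("confirmed", "ignored", ...) rather than digiKam's real ImageTagProperties names or ItemLister code.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <vector>

// Hypothetical face row: which image, which person tag, which property.
struct FaceRow
{
    int         imageId;
    int         tagId;
    std::string property;   // illustrative labels, e.g. "unknown", "ignored"
};

// Lists the images shown when a tag is selected. Only rows whose property
// is in `considered` are returned, so a category missing from that set is
// silently left out of the view.
std::vector<int> listImagesForTag(const std::vector<FaceRow>& rows,
                                  int tagId,
                                  const std::set<std::string>& considered)
{
    std::vector<int> images;
    for (const FaceRow& row : rows)
    {
        if (row.tagId == tagId && considered.count(row.property) > 0)
        {
            images.push_back(row.imageId);
        }
    }
    return images;
}
```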

5. Metadata support for Ignored Faces

Gilles suggested that it would be a good idea to store information about Ignored faces in the metadata of images. This way, if the user accidentally deletes the database, the information about Faces can easily be recovered. DigiKam already gives users the option to store information about Face Tags in the metadata; all that needed to be done was to plug in similar functionality for Ignored faces.

Here are my commits for this feature: Include Ignored Faces in MetaData

Week 3 : May 25 to June 1

This week I focused on reordering the People Sidebar. The People Sidebar is an integral part of the Face Workflow, and allows Users to interact with People tags. Currently the People Sidebar is displayed as a plain list, which can make it really difficult for Users to identify which Tags need attention. During Facial Recognition, an important part of the process is to confirm/reject the suggestions that the Algorithm provides, so that all (or most) faces are identified. If the number of People Tags in the Database is large, this can be very troublesome, as the Tags with new suggestions appear mixed in with the tags that have none. The fact that all tags appear in the same font style doesn't help either.

Here's a video depicting the current status:

Notice how I have to search for the tags that require attention. I aim to improve this using 2 methods:

- Display People Tags that need attention at the top of the List.

- Display People Tags that need attention in a different Font Style (or weight).

Implementation Details

1. People Tags of Priority to appear in Bold

To implement this, I added a new ItemRole for the font style to the AlbumModel. This Role returns Bold for Tags that have Unconfirmed Faces, and the default font otherwise. Here are my commits for this feature:

- Add new ItemRole to AlbumModel

- Implementation for fontRoleData, which provides a QFont based on the logic mentioned above.
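The rule behind the font role is a one-liner. In this sketch a hypothetical `FontWeight` enum stands in for the QFont the real role returns; in Qt the equivalent would be a default QFont with `setBold(count > 0)`.

```cpp
#include <cassert>

// Hypothetical stand-in for the QFont returned by the new AlbumModel role.
enum class FontWeight { Normal, Bold };

// Tags that still have Unconfirmed faces are drawn in bold so they stand
// out in the People Sidebar; all others keep the default weight.
FontWeight fontRoleData(int unconfirmedFaceCount)
{
    return unconfirmedFaceCount > 0 ? FontWeight::Bold : FontWeight::Normal;
}
```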

2. People Tags of Priority to appear at the Top

The tags in the People Sidebar are displayed using a TagModel, which is sorted by a TagPropertiesFilterModel. This class inherits from AlbumFilterModel, and I modified the lessThan() method, which is responsible for comparing and sorting the Tags. The new sort rule first sorts Tags by the number of Unconfirmed Faces associated with them, and then alphabetically for all Tags that have no Unconfirmed Faces.
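The sort rule can be written as an ordinary comparator. This minimal sketch uses a hypothetical `PeopleTag` struct instead of model indexes, and assumes that tags with equal non-zero counts are also ordered alphabetically (the text only specifies this for tags with no Unconfirmed Faces). Plain `std::string` comparison is case-sensitive; real Qt code might use `QString::localeAwareCompare()` instead.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Hypothetical, simplified view of a People tag as the sidebar sorts it.
struct PeopleTag
{
    std::string name;
    int         unconfirmedCount;
};

// Tags with more Unconfirmed faces come first; ties (including all tags
// with no Unconfirmed faces) fall back to alphabetical order.
bool lessThan(const PeopleTag& left, const PeopleTag& right)
{
    if (left.unconfirmedCount != right.unconfirmedCount)
    {
        return left.unconfirmedCount > right.unconfirmedCount;
    }
    return left.name < right.name;
}
```

Sorting `{Zoe 0, Bob 2, Alice 0, Carl 5, Dan 2}` with this comparator yields Carl, Bob, Dan, Alice, Zoe.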

Another important part was to enable auto-sorting of the People Sidebar. The sort order depends on the count of Unconfirmed Faces in each tag, which changes as the User interacts with each image (whether confirming or rejecting). Hence, I trigger a re-sort of the view whenever the user modifies a face.

Here are my commits regarding the same:

- Modify AlbumFilterModel::lessThan()

- Enable auto-sort of People Sidebar

Final Results

All said and done, here's how the new People Sidebar looks!

You may notice that the Sidebar gets re-ordered as I confirm Faces.

Even though this feature wasn't as tough to implement as the others, I'm positive that it will really improve the User Experience.

Week 4: June 1 to June 8

This week I implemented Automatic Addition/Removal of Tag Icons. DigiKam provides users with the option to assign Icons to Tags, to allow easy visibility of these tags. For Face Tags in particular, Users may assign a Face associated with that Tag as the Tag Icon. However, in the current implementation, most users don't make use of the Tag Icon assignment. This is because the process involves 2 steps:

- Confirm a face to a tag (which may lead to the creation of a new tag)

- Manually assign the face as the Tag Icon.

This can be annoying for the User if there are a large number of Tags.

These two steps can easily be automated, so that whenever a new Tag is created (as a consequence of Face Confirmation), the Face is automatically assigned as the Tag Icon. The reverse can be automated as well: if the User deletes the last Face associated with a Tag, the Tag Icon should be removed.
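The assignment and removal rules fit in a small bookkeeping struct. This is a minimal sketch with hypothetical names (`TagIcons`, `confirmFace`, `removeFace`); in digiKam the icon itself would be set through AlbumManager's `updateTAlbumIcon`, which this plain C++ model does not call.

```cpp
#include <cassert>
#include <map>
#include <set>

// Hypothetical model: each tag keeps its faces and an optional icon face.
struct TagIcons
{
    std::map<int, std::set<int>> facesOfTag;  // tag id -> face ids
    std::map<int, int>           iconOfTag;   // tag id -> face used as icon

    // Confirming a face: if the tag has no icon yet (a new tag, or an
    // existing tag that never had one), the confirmed face becomes the icon.
    void confirmFace(int tagId, int faceId)
    {
        facesOfTag[tagId].insert(faceId);
        iconOfTag.emplace(tagId, faceId);    // no-op if an icon already exists
    }

    // Removing a face: only when the tag has no faces left is the icon
    // dropped, so the next confirmed face can become the icon.
    void removeFace(int tagId, int faceId)
    {
        auto it = facesOfTag.find(tagId);
        if (it == facesOfTag.end())
        {
            return;
        }
        it->second.erase(faceId);
        if (it->second.empty())
        {
            iconOfTag.erase(tagId);
        }
    }
};
```

As described above, removing a face that is not the last one leaves the icon untouched, even if that face was the icon.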

Implementation Details

1. Automatic Assignment of Tag Icons

People Tags are defined as Tag Albums (TAlbums) in the DigiKam database, so methods for assigning Album Icons (updateTAlbumIcon) already exist in the AlbumManager class.

Confirming and Rejecting faces ultimately happens at the Database level, which led to a problem. Functions of AlbumManager can only be used in files that are compiled with AlbumManager (gui_digikam_obj), and since Database functions are low-level, they aren't compiled with AlbumManager.

To get around this problem, I included Automatic Icon Assignment in higher-level classes (such as FaceUtils), and used QTimers to defer the icon assignment until the lower-level Database functions (sometimes running in different threads) had completed.

Here are my commits regarding the same:

2. Automatic Removal of Tag Icons

If the User accidentally confirms a Face, the wrong face would be automatically assigned as the Tag Icon. Hence it's important to enable Automatic Removal of Tag Icons, so that a different face can later be assigned to the Tag.

If the face that was just deleted (or rejected) was the last face associated with a tag, then the Tag Icon should be removed. Here the role of QTimer becomes even more important, as the number of Faces associated with the Tag should only be checked after all the core Database operations have completed.

Faces can be removed from a Tag in 2 ways.

- Delete the Face Region (using the ✖)

- Remove the Face Tag (using the context menu for each Face)

I patched icon removal for both of these cases in the following commits:

- Automatic Removal when Face is Deleted (FaceUtils)

- Automatic Removal using Context Menu (ItemIconView)

Final Results

All said and done, here's how the feature looks!

In the video I demonstrate 4 kinds of Automatic Icon functionality:

- Icon Assignment to a newly created Tag.

- Icon Assignment to an existing Tag with no Icon.

- Icon Removal when Face Region is deleted.

- Icon Removal when Face Tag is deleted.

Future Improvements

- Since this is a new change, most current DigiKam users will have Tags without Tag Icons. It would be helpful to provide an option that updates the Tag Icons of all Tags that have none.

Week 5, 6: June 8 to June 22

This week I focused on Sorting the Face View so that Unconfirmed Faces show before Confirmed Faces. In the current implementation the results of the Facial Recognition (i.e. Unconfirmed Faces) appear mixed between Confirmed Results. This forces the User to search around for Unconfirmed Faces. For a large database of Images, this can be very unpleasant.

Instead, we can implement a new Sorting order that takes into consideration the number of Unconfirmed Faces in an image. Images with a higher number of Unconfirmed Faces are given preference. This leads to all Unconfirmed Faces appearing together in the view, before all Confirmed Faces.
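As a minimal sketch of this ordering, assuming a hypothetical `ImageEntry` that already carries its Unconfirmed Face count, a stable sort in descending count order puts images with pending suggestions first while keeping the existing order among images with equal counts. Whether digiKam's proxy model sort is stable is not something this sketch asserts.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical image record for the face view.
struct ImageEntry
{
    long long id;
    int       unconfirmedFaceCount;
};

// Images with more Unconfirmed faces sort first; std::stable_sort keeps the
// previous relative order among images with equal counts.
void sortByFaces(std::vector<ImageEntry>& images)
{
    std::stable_sort(images.begin(), images.end(),
                     [](const ImageEntry& a, const ImageEntry& b)
                     {
                         return a.unconfirmedFaceCount > b.unconfirmedFaceCount;
                     });
}
```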

Implementation Details

1. Method to get number of Unconfirmed Faces in an Image

The view that we intend to sort is the ItemIconView, whose Model Indexes correspond to ItemInfos. Hence, we need to be able to compute the number of Unconfirmed Faces given an ItemInfo. To do this, I implemented unconfirmedNameFaceTagsIface (uFTIF) in FaceTagsEditor. The size of the list returned by this method is used in the sorting comparisons.

2. Implement storing of Unconfirmed Face Count in the ItemInfo Cache

Since uFTIF can take in an ItemInfo and return the count of Unconfirmed Faces, my first intuition was to just call this method repeatedly in the sorting algorithm. However, as Maik (one of my mentors) pointed out, this was incredibly inefficient.

uFTIF queries the database to get the properties stored for each Face being considered. Since a sort performs O(N log N) comparisons, and each comparison would trigger database reads for both images being compared, this can be really slow. Considering that the aim of this feature is to improve the User Experience, particularly for large image folders, this is undesirable.

For this very purpose, DigiKam provides the ItemInfo class, which stores information about Items in its "cache" and only performs Database read operations when necessary. Hence, I added new fields to store the count of Unconfirmed Faces for each Image.

Here are my commits regarding the same:

- Addition of Unconfirmed Face Count to ItemInfoData

- Implementation for unconfirmedFaceCount()

- setUnconfirmedFaceCount()
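The effect of the ItemInfo cache on database traffic can be illustrated with a lazy per-image cache. In this sketch the hypothetical `UnconfirmedCountCache` takes a callback standing in for the uFTIF database query; it is not the real ItemInfoData code, only the same idea of reading once and answering later requests from memory.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <utility>

// Hypothetical cache modelled on the ItemInfo idea: the expensive lookup
// runs at most once per image, later reads come from memory.
class UnconfirmedCountCache
{
public:
    explicit UnconfirmedCountCache(std::function<int(long long)> readFromDb)
        : m_readFromDb(std::move(readFromDb))
    {
    }

    // Returns the cached count, querying the "database" only on a miss.
    int unconfirmedFaceCount(long long imageId)
    {
        auto it = m_cache.find(imageId);
        if (it == m_cache.end())
        {
            it = m_cache.emplace(imageId, m_readFromDb(imageId)).first;
        }
        return it->second;
    }

    // Lets callers update the count in place after user actions.
    void setUnconfirmedFaceCount(long long imageId, int count)
    {
        m_cache[imageId] = count;
    }

private:
    std::function<int(long long)> m_readFromDb;
    std::map<long long, int>      m_cache;
};
```

However many comparisons a sort makes, each image costs at most one query in this model.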

3. Dynamic Updates of UnconfirmedFaceCount during Confirm/Reject

Since the sorting is being done on a property that can change when the User interacts with the Faces, it's important for the Confirm/Reject methods to update the count of Unconfirmed Faces in the associated ItemInfo. To do this, I modified their implementations to accept an ItemInfo parameter, which is updated if needed.

Here are my commits regarding the same:

- Implementation to Update Count when Face is Rejected.

- Implementation to Update Count when Face is Confirmed.

In both these cases, the count is decremented. I don't need to explicitly set the count when an Unconfirmed Face is first created, because the count is set when the initial database read is carried out for the ItemInfo. Subsequently, User operations can only decrement the count; counts are incremented only by the Facial Recognition process.
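These update rules can be sketched over a plain map of cached counts. The function names below are hypothetical. The sketch also assumes that an image whose count is not cached yet is left alone on recognition, since the next database read will pick up the correct value; the clamp at zero is a defensive choice, not something the text specifies.

```cpp
#include <cassert>
#include <map>

// Hypothetical in-memory counts, keyed by image id.
using CountMap = std::map<long long, int>;

// Confirming or rejecting an Unconfirmed face: either way the image has one
// fewer Unconfirmed face. Clamped at zero defensively.
void onFaceConfirmedOrRejected(CountMap& counts, long long imageId)
{
    auto it = counts.find(imageId);
    if (it != counts.end() && it->second > 0)
    {
        --it->second;
    }
}

// Only recognition creates Unconfirmed faces, so only it increments.
// Uncached images are skipped: their next database read will be correct.
void onFaceRecognized(CountMap& counts, long long imageId)
{
    auto it = counts.find(imageId);
    if (it != counts.end())
    {
        ++it->second;
    }
}
```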

4. Implement new Sorting Role based on UnconfirmedFaceCount

To allow usage of the new ItemInfo methods, I implemented a new sort Role SortByFaces, which Users can access through the Menu, whenever they wish to Sort Images by Unconfirmed Faces.

Here are my commits regarding the same:

5. Unconfirmed Face Count not being updated [BUG]

If the User runs Facial Recognition while currently in the view of a Tag, then occasionally the Face Results for that Tag might not appear in the correct order. As mentioned in "Analysis of Database Read Operations" below, the Database is Read only when the User switches between tags. This leads to an issue if the User is already in the Tag, and Unconfirmed Results show up.

To solve this issue, I manually increment the Unconfirmed Face Counter of the ItemInfo during the Face Recognition process. Information is still cached, but this ensures that every Unconfirmed Face shows the correct information.

Here's my commit regarding the same:

Results

Here's how the User can sort images in the new implementation.

Analysis of Database Read operations

Here's another video with the terminal output as well, to show how many times the FaceTagsEditor method is called.

As you can see, there are very few calls to FaceTagsEditor, even though my Database contains over 100 images. The read operation is done only when you switch to a new Tag. The User can confirm/reject Faces, which modifies the Unconfirmed Face Count and re-sorts the view dynamically without accessing the Database.

Week 7, 8: June 22 to July 5

This week I created a Help Box for Face Management. This help-box was designed with the Users in mind, to allow an easy understanding of how to use Face Detection and Recognition.

DigiKam's Face Workflow might be slightly complicated for a new user to understand. In overview, a new user needs to perform the following steps to be able to use Face Recognition:

- Run Face Detection on the albums in which the user wants faces to be recognized.

- Provide some identities to the faces detected.

- Run Face Recognition on the detected faces.

The confusion is mostly caused by the distinction between Face Detection and Face Recognition. Detection means knowing that this is a face, Recognition means knowing "who" that face is.

Hence, to explain to new users how to get started with the Workflow, and to show intermediate users some of the new features, I set out to design the help-box.

Implementation Details

1. Triggering a Notification for first-time Users

The Face Workflow is accessible through the People Sidebar. DigiKam already allows triggering in-application notifications. All that needed to be done, was to send a notification if someone opens the People Sidebar for the first time.

Here are my commits regarding the same:

- Display popup if first time visiting the People Sidebar

- firstVisit keeps track of the visit made to People Sidebar

Currently, I'm using a private variable (firstVisit) to keep track of visits to the People Sidebar. However, this is reset each time the app is restarted. I'll make changes so that the information is written to the config files.
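The planned change amounts to reading the flag from persistent settings, with "first visit" as the default, and writing it back once the popup has been shown. In a KDE application this would typically go through a KConfigGroup's `readEntry()`/`writeEntry()`. The sketch below substitutes a hypothetical `std::map`-based `ConfigGroup` and an illustrative key name so it runs standalone.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical stand-in for a persistent config group (KConfigGroup in a
// KDE application). The key name below is illustrative only.
using ConfigGroup = std::map<std::string, bool>;

const std::string kFirstVisitKey = "PeopleSidebarFirstVisit";

// Returns true exactly once per config: the flag is stored, so unlike a
// member variable it survives application restarts.
bool consumeFirstVisit(ConfigGroup& config)
{
    auto it = config.find(kFirstVisitKey);
    bool firstVisit = (it == config.end()) ? true : it->second;  // default: first visit
    if (firstVisit)
    {
        config[kFirstVisitKey] = false;
    }
    return firstVisit;
}
```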

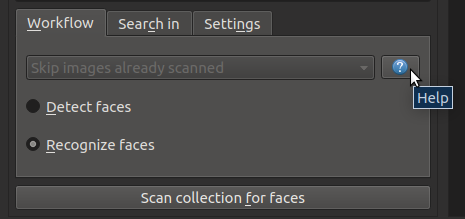

2. Button to trigger the help-box Dialog

The next step was to provide a mechanism for the User to access the help-box. I had two options here:

- Place the button as a Menu Action.

- Provide an actual button in the View.

I went for the second option, as it provides more visibility, and is easy to access. I placed the button in the People Sidebar Face Scan panel.

3. Designing the Help Box

The Help-Box itself is a QDialog with embedded QTabWidget. I've inserted images and GIFs along with the text within the help-box in order to make it easier for the User to understand. Here's how the help box looks!

Week 9, 10: July 6 to July 20

This week I worked on implementing Grouping of Unconfirmed Faces in the Face View. By default, all the Face Suggestions show up mixed in with other Suggestions. It would be much cleaner if there were some grouping order, so that similar Face Suggestions appear near one another.

A couple of weeks back I implemented a Sorting Role to sort by Unconfirmed Faces. However, that only distinguished between Confirmed and Unconfirmed Faces. The problem in this case was to group faces within the Unconfirmed Faces.

My initial idea was to use a similarity threshold between faces, and then implement groups (in the literal sense) where each group contained faces of high level of similarity towards one another. However this would have been both tough to implement and clumsy to look at, because tens of Face Suggestions would get clustered as one.

Maik suggested that instead of literally grouping Faces, I implement a Categorization Order that categorizes faces based on the name suggested to them by the Face Recognition algorithm. This was a neat approach because:

- DigiKam already provides Categorization.

- I could make use of the existing information from Face Recognition.

Faces that are suggested the same name are likely to be similar to one another. Hence, the desired goal is achieved.

Implementation Details

1. Implementation to find Suggested Names for a given Image

Categorization happens on the Model Indexes, which don’t understand what faces are. Models do have information about ItemInfos however. Hence, the first step was to be able to get the Information about Suggested Names in an image, given the image ID.

I implemented FaceTagsEditor::getSuggestedNames for this purpose. This function takes in an Image Id, and returns a Map of Tag Regions to the Suggested Names for each Tag Region.
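The shape of that result can be sketched with plain C++ types. Here a hypothetical `Region` rectangle stands in for TagRegion (ordered lexicographically so it can be a map key), and `FaceRow` stands in for a database row; neither reflects digiKam's real classes or schema.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <tuple>
#include <vector>

// Hypothetical rectangle standing in for a TagRegion; ordered so it can be
// used as a std::map key.
struct Region
{
    int x, y, w, h;

    bool operator<(const Region& other) const
    {
        return std::tie(x, y, w, h) < std::tie(other.x, other.y, other.w, other.h);
    }
};

// Hypothetical face row as read from the database.
struct FaceRow
{
    long long   imageId;
    Region      region;
    bool        unconfirmed;
    std::string suggestedName;
};

// One image may hold several faces, so the result maps each Unconfirmed
// face region to the name suggested for it.
std::map<Region, std::string> getSuggestedNames(const std::vector<FaceRow>& rows,
                                                long long imageId)
{
    std::map<Region, std::string> names;
    for (const FaceRow& row : rows)
    {
        if (row.imageId == imageId && row.unconfirmed)
        {
            names[row.region] = row.suggestedName;
        }
    }
    return names;
}
```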

Here are the commits regarding the same:

2. Caching Suggested Names in ItemInfo

FaceTagsEditor methods involve reading the database, which can be time-consuming. To improve performance, I implemented caching of this information in the ItemInfo class. This has two advantages:

- No repeated calls to the database.

- Can obtain suggested names from ItemInfo class.

One important thing to realize is that an ItemInfo represents the entire Image, whereas a Face represents only a region of the Image. As a result, a single ItemInfo (i.e. Image) may contain several Faces. This is why I’ve chosen to implement a Map to store all (TagRegion, SuggestedName) pairs.

Here are my commits for the same:

- ItemInfo::getSuggestedNames()

- Add FaceSuggestions member to ItemInfo

- Add FaceSuggestionsCached member to ItemInfo

3. Calling ItemInfo Implementation during Recognition

As mentioned in the "Unconfirmed Face Count not being updated" section of Week 5-6, it’s not ideal to rely solely on the Categorization Role to get the information from the database. Hence, during Recognition, as each Face gets recognized, I add it to the ItemInfo map.

Here are my commits for the same:

4. Modifying the Categorization Workflow

Categorization by Faces is slightly more complicated than it appears at first glance. All previous Categorization Roles were consistent in the sense that every categorized Item appeared only once in the View. However, in the case of Faces, a single ItemInfo may appear multiple times, once for each face identified in the image.

When categorization is carried out, comparison calls are made using the ItemInfos. Hence, there needs to be a way to extract information about just a Face, and not the whole Image that it is a part of.

I implemented this in two parts:

4.1 Modify Function Signature for Comparison methods

The View in which the Faces appear is an enhanced version of a QListView. The current implementation takes a Model Index, computes an ItemInfo and then calls the sorting implementations. There is already an implementation to extract information about a Face (if any) from a Model Index. Since each Face appears as a different Model Index, the information extracted will be unique! Hence, I can pass information about the Faces, along with the ItemInfos, to the Categorization Methods.

Here are my commits regarding the same:

- Implementation for compareInfosCategories with new Function Signature.

- Add Face Parameters to ItemAlbumFilterModel as well.

- Add Face Parameters to compare Info Categories, to allow Categorization by Faces.

- Implementation for compareInfosCategories with new Function Signature.
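Why the comparison needs the face as well as the image can be shown with a small model. In this sketch, hypothetical `ImageInfo` and `FaceEntry` types stand in for ItemInfo and a face-view row, and `Region` stands in for TagRegion: two rows can share the same image, and only the face region tells them apart.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <tuple>

// Hypothetical rectangle standing in for a TagRegion.
struct Region
{
    int x, y, w, h;

    bool operator<(const Region& other) const
    {
        return std::tie(x, y, w, h) < std::tie(other.x, other.y, other.w, other.h);
    }
};

// Hypothetical per-image data, as cached on the ItemInfo side.
struct ImageInfo
{
    long long                     id;
    std::map<Region, std::string> suggestedNames;  // face region -> name
};

// One row of the face view: the same ImageInfo can back several rows,
// which differ only in the face region they show.
struct FaceEntry
{
    const ImageInfo* info;
    Region           face;
};

// Resolving a row needs both the image and the face; the image alone would
// be ambiguous when it contains several suggested faces.
std::string suggestedNameFor(const FaceEntry& entry)
{
    auto it = entry.info->suggestedNames.find(entry.face);
    return it == entry.info->suggestedNames.end() ? std::string() : it->second;
}
```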

4.2 Use Information about Faces to get Suggested Names

Now that I have information about a single Face, I can extract the Region that the Face covers in the original Image. This is why I chose to implement the SuggestedNames in ItemInfo as a Map of TagRegion -> Suggested Name. With Suggested Names available for each Face in each Image, I can implement the Categorization Roles themselves!

Here are my commits regarding the same:

- Modify ItemFilterModel::compareCategories to extract Face information from the Model Index.

- Modify ItemFilterModel::data to extract Face Information from ModelIndex.

5. Implementing New Categorization Role

Item Categorization Categories are defined in ItemSortSettings. I first declared a new role, “CategoryByFaces”, in the ItemSortSettings::CategorizationMode enum. The implementation idea for this role was simple: extract the Suggested Name for the given Face, and compare it alphabetically with that of the other Face. Note that this comparison decides the order in which the categories appear, not the categorization itself.

Here are my commits regarding the same:

- Add new Categorization Role for Categorizing by Faces

- Implementation to Compare Face Categories Alphabetically
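The category comparison can be sketched as a three-way compare on suggested names, in the style of a compareCategories() hook returning negative, zero or positive. The `FaceRow` type and function name are hypothetical, and plain `std::string::compare` is byte-wise rather than locale-aware.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Hypothetical face-view row reduced to the suggested name it carries.
struct FaceRow
{
    int         id;
    std::string suggestedName;
};

// Three-way comparison: negative, zero or positive. Rows with equal names
// share a category; this only decides the order in which categories appear.
int compareFaceCategories(const FaceRow& left, const FaceRow& right)
{
    return left.suggestedName.compare(right.suggestedName);
}
```

Sorting rows with `compareFaceCategories(a, b) < 0` as the ordering places all faces suggested as "Alice" together, ahead of those suggested as "Bob".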

About Me

Hello there, my name is Kartik and I'm a Sophomore in Computer Science at Birla Institute of Technology and Science, Pilani. I'm passionate about Open Source and Music.