GSoC/2020/StatusReports/NghiaDuong: Difference between revisions

| Line 128: | Line 128: | ||

|} | |} | ||

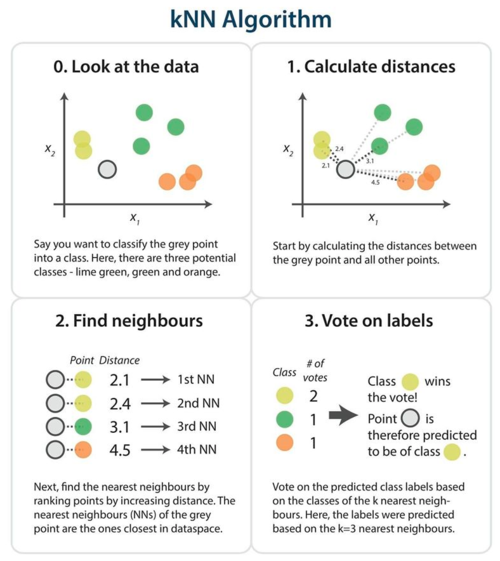

After several tests, the Machine K-Nearest neighbors method | After several tests, the Machine K-Nearest neighbors method appears to have superior performance than other methods. However, this method is based on statistics and therefore can have some biases. Therefore I want to try out the traditional K-Nearest neighbors with vote counting mechanism. With the help of KD-Tree, a binary search tree that can partition high dimensional vectors, the search for K-Nearest nodes to a given position becomes more efficient, with the time complexity of '''O(log(n))'''. | ||

======'''- Performances comparison'''====== | ======'''- Performances comparison'''====== | ||

Revision as of 22:56, 28 June 2020

Digikam : DNN based Faces Recognition Improvements

digiKam is a well-known open-source photo management application. With considerable effort, its developers have implemented face detection and facial recognition features in a module called the faces engine. This module implements different methods to scan faces and then label them based on the pre-tagged photos given by users.

Since last year, as a result of Thanh Trung Dinh's project during GSoC 2019, digiKam's faces engine has adopted new CNN-based face processing methods. These methods have been shown to perform better than the traditional image processing methods implemented in digiKam. However, the current implementation of the faces engine still has some limitations. The main goals of this project are therefore to continue Thanh Trung Dinh's work and to improve the performance of digiKam's faces engine.

Mentors : Gilles Caulier, Maik Qualmann, Thanh Trung Dinh

Important Links

Project Proposal

Digikam DNN based Faces Recognition Improvements

GitLab development branch

gsoc20-facesengine-recognition

Contacts

Email: [email protected]

Github: MinhNghiaD

LinkedIn: https://www.linkedin.com/in/nghia-duong-2b5bbb15a/

Project Goals

The current goals of this project are to :

- Improve the accuracy of faces classifier

- Optimize the use of memory of faces engine

- Decrease storage space of faces engine

- Improve processing speed

- Re-structure faces engine architecture

- Port faces engines to Plugin architecture

Project Report

Community Bonding period (May 1 to May 31)

During this period, my main objective was to familiarize myself with the work of Thanh Trung Dinh, in order to evaluate the current implementation. After going through Thanh Trung Dinh's code and final report, I have a better understanding of the current implementation of digiKam's faces engine. Generally, the architecture of the DNN faces engine can be divided into 3 main parts:

- Face detector is in charge of face detection. This module lets users choose between 2 prominent face detection algorithms: YOLOv3 and SSD-MobileNet. The detected faces are cropped and then passed to the Face recognizer.

- Face recognizer is in charge of the face recognition process. It receives the cropped face from the Face detector, applies face alignment, and then passes the preprocessed face image through the neural network. Since GSoC 2019, the CNN algorithm used by digiKam is OpenFacev1, an implementation of the [FaceNet paper].

- Face database is in charge of the database operations that store the functional data of digiKam's faces engine. It is the link between the faces engine and the digiKam application.

According to the bug reports on digiKam's faces engine, the implementation of this module still has some problems that need to be addressed. The main problem, reported in several bugs, is that the performance of the faces engine decreases as the data set grows. Therefore, for the rest of this period, I aimed to re-evaluate the exact state of the different components of the faces engine. Because the 3 parts of the DNN version of the faces engine are fully integrated into digiKam, it is difficult to evaluate the performance of each part without introducing additional biases. In order to benchmark each component correctly, I created replicas of digiKam's Face detector and Face recognizer as stand-alone modules that apply the previously implemented DNN algorithms, without any link to the digiKam database or the digiKam core library. After that, I programmed the first sketch of 3 unit tests for the Face detector and Face recognizer.

My plan for the first 2 weeks of the coding period is to:

- Complete the unit tests for Face detector and Face recognizer.

- Search for the problems that cause the decrease of performance.

- Try out different methods for face classification.

- Compare the performances of these different techniques.

Coding period : Phase one (June 1 to June 29)

In this phase, my work mostly concentrated on building the unit tests and applying different classification methods to the Face recognizer. These tests revealed the points that need to be improved in order to build a better and faster face recognition module.

June 1 to June 14 (Week 1 - 2) - Report of current state of digiKam's faces engine

DONE

- Unit test with GUI for Face Detector (YOLOv3 and SSD-MobileNet).

- Comparison of performance of YOLOv3 and SSD-MobileNet implementation in digiKam's faces engine.

- Unit test with GUI for Face Recognizer (OpenFacev1).

- Automatic unit test on large datasets to evaluate the performance of Face Recognizer.

- Evaluation of current recognition methods used by the faces engine.

TODO

- Add and test new recognition methods on Face recognizer in order to improve accuracy and processing speed.

- Compare the performances of these different methods.

- Debug detection errors of SSD-MobileNet.

- Speed up YOLOv3 processing time by using calculation distribution.

During these first weeks of GSoC 2020, I finalized my unit tests for 2 essential components of the faces engine: the Face detector and the Face recognizer. The purpose of these tests is to understand the inner workings of the faces engine and the reasons for the degradation over time described in the bug reports.

- To verify the functionalities of Face detector, I built a test with GUI, in order to show the image matrices after each step of the face detection process. In this way, we can have a sense of what it is doing and then evaluate its performance.

- To verify the functionalities of the Face recognizer, I built 2 tests. The first is a test with GUI that reproduces the face suggestion process. The second receives a dataset as an argument, splits it into a training set and a test set, and then passes them to the Face recognizer in order to evaluate its performance.

- Face Detector status

Currently, digiKam's faces engine employs 2 different CNN algorithms for face detection: YOLOv3 and SSD-MobileNet. The implementations of these 2 algorithms in digiKam perform differently. For each image, YOLOv3 scans 10600 bounding boxes and therefore gives very high accuracy, but it takes about 400 ms per image on average. On the other hand, SSD-MobileNet scans only 20 boxes in about 20 ms per image and gives a lower accuracy. The default method used by the faces engine is SSD-MobileNet, because it is lightweight and fast.

[Figure: face detection on the same image with YOLOv3 (left) and SSD-MobileNet (right)]

Although the implementation of SSD-MobileNet performs rather well on average use cases, where all faces are clear and can be easily detected, it still has some limitations that need to be addressed. In the example above, I performed face detection using YOLOv3 and SSD-MobileNet on the same image. In the figure on the left-hand side, the Face detector powered by YOLOv3 detects most of the faces in the image. However, in the figure on the right-hand side, the Face detector powered by SSD-MobileNet does not detect any face. Unfortunately, this problem with SSD-MobileNet occurs repeatedly across several images, usually in cases where the faces are small or the images are too dark. The cause of this low accuracy could be an error in the implementation of SSD-MobileNet in digiKam or an error in the neural network files. Either way, this problem needs to be corrected in order to improve the performance of the Face detector.

However, the main scope of this project is to improve the Face Recognizer of digiKam. Therefore, the work on the Face detector will be postponed to the end of the project.

- Face Recognizer status

After being detected by the Face detector, the face parts of the images are cropped and passed to the Face Recognizer. Here, the face image passes through several steps to be recognized. First, the face image is transformed into a cv::Mat and then scaled to a fixed ratio defined by the Face recognizer. After that, the face is aligned based on the position of the eyes, nose, and lips, before being passed through the neural network to output a 128-dimensional vector called a face embedding. Finally, the output face embedding is compared with the registered faces to predict the corresponding identity.

The current classification method used by digiKam is based on the cosine distance of face embeddings. The greater the cosine of the angle between 2 vectors, the more similar the 2 faces are. In order to predict the label of a face, digiKam's Face recognizer calculates the mean cosine distance between the face and the pre-registered face embeddings of each label group. The Face recognizer then picks the label with the highest mean distance as its prediction, provided that this mean is greater than a certain threshold.
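The mean cosine rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not digiKam's actual code: the function names, the `registered` list of (label, embedding) pairs, and the 2-dimensional toy vectors are all hypothetical (real embeddings are 128-dimensional). The value the report calls "cosine distance" is computed here as the cosine of the angle between the vectors.

```python
import math
from collections import defaultdict


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def predict_mean_cosine(embedding, registered, threshold=0.7):
    """Return the label with the highest mean cosine, or None if no label
    exceeds the threshold. `registered` is a hypothetical list of
    (label, embedding) pairs."""
    per_label = defaultdict(list)
    for label, known in registered:
        per_label[label].append(cosine_similarity(embedding, known))

    best_label, best_mean = None, threshold
    for label, sims in per_label.items():
        mean = sum(sims) / len(sims)
        if mean > best_mean:
            best_label, best_mean = label, mean
    return best_label


# Toy data: two registered faces for "alice", one for "bob".
registered = [
    ("alice", [1.0, 0.0]),
    ("alice", [0.9, 0.1]),
    ("bob", [0.0, 1.0]),
]
print(predict_mean_cosine([1.0, 0.05], registered))   # alice
print(predict_mean_cosine([-1.0, -1.0], registered))  # None (below threshold)
```

Note that every prediction compares the probe against every registered embedding, which is why the cost of this rule grows with the size of the registered data.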

To examine the result of each step, I implemented a unit test with GUI as an extension of the unit test for the Face detector. This test displays the transformation of face images through the recognition process, and it includes a simple control panel for testers to perform a simple recognition work-flow. This test gives an intuition of what the Recognizer is doing and therefore makes debugging easier.

In addition to this test, I implemented a performance test for the Face recognizer. This performance test receives a face dataset and a train/test ratio as inputs. The test splits the dataset according to the split ratio: the training set is registered with its labels by the Face recognizer, and the test set is used to verify the facial recognition process. The splitting step is completely random to ensure the integrity of the test. With the help of this test, I can measure the actual performance (accuracy and speed) of digiKam's Face recognizer.

At first, I applied the performance test to the Yalefaces dataset, which contains 166 pre-labeled face images. On this small dataset, the mean cosine distance method performs rather well: 88.8889 % accuracy at a speed of 75.3333 ms/face, with a threshold of 0.7, on a total of 121 training faces and 45 test faces. However, when I performed the same test on the Extended Yale B dataset, which contains 16380 pre-labeled face images, the accuracy shrank to 0 %. To be specific, the mean cosine distance method reaches 0 % accuracy at a speed of 626.485 ms/face, with a threshold of 0.7, on a total of 11469 training faces and 4911 test faces. The main reason for this poor accuracy is that the method fails to recognize faces because the mean cosine distance is too small. This is the same problem that appeared in several bug reports.

This degradation of the Face recognizer is due to the poor adaptability of the mean cosine distance method to a big dataset. Because of the nature of this method, as the data related to an entity grows, the dispersion of the data makes the mean distance smaller. The calculation of the mean cosine distance is also exhaustive: its cost increases linearly with the size of the data. Therefore, the more data it gets, the poorer its performance becomes. Furthermore, due to the mathematical nature of the cosine function, the partitioning capacity of this method is limited. In general, data classification means finding a way to partition data into different groups. Because cos(x) : [0°, 180°] --> [-1, 1] is injective, the cosine value depends only on the angle between 2 vectors, so all vectors within a cone of about 30° are partitioned into the same group, even with a high threshold of 0.86. Therefore, the more labels we have, the more collisions occur between these data partitions.
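As a rough sketch of the split-and-evaluate procedure described above, the following Python snippet shuffles a labeled dataset, cuts it at the given ratio, and measures accuracy. The helper names (`split_dataset`, `accuracy`), the `seed` parameter, and the toy sample list are assumptions made for illustration; the actual test is part of digiKam's test suite and may split the data differently.

```python
import random


def split_dataset(samples, train_ratio, seed=None):
    """Shuffle (label, image) samples and split them by train_ratio."""
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]


def accuracy(predict, test_set):
    """Fraction of test samples whose predicted label matches the true one."""
    correct = sum(1 for label, image in test_set if predict(image) == label)
    return correct / len(test_set)


# Toy dataset: 10 hypothetical (label, image name) pairs.
samples = [("person%d" % (i % 3), "img%d.png" % i) for i in range(10)]
train, test = split_dataset(samples, 0.7, seed=42)
print(len(train), len(test))  # 7 3
```

Passing a fixed `seed` makes a run reproducible, while leaving it unset gives a different random split on every run.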
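The two effects described above can be checked numerically. The sketch below first converts a cosine threshold into the half-angle of the cone it accepts, and then uses a toy 2-D model (an assumption made purely for illustration, since real embeddings have 128 dimensions) to show how the mean cosine drops as the registered vectors of one label spread out. Both function names are hypothetical.

```python
import math


def acceptance_half_angle(threshold):
    """Largest angle (in degrees) whose cosine still reaches the threshold."""
    return math.degrees(math.acos(threshold))


def mean_similarity(spread_deg, count=101):
    """Mean cosine between a probe at 0 degrees and `count` unit vectors
    evenly spread over [-spread_deg, +spread_deg] (toy 2-D model)."""
    step = 2 * spread_deg / (count - 1)
    angles = [-spread_deg + i * step for i in range(count)]
    return sum(math.cos(math.radians(a)) for a in angles) / count


for threshold in (0.7, 0.86):
    print("threshold %.2f accepts angles up to %.1f degrees"
          % (threshold, acceptance_half_angle(threshold)))

for spread in (10, 60, 90):
    print("spread of +/-%d degrees gives a mean cosine of %.3f"
          % (spread, mean_similarity(spread)))
```

In this toy model the mean cosine falls below the 0.7 threshold once the registered vectors are spread over ±90°, even though the probe sits exactly in the middle of its own group.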

June 15 to June 29 (Week 3 - 4) - 84% accuracy and 104.804 ms/face speed on the Extended Yale B dataset

DONE

- Apply Machine Learning classifiers on Face recognizer.

- Accuracy improvement from 0% to 84% on the Extended Yale B dataset.

- Processing speed improvement from 671.449 ms/face to 104.804 ms/face on the Extended Yale B dataset.

- High dimensional data partitioning with KD-Tree.

- Implementation of online learning in Face recognizer.

- First sketch of database model for Face recognizer.

TODO

- Fully integrate new improvements to the faces engine.

- Reorganize the databases of the faces engine.

- Apply map-reduce to distribute the calculations on multiple threads.

- Port faces engine to plug-in architecture

- Test UMAP Dimensionality reduction algorithm to have an insight into the global structure of face embedding.

As stated in the previous section, the mean cosine distance is not a good fit for a face classifier. Therefore, during these 2 weeks, I focused on implementing new classification methods and comparing their performances.

- New face classifiers

OpenFace trained their convolutional neural network by optimizing the triplet loss of Euclidean distances between face embeddings. This optimization ensures that face embeddings belonging to the same person are close to each other, while embeddings of 2 different persons are far apart. Because of this property, I tried several classification methods that can distinguish vector representations. Here is the list of classification methods that I have tried during this period:

- Closest Cosine distance,

- Closest Euclidean distance,

- Support vector machine with linear kernel,

- Machine Learning K-Nearest neighbors,

- Traditional K-Nearest neighbors with KD-Tree.

After several tests, the Machine Learning K-Nearest neighbors method appears to perform better than the other methods. However, this method is based on statistics and can therefore have some biases. For this reason, I want to try out the traditional K-Nearest neighbors with a vote counting mechanism. With the help of a KD-Tree, a binary search tree that can partition high dimensional vectors, the search for the K nearest nodes to a given position becomes more efficient, with an average time complexity of O(log(n)).
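The idea of K-Nearest neighbors with vote counting on a KD-Tree can be sketched as follows. This is a minimal pure-Python illustration, not the implementation used in digiKam: the class and function names are hypothetical, the points are 2-D toy vectors instead of 128-dimensional embeddings, and the tree is built once from a static list rather than updated online.

```python
import heapq
from collections import Counter


class KDNode:
    def __init__(self, point, label, axis):
        self.point, self.label, self.axis = point, label, axis
        self.left = self.right = None


def build(items, depth=0):
    """Build a KD-Tree from (point, label) pairs, cycling the split axis."""
    if not items:
        return None
    axis = depth % len(items[0][0])
    items = sorted(items, key=lambda item: item[0][axis])
    mid = len(items) // 2
    node = KDNode(items[mid][0], items[mid][1], axis)
    node.left = build(items[:mid], depth + 1)
    node.right = build(items[mid + 1:], depth + 1)
    return node


def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def k_nearest(node, target, k, heap=None):
    """Collect the k nearest nodes in a max-heap of (-distance, id, label)."""
    if heap is None:
        heap = []
    if node is None:
        return heap
    d = sq_dist(node.point, target)
    entry = (-d, id(node), node.label)
    if len(heap) < k:
        heapq.heappush(heap, entry)
    elif d < -heap[0][0]:
        heapq.heapreplace(heap, entry)
    diff = target[node.axis] - node.point[node.axis]
    near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
    k_nearest(near, target, k, heap)
    # Visit the far side only if the splitting plane is closer than the
    # current k-th best distance, or if fewer than k results were found.
    if len(heap) < k or diff * diff < -heap[0][0]:
        k_nearest(far, target, k, heap)
    return heap


def predict(tree, target, k=3):
    """Label chosen by majority vote among the k nearest neighbors."""
    votes = Counter(label for _, _, label in k_nearest(tree, target, k))
    return votes.most_common(1)[0][0]


train = [
    ((0.0, 0.0), "alice"), ((0.1, 0.2), "alice"), ((0.2, 0.1), "alice"),
    ((1.0, 1.0), "bob"), ((0.9, 1.1), "bob"), ((1.1, 0.9), "bob"),
]
tree = build(train)
print(predict(tree, (0.15, 0.1)))  # alice
print(predict(tree, (1.0, 0.95)))  # bob
```

The pruning test on the far branch is what lets the search skip large parts of the tree; without it, the search would degrade to an exhaustive scan of every registered embedding.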

- Performances comparison

After integrating the new face classification methods into digiKam's Face recognizer, I ran the performance tests on the Extended Yale B dataset. The results are shown in the table below:

| | Closest Cosine distance | Closest Euclidean distance | SVM | Machine learning KNN | KD-Tree KNN |
|---|---|---|---|---|---|
| Accuracy | Example | Example | Example | Example | Example |
| Speed | Example | Example | Example | Example | Example |