KDE PIM/Meetings/Osnabrueck 5

The annual KDE PIM Meeting Osnabrück 5 took place at the Intevation offices in Osnabrück from Friday, January 12th 2007, to Monday, January 15th.

Agenda

Friday

9:00 Arrival

17:00 Kickoff Meeting: Status KDE PIM and Akonadi, Perspectives for KDE 4 and beyond

18:15 Status of Akonadi

20:30 Dinner

Saturday

9:00 Hacking

10:00 Community Relations, Website

14:00 Kolab Enterprise Branch

17:00 Presentation: Akonadi

17:45 Presentation: Mailody

18:15 Presentation: Proko

18:30 KDE4 Status Discussion

20:00 Dinner

Sunday

9:00 Hacking

10:30 Maintenance

11:15 Introduction to Qt Model/View Programming

13:00 Lunch

15:00 Further Introduction to Qt Model/View Programming

19:00 Dinner

Monday

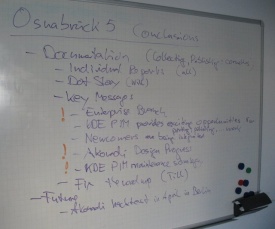

10:00 Round-up meeting: Results and conclusions

11:00 Departure

Meeting Notes

Akonadi Status Before the Meeting

Server

More or less implemented features:

- storage of items consisting of identifiers, mimetype, parent collection and an arbitrary (binary) data block

- IMAP-like data access interface supporting retrieval and modifications of both collections and PIM items

- D-Bus notification signals for changes to items or collections

- auto-generated low-level database access code

- uses transactions for all database write operations
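As a rough illustration of the storage model listed above (identifier, mimetype, parent collection and a binary data block, with every write wrapped in a transaction), here is a minimal sketch. It uses Python's built-in sqlite3 module only because it is easy to run; the table layout, the column names and the helpers `open_store` and `add_item` are assumptions made for this example and are not the actual Akonadi schema or code.

```python
import sqlite3

def open_store():
    # In-memory database, just for the sketch.
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE items ("
        " id INTEGER PRIMARY KEY,"
        " mimetype TEXT NOT NULL,"
        " collection_id INTEGER NOT NULL,"
        " data BLOB)"
    )
    return conn

def add_item(conn, mimetype, collection_id, data):
    # 'with conn' commits on success and rolls back if an exception is raised,
    # so the write is all-or-nothing.
    with conn:
        cur = conn.execute(
            "INSERT INTO items (mimetype, collection_id, data) VALUES (?, ?, ?)",
            (mimetype, collection_id, data),
        )
    return cur.lastrowid

conn = open_store()
item_id = add_item(conn, "text/calendar", 1, b"BEGIN:VCALENDAR")
```

The data column is deliberately opaque to the storage: nothing in this sketch looks inside the binary block.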

Problems and missing features:

- cache management, expiring, filtering

- sqlite backend can't handle massive concurrent access; MySQL/Embedded looks like an interesting option but doesn't work either (might be a QSQL driver bug)

- communication with resources and search providers only works because of a huge hack which makes D-Bus calls from threads possible

Client Library

More or less implemented features:

- classes to represent collections and PIM items

- job classes to retrieve and modify collections and PIM items

- a self-updating collection tree model

- a self-updating flat pim item model

- a notification filter

Resources

We have a simple read-only iCal file resource. For more complex resources we probably need some more work on the resource API. Resources also have the same problem with async IPC complexity as search providers (see below).

Search Provider

This is currently the biggest missing piece, and there are still open questions about the design.

Search providers are supposed to provide type specific functionality, needed for the following features:

- initial creation of virtual folders (searching)

- updating existing virtual folders (matching a single item against all existing queries)

- generate type specific FETCH responses (eg. the mail header listing)
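The second feature, matching a single item against all existing queries, could look roughly like the following sketch. Representing queries as plain Python predicates and items as dictionaries is an assumption made purely for illustration; the function name `update_virtual_folders` is hypothetical and nothing here reflects a decided search provider API.

```python
def update_virtual_folders(item, queries, folders):
    """Re-evaluate one changed item against every stored query.

    queries: folder name -> predicate taking an item dict
    folders: folder name -> set of item ids currently in that virtual folder
    """
    for name, predicate in queries.items():
        members = folders.setdefault(name, set())
        if predicate(item):
            members.add(item["id"])
        else:
            # The item may have matched before the change; drop it if so.
            members.discard(item["id"])
    return folders

queries = {
    "calendar": lambda item: item["mimetype"] == "text/calendar",
    "from-till": lambda item: item.get("from") == "till",
}
folders = {}
update_virtual_folders({"id": 7, "mimetype": "message/rfc822", "from": "till"}, queries, folders)
```

The point of the sketch is only that an update touches every query once per changed item, which is why the cost of evaluating a single query matters.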

There are a couple of things making this complex:

- some resources (eg. IMAP, LDAP) provide search capabilities themselves but not necessarily for all fields/operators

- some fields/operators are type specific, some are not; some are handled by the storage itself (eg. mimetype, collection), some by generic external tools (eg. fulltext search with strigi), some by the type specific search providers

- very complex fields (eg. event recurrence)

Since we probably don't want to write our own query evaluation engine, currently the best option seems to be to use Nepomuk for that. It is supposed to store the meta data of everything on the desktop, so putting PIM data in there is needed anyway. Its query language seems to have enough expressive power for our needs, but the performance still has to be evaluated. The Nepomuk developers are very interested in cooperating with us here.

There are also technical problems. First experiments at aKademy showed problems with async IPC complexity: the current solution of delayed D-Bus replies and multi-threading needs either to be simplified or completely hidden behind the search provider API.

Development Tools

There are three applications to access the storage backend:

- the akonadi command line client, but it is still missing several commands (some of them are available in the libakonadi tests)

- akonadiconsole contains a folder tree and complete log of network and D-Bus communication

- KMail via IMAP

Of course there is also netcat localhost 4444 and raw IMAP ;-)

General / Project Management

Given the current progress and our ambitious goals it seems unlikely that we will finish Akonadi and port all applications in time for KDE 4.0 (whenever that will be), especially when considering that Akonadi development also draws badly needed resources from KDE PIM development.

Therefore we might want to concentrate on getting only a subset of Akonadi ready and used for 4.0 (eg. just the storage, no searching), keeping KDE PIM features on the 3.5 level where possible and implementing additional capabilities for the following 4.x releases.

Akonadi Results

Server

- mysql/embedded problem no longer reproducible, backup solution with per-application network-less mysqld exists but is not implemented yet

- check licensing implications of mysql

- Simon promised to fix D-Bus calls from threads (which is needed to use strigi/nepomuk as well)

Change notifications / update loops

- solved and implemented for write operations (very important for resources)

- not implemented for FETCH, solution is complex: extension of the delivery queue, notification blacklists, session id vs. resource id in notification blacklists
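To make the update loop problem concrete: a resource that writes an item must not be notified about its own write, otherwise it would react to the notification with another write. A minimal sketch of that idea follows, assuming listeners are keyed by a session id; the class `ChangeNotifier` and its methods are hypothetical names for this example, not the implemented mechanism.

```python
class ChangeNotifier:
    def __init__(self):
        # session id -> callback taking the changed item id
        self._listeners = {}

    def subscribe(self, session_id, callback):
        self._listeners[session_id] = callback

    def item_changed(self, item_id, origin_session):
        # Notify everyone except the session that caused the change,
        # so a writer never reacts to its own write.
        for session_id, callback in self._listeners.items():
            if session_id == origin_session:
                continue
            callback(item_id)

received = []
notifier = ChangeNotifier()
notifier.subscribe("resource-1", lambda item_id: received.append(("resource-1", item_id)))
notifier.subscribe("client-1", lambda item_id: received.append(("client-1", item_id)))
notifier.item_changed(42, origin_session="resource-1")
```

Whether the key should be a session id or a resource id is exactly the open question noted above; the sketch simply picks the session id.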

Synchronous server operations for "online" resources

- needed for FETCH/LIST (really??), implemented except for notification loop prevention

- two options: do it for all other operations as well vs. do all other operations async

- a complete sync implementation would be too complex (same problems as with FETCH for every command, resource D-Bus API would be the same as the IMAP command API); consider the online case as a special case of an offline resource instead (ie. a cached resource that syncs immediately)

Error and Status Notification

- identify jobs via a session identifier and a job identifier

- send status and error notification via D-Bus signals

- has problems similar to change notifications, depends on sync/async semantics of write operations

PIM Item Data Structure

- Use a strictly increasing revision number for optimistic locking

- Split data into a tree-like structure where appropriate (think IMAP BODYSTRUCTURE)

- Add extra fields for special FETCH responses (think ENVELOPE)

- No type-specific code in the backend required for partial data retrieval, all item parsing happens in the resource

- XML or MIME based format for communication between storage and resources/agents

- Store bodyparts separately in the database, the raw data optionally in the filesystem (requires extra work for transaction safety)
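The first point, a strictly increasing revision number for optimistic locking, can be sketched as follows. This is an in-memory illustration only; `ItemStore`, `RevisionConflict` and the method names are assumptions for the example and say nothing about how the server would actually store revisions.

```python
class RevisionConflict(Exception):
    """Raised when a writer's copy of an item is out of date."""

class ItemStore:
    def __init__(self):
        self._items = {}  # item id -> (revision, data)

    def create(self, item_id, data):
        self._items[item_id] = (0, data)
        return 0

    def fetch(self, item_id):
        return self._items[item_id]

    def modify(self, item_id, expected_revision, data):
        revision, _ = self._items[item_id]
        if revision != expected_revision:
            # Someone else changed the item since this writer fetched it.
            raise RevisionConflict(
                f"item {item_id}: expected revision {expected_revision}, found {revision}"
            )
        self._items[item_id] = (revision + 1, data)
        return revision + 1

store = ItemStore()
store.create(1, b"first")
rev, _ = store.fetch(1)
new_rev = store.modify(1, rev, b"second")
```

No lock is held between fetch and modify; a writer with a stale revision simply gets a conflict and has to fetch again.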

Search

- Akonadi does not search itself

- we feed all the data we get into strigi/nepomuk, eg. by writing stand-alone feeder apps (formerly known as search providers) or by writing/extending the corresponding strigi/nepomuk plugin

- Akonadi should support self-updating virtual folders (if necessary storing the result ids), by sending the query to all involved resources and strigi/nepomuk and merging the results

- updating virtual folders still needs to be discussed
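The merging step for virtual folders could be as simple as a duplicate-free union of the result ids returned by each source. The sketch below assumes every source (a resource, strigi/nepomuk) returns a list of item ids; the function `merge_results` is a hypothetical name for this example, and a plain union is only one possible merge policy.

```python
def merge_results(*result_lists):
    """Union of result ids from several sources, keeping first-seen order."""
    seen = set()
    merged = []
    for results in result_lists:
        for item_id in results:
            if item_id not in seen:
                seen.add(item_id)
                merged.append(item_id)
    return merged

imap_hits = [3, 1]      # ids a resource found with its own search
nepomuk_hits = [1, 2]   # ids returned by the external index
folder_content = merge_results(imap_hits, nepomuk_hits)
```

Storing `folder_content` would correspond to the "if necessary storing the result ids" remark above.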

Server-Side Collection Handling

- switch from user-visible paths to unique ids for identifying collections

- do not store complete path but a pointer to parent collection

- will completely break the IMAP LIST command -> rename and keep the option for an IMAP-compatible LIST command
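A small sketch of what the parent pointer approach means in practice: each collection stores only its own name and the id of its parent, and a full path (as an IMAP-compatible LIST would need) is computed on demand. The dictionary layout, the "/" separator and the helper names `collection_path` and `rename` are assumptions for this example.

```python
def collection_path(collections, collection_id, separator="/"):
    """Build the full path by walking parent pointers up to the root."""
    parts = []
    current = collection_id
    while current is not None:
        name, parent = collections[current]
        parts.append(name)
        current = parent
    return separator.join(reversed(parts))

def rename(collections, collection_id, new_name):
    # Only one record changes; child collections are untouched because
    # they refer to the parent by id, not by path.
    _, parent = collections[collection_id]
    collections[collection_id] = (new_name, parent)

# collection id -> (name, parent id or None for a top-level collection)
collections = {1: ("INBOX", None), 2: ("Work", 1), 3: ("KDE", 2)}
before = collection_path(collections, 3)
rename(collections, 2, "Job")
after = collection_path(collections, 3)
```

With stored paths, the same rename would have required rewriting the path of every collection below the renamed one.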

Model/View

- our models still contain lots of stuff which Till told us we shouldn't do; the advice is to use QModelIndex only for the QAbstractItemView model and solve everything else via data()

General

- KDE dependencies are allowed for now to save work, but avoid KDE dependencies where possible

- next Akonadi meeting in Berlin in April

David's Report

It was good to meet the other developers so as to put faces to the names which I'd previously only encountered on the mailing lists. I now feel more part of the team - about time, since I've been involved in KDE PIM for over 5 years. Getting a better perspective on the issues affecting KDE PIM as a whole (particularly Akonadi) was also very useful.

It was extremely useful technically. The biggest benefit was the session on the Qt 4 model-view framework which gave me enough understanding to go ahead and start converting KAlarm to use it. My initial partial implementation actually displayed some data! And it should enable some additional features to be coded very easily compared to the Qt 3 way of doing things. I've seen the light, halleluiah. :-)

Being able to easily ask questions of other people also contributed to finally getting D-Bus to work in KAlarm.

It also emerged in the meeting that in relation to KDE 4 conversion, KAlarm is in a similar state to most of the other KDE PIM applications: there is a lot to be done, but it isn't necessary to wait for Akonadi before doing it. It makes me feel a lot more positive about getting on with the conversion, which makes that small part of KDE that much more likely to be ready for the KDE 4 release.

Impressions

Photos from the meeting: Mailody, Proko, Hacking, Conclusions.

Press Coverage