Guidelines and HOWTOs/UnitTests: Difference between revisions

(You need "include(KDE4Defaults)" in CMakeLists.txt for the test make target to be generated.) |

Gjditchfield (talk | contribs) (Mention ecm_add_tests, link to ECM documentation.) |


| (43 intermediate revisions by 24 users not shown) | |||

| Line 1: | Line 1: | ||

: '''Author:''' Brad Hards, Sigma Bravo Pty Limited | |||

'''Author:''' Brad Hards, Sigma Bravo Pty Limited | |||

== Abstract == | == Abstract == | ||

This article provides guidance on writing unittests for | This article provides guidance on writing unittests for software based on Qt | ||

and KDE frameworks. It uses the [https://doc.qt.io/qt-5/qttest-index.html QtTestLib framework] provided starting with Qt 4.1. It provides an introduction to the ideas behind unit testing, tutorial material on the [https://doc.qt.io/qt-5/qttest-index.html QtTestLib framework], and suggestions for getting the most value for your effort. | |||

== About Unit Testing == | == About Unit Testing == | ||

A unit test is a test that checks the functionality, behaviour and correctness of a single software component. In | A unit test is a test that checks the functionality, behaviour and correctness of a single software component. In Qt code unit tests are almost always used to test a single C++ class (although testing a macro or C function is also possible). | ||

Unit tests are a key part of Test Driven Development, but they are useful for all software development processes. It is not essential that all of the code is covered by unit tests (although that is obviously very desirable!). Even a single test is a useful step towards improving code quality. | Unit tests are a key part of Test Driven Development, but they are useful for all software development processes. It is not essential that all of the code is covered by unit tests (although that is obviously very desirable!). Even a single test is a useful step towards improving code quality. | ||

| Line 24: | Line 22: | ||

==About QtTestLib== | ==About QtTestLib== | ||

QtTestLib is a lightweight testing library developed by | QtTestLib is a lightweight testing library developed by the Qt Project and released under the LGPL (a commercial version is also available, for those who need alternative licensing). It is written in C++, and is cross-platform. It is provided as part of the tools included in Qt. | ||

In addition to normal unit testing capabilities, QtTestLib also offers basic GUI testing, based on sending QEvents. This allows you to test GUI widgets, but is not generally suitable for testing full applications. | In addition to normal unit testing capabilities, QtTestLib also offers basic GUI testing, based on sending QEvents. This allows you to test GUI widgets, but is not generally suitable for testing full applications. | ||

| Line 32: | Line 30: | ||

== Tutorial 1: A simple test of a date class == | == Tutorial 1: A simple test of a date class == | ||

In this tutorial, we will build a simple test for a class that represents a date, using QtTestLib as the test framework. To avoid too much detail on how the date class works, we'll just use the QDate | In this tutorial, we will build a simple test for a class that represents a date, using QtTestLib as the test framework. To avoid too much detail on how the date class works, we'll just use the QDate class that comes with Qt. In a normal unittest, you would more likely be testing code that you've written yourself. | ||

The code below is the entire testcase. | The code below is the entire testcase. | ||

| Line 38: | Line 36: | ||

'''Example 1. QDate test code''' | '''Example 1. QDate test code''' | ||

< | <syntaxhighlight lang="cpp-qt" line="GESHI_NORMAL_LINE_NUMBERS"> | ||

#include < | #include <QTest> | ||

#include < | #include <QDate> | ||

class testDate: public QObject | class testDate: public QObject | ||

| Line 61: | Line 59: | ||

// 11 March 1967 | // 11 March 1967 | ||

QDate date; | QDate date; | ||

date. | date.setDate( 1967, 3, 11 ); | ||

QCOMPARE( date.month(), 3 ); | QCOMPARE( date.month(), 3 ); | ||

QCOMPARE( QDate::longMonthName(date.month()), | QCOMPARE( QDate::longMonthName(date.month()), | ||

| Line 70: | Line 68: | ||

QTEST_MAIN(testDate) | QTEST_MAIN(testDate) | ||

#include "tutorial1.moc" | #include "tutorial1.moc" | ||

</ | </syntaxhighlight> | ||

Save as autotests/tutorial1.cpp in your project's autotests directory, following the example of [https://invent.kde.org/graphics/okular Okular]. | |||

Stepping through the code, the first line imports the header files for the QtTest namespace. The second line imports the headers for the | Stepping through the code, the first line imports the header files for the QtTest namespace. The second line imports the headers for the QDate class. Lines 4 to 10 give us the test class, testDate. Note that testDate inherits from QObject and has the Q_OBJECT macro - QtTestLib requires specific Qt functionality that is present in QObject. | ||

Lines 12 to 17 provide our first test, which checks that a date is valid. Note the use of the QVERIFY macro, which checks that the condition is true. So if date.isValid() returns true, then the test will pass, otherwise the test will fail. QVERIFY is similar to ASSERT in other test suites. | Lines 12 to 17 provide our first test, which checks that a date is valid. Note the use of the QVERIFY macro, which checks that the condition is true. So if <tt>date.isValid()</tt> returns true, then the test will pass, otherwise the test will fail. QVERIFY is similar to ASSERT in other test suites. | ||

Similarly, lines 19 to 27 provide another test, which checks a setter, and a couple of accessor routines. In this case, we are using QCOMPARE, which checks that the conditions are equal. So if date.month() returns 3, then that part of that test will pass, otherwise the test will fail. | Similarly, lines 19 to 27 provide another test, which checks a setter, and a couple of accessor routines. In this case, we are using QCOMPARE, which checks that the conditions are equal. So if date.month() returns 3, then that part of that test will pass, otherwise the test will fail. | ||

{{Warning|As soon as a QVERIFY evaluates to false or a QCOMPARE does not have two equal values, the whole test is marked as failed and the next test will be | {{Warning|As soon as a QVERIFY evaluates to false or a QCOMPARE does not have two equal values, the whole test is marked as failed and the next test will be started. So in the example above, if the check at line 24 fails, then the checks at lines 25 and 26 will not be run.}} | ||

In a later tutorial we will see how to work around problems that this behaviour can cause. | In a later tutorial we will see how to work around problems that this behaviour can cause. | ||
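The early-exit behaviour described in the warning can be illustrated without Qt. The following is a minimal sketch only: <tt>SKETCH_VERIFY</tt>, <tt>g_log</tt> and the two test functions are hypothetical names invented for this illustration, and the macro is a simplified stand-in, not the real QVERIFY. It shows why a failed check ends the current test function while the next test function still runs: the macro simply returns from the enclosing function.

```cpp
#include <string>
#include <vector>

// Illustrative log of check results; not part of Qt.
static std::vector<std::string> g_log;

// Hypothetical stand-in for QVERIFY: on failure it records the problem and
// returns from the enclosing test function, so later checks are not reached.
#define SKETCH_VERIFY(cond)                                   \
    do {                                                      \
        if (!(cond)) {                                        \
            g_log.push_back(std::string("FAIL: ") + #cond);   \
            return;                                           \
        }                                                     \
        g_log.push_back(std::string("ok: ") + #cond);         \
    } while (false)

void testMonthSketch()
{
    int month = 4;                 // pretend date.month() returned 4
    SKETCH_VERIFY(month == 3);     // fails, so the function returns here
    SKETCH_VERIFY(month >= 1);     // never reached
}

void testValiditySketch()
{
    bool valid = true;
    SKETCH_VERIFY(valid);          // still runs: it is a separate test function
}

// Runs both sketch tests in order and returns what was logged.
std::vector<std::string> runSketch()
{
    g_log.clear();
    testMonthSketch();
    testValiditySketch();
    return g_log;
}
```

Calling <tt>runSketch()</tt> logs one failure for the first function and one success for the second; the second check in <tt>testMonthSketch</tt> leaves no trace because it is never executed.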

| Line 84: | Line 83: | ||

Line 30 uses the QTEST_MAIN macro, which creates an entry point routine for us, with appropriate calls to invoke the testDate unit test class. | Line 30 uses the QTEST_MAIN macro, which creates an entry point routine for us, with appropriate calls to invoke the testDate unit test class. | ||

Line 31 includes the Meta-Object compiler output, so we can make use of our | Line 31 includes the Meta-Object compiler output, so we can make use of our QObject functionality. | ||

The qmake project file that corresponds to that code is shown below. You would then use qmake to turn this into a Makefile and then compile it with make. | The qmake project file that corresponds to that code is shown below. You would then use qmake to turn this into a Makefile and then compile it with make. | ||

'''Example 2. QDate unit test project''' | '''Example 2. QDate unit test project''' | ||

< | <pre> | ||

CONFIG += qtestlib | CONFIG += qtestlib | ||

TEMPLATE = app | TEMPLATE = app | ||

| Line 98: | Line 97: | ||

# Input | # Input | ||

SOURCES += tutorial1.cpp | SOURCES += tutorial1.cpp | ||

</ | </pre> | ||

Save as tutorial1.pro. | |||

This is a fairly normal project file, except for the addition of the < | This is a fairly normal project file, except for the addition of the <tt>CONFIG += qtestlib</tt>. This adds the right header and library setup to the Makefile. | ||

Create an empty file called tutorial1.h and compile with <tt>qmake; make</tt>. The output looks like the following: | |||

'''Example 3. QDate unit test output''' | '''Example 3. QDate unit test output''' | ||

< | <pre> | ||

$ ./tutorial1 | $ ./tutorial1 | ||

********* Start testing of testDate ********* | ********* Start testing of testDate ********* | ||

| Line 115: | Line 115: | ||

Totals: 4 passed, 0 failed, 0 skipped | Totals: 4 passed, 0 failed, 0 skipped | ||

********* Finished testing of testDate ********* | ********* Finished testing of testDate ********* | ||

</ | </pre> | ||

Looking at the output above, you can see that the output includes the version of the test library and Qt itself, and then the status of each test that is run. In addition to the testValidity and testMonth tests that we defined, there is also a setup routine (initTestCase) and a teardown routine (cleanupTestCase) that can be used to do additional configuration if required. | Looking at the output above, you can see that the output includes the version of the test library and Qt itself, and then the status of each test that is run. In addition to the testValidity and testMonth tests that we defined, there is also a setup routine (initTestCase) and a teardown routine (cleanupTestCase) that can be used to do additional configuration if required. | ||

| Line 124: | Line 124: | ||

'''Example 4. QDate unit test output showing failure''' | '''Example 4. QDate unit test output showing failure''' | ||

< | <pre> | ||

$ ./tutorial1 | $ ./tutorial1 | ||

********* Start testing of testDate ********* | ********* Start testing of testDate ********* | ||

| Line 137: | Line 137: | ||

Totals: 3 passed, 1 failed, 0 skipped | Totals: 3 passed, 1 failed, 0 skipped | ||

********* Finished testing of testDate ********* | ********* Finished testing of testDate ********* | ||

</ | </pre> | ||

===Running selected tests=== | ===Running selected tests=== | ||

| Line 145: | Line 145: | ||

'''Example 5. QDate unit test output - selected function''' | '''Example 5. QDate unit test output - selected function''' | ||

< | <pre> | ||

$ ./tutorial1 testValidity | $ ./tutorial1 testValidity | ||

********* Start testing of testDate ********* | ********* Start testing of testDate ********* | ||

| Line 154: | Line 154: | ||

Totals: 3 passed, 0 failed, 0 skipped | Totals: 3 passed, 0 failed, 0 skipped | ||

********* Finished testing of testDate ********* | ********* Finished testing of testDate ********* | ||

</ | </pre> | ||

Note that the initTestCase and cleanupTestCase routines are always run, so that any necessary setup and cleanup will still be done. | Note that the initTestCase and cleanupTestCase routines are always run, so that any necessary setup and cleanup will still be done. | ||

| Line 161: | Line 161: | ||

'''Example 6. QDate unit test output - listing functions''' | '''Example 6. QDate unit test output - listing functions''' | ||

< | <pre> | ||

$ ./tutorial1 -functions | $ ./tutorial1 -functions | ||

testValidity() | testValidity() | ||

testMonth() | testMonth() | ||

</ | </pre> | ||

===Verbose output options=== | ===Verbose output options=== | ||

| Line 173: | Line 173: | ||

'''Example 7. QDate unit test output - verbose output''' | '''Example 7. QDate unit test output - verbose output''' | ||

< | <pre> | ||

$ ./tutorial1 -v1 | $ ./tutorial1 -v1 | ||

********* Start testing of testDate ********* | ********* Start testing of testDate ********* | ||

| Line 187: | Line 187: | ||

Totals: 4 passed, 0 failed, 0 skipped | Totals: 4 passed, 0 failed, 0 skipped | ||

********* Finished testing of testDate ********* | ********* Finished testing of testDate ********* | ||

</ | </pre> | ||

The -v2 option shows each QVERIFY, QCOMPARE and QTEST, as well as the message on entering each test function. I found this useful for verifying that a particular step is being run. This is shown below: | The -v2 option shows each QVERIFY, QCOMPARE and QTEST, as well as the message on entering each test function. I found this useful for verifying that a particular step is being run. This is shown below: | ||

| Line 193: | Line 193: | ||

'''Example 8. QDate unit test output - more verbose output''' | '''Example 8. QDate unit test output - more verbose output''' | ||

< | <pre> | ||

$ ./tutorial1 -v2 | $ ./tutorial1 -v2 | ||

********* Start testing of testDate ********* | ********* Start testing of testDate ********* | ||

| Line 213: | Line 213: | ||

Totals: 4 passed, 0 failed, 0 skipped | Totals: 4 passed, 0 failed, 0 skipped | ||

********* Finished testing of testDate ********* | ********* Finished testing of testDate ********* | ||

</ | </pre> | ||

The -vs option shows each signal that is emitted. In our example, there are no signals, so -vs has no effect. Getting a list of signals is useful for debugging failing tests, especially GUI tests which we will see in the third tutorial. | The -vs option shows each signal that is emitted. In our example, there are no signals, so -vs has no effect. Getting a list of signals is useful for debugging failing tests, especially GUI tests which we will see in the third tutorial. | ||

| Line 228: | Line 228: | ||

'''Example 9. QDate test code, data driven version''' | '''Example 9. QDate test code, data driven version''' | ||

< | <syntaxhighlight lang="cpp-qt" line="GESHI_NORMAL_LINE_NUMBERS"> | ||

#include <QtTest> | #include <QtTest> | ||

#include <QtCore> | #include <QtCore> | ||

| Line 270: | Line 270: | ||

QDate date; | QDate date; | ||

date. | date.setDate( year, month, day); | ||

QCOMPARE( date.month(), month ); | QCOMPARE( date.month(), month ); | ||

QCOMPARE( QDate::longMonthName(date.month()), monthName ); | QCOMPARE( QDate::longMonthName(date.month()), monthName ); | ||

| Line 278: | Line 278: | ||

QTEST_MAIN(testDate) | QTEST_MAIN(testDate) | ||

#include "tutorial2.moc" | #include "tutorial2.moc" | ||

</ | </syntaxhighlight> | ||

As you can see, we've introduced a new method - testMonth_data, and moved the specific test date out of testMonth. We've had to add some more code (which will be explained soon), but the result is a separation of the data we are testing, and the code we are using to test it. | As you can see, we've introduced a new method - testMonth_data, and moved the specific test date out of testMonth. We've had to add some more code (which will be explained soon), but the result is a separation of the data we are testing, and the code we are using to test it. | ||

The names of the functions are important - you must use the _data suffix for the data setup routine, and the first part of the data setup routine must match the name of the driver routine. | The names of the functions are important - you must use the _data suffix for the data setup routine, and the first part of the data setup routine must match the name of the driver routine. | ||

It is useful to visualise the data as being a table, where the columns are the various data values required for a single run through the driver, and the rows are different runs. In our example, there are four columns (three integers, one for each part of the date; and one QString ), added in lines | It is useful to visualise the data as being a table, where the columns are the various data values required for a single run through the driver, and the rows are different runs. In our example, there are four columns (three integers, one for each part of the date; and one QString ), added in lines 23 through 30. The addColumn template obviously requires the type of variable to be added, and also requires a variable name argument. We then add as many rows as required using the newRow function, as shown in lines 23 through 26. The string argument to newRow is a label, which is handy for determining what is going on with failing tests, but doesn't have any effect on the test itself. | ||

To use the data, we simply use QFETCH to obtain the appropriate data from each row. The arguments to QFETCH are the type of the variable to fetch, and the name of the column (which is also the local name of the variable it gets fetched into). You can then use this data in a QCOMPARE or QVERIFY check. The code is run for each row, which you can see below: | To use the data, we simply use QFETCH to obtain the appropriate data from each row. The arguments to QFETCH are the type of the variable to fetch, and the name of the column (which is also the local name of the variable it gets fetched into). You can then use this data in a QCOMPARE or QVERIFY check. The code is run for each row, which you can see below: | ||

| Line 289: | Line 289: | ||

'''Example 10. Results of data driven testing, showing QFETCH''' | '''Example 10. Results of data driven testing, showing QFETCH''' | ||

< | <pre> | ||

$ ./tutorial2 -v2 | $ ./tutorial2 -v2 | ||

********* Start testing of testDate ********* | ********* Start testing of testDate ********* | ||

| Line 317: | Line 317: | ||

Totals: 4 passed, 0 failed, 0 skipped | Totals: 4 passed, 0 failed, 0 skipped | ||

********* Finished testing of testDate ********* | ********* Finished testing of testDate ********* | ||

</ | </pre> | ||
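The table view of data-driven testing described above can also be sketched without Qt. This is an illustration under stated assumptions, not QtTestLib code: <tt>MonthRow</tt>, <tt>monthRows</tt>, <tt>longMonthNameSketch</tt> and <tt>runMonthChecks</tt> are hypothetical names, and every row other than <tt>1967/3/11</tt> is made up for the example. <tt>monthRows()</tt> plays the role of <tt>testMonth_data</tt> (data only), and <tt>runMonthChecks()</tt> plays the role of <tt>testMonth</tt> (one pass per row).

```cpp
#include <string>
#include <vector>

// One row of the table: a label plus one value per column.
struct MonthRow {
    std::string label;      // plays the role of the newRow() label
    int year;
    int month;
    int day;
    std::string monthName;  // expected result for this row
};

// Data setup only, no checks. Rows other than the first are illustrative.
std::vector<MonthRow> monthRows()
{
    return {
        {"1967/3/11", 1967, 3, 11, "March"},
        {"1966/1/10", 1966, 1, 10, "January"},
        {"2000/12/31", 2000, 12, 31, "December"},
    };
}

// Hypothetical function under test: English month name, empty if out of range.
std::string longMonthNameSketch(int month)
{
    static const char *const names[] = {
        "January", "February", "March", "April", "May", "June",
        "July", "August", "September", "October", "November", "December"
    };
    if (month < 1 || month > 12)
        return std::string();
    return names[month - 1];
}

// Driver: runs the same check once per row and returns the labels of the
// rows that failed, which is what makes the row label useful in reports.
std::vector<std::string> runMonthChecks()
{
    std::vector<std::string> failed;
    for (const MonthRow &row : monthRows()) {
        if (longMonthNameSketch(row.month) != row.monthName)
            failed.push_back(row.label);
    }
    return failed;
}
```

Adding another case means adding one row to <tt>monthRows()</tt>; the driver does not change. That separation is the main benefit the data-driven style is meant to give.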

=== The QTEST macro === | === The QTEST macro === | ||

| Line 325: | Line 325: | ||

'''Example 11. QDate test code, data driven version using QTEST''' | '''Example 11. QDate test code, data driven version using QTEST''' | ||

< | <syntaxhighlight lang="cpp-qt" line="GESHI_NORMAL_LINE_NUMBERS"> | ||

void testDate::testMonth() | void testDate::testMonth() | ||

{ | { | ||

| Line 333: | Line 333: | ||

QDate date; | QDate date; | ||

date. | date.setDate( year, month, day); | ||

QCOMPARE( date.month(), month ); | QCOMPARE( date.month(), month ); | ||

QTEST( QDate::longMonthName(date.month()), "monthName" ); | QTEST( QDate::longMonthName(date.month()), "monthName" ); | ||

} | } | ||

</ | </syntaxhighlight> | ||

In the example above, note that | In the example above, note that monthName is enclosed in quotes, and we no longer have a QFETCH call for monthName. | ||

The other QCOMPARE could also have been converted to use QTEST, however this would be less efficient, because we already needed to use QFETCH to get month for the | The other QCOMPARE could also have been converted to use QTEST, however this would be less efficient, because we already needed to use QFETCH to get month for the setDate in the line above. | ||

===Running selected tests with selected data=== | ===Running selected tests with selected data=== | ||

| Line 348: | Line 348: | ||

'''Example 12. QDate unit test output - selected function and data''' | '''Example 12. QDate unit test output - selected function and data''' | ||

< | <pre> | ||

$ ./tutorial2 -v2 testMonth:1967/3/11 | $ ./tutorial2 -v2 testMonth:1967/3/11 | ||

********* Start testing of testDate ********* | ********* Start testing of testDate ********* | ||

| Line 364: | Line 364: | ||

Totals: 3 passed, 0 failed, 0 skipped | Totals: 3 passed, 0 failed, 0 skipped | ||

********* Finished testing of testDate ********* | ********* Finished testing of testDate ********* | ||

</ | </pre> | ||

==Tutorial 3: Testing Graphical User Interfaces== | ==Tutorial 3: Testing Graphical User Interfaces== | ||

| Line 372: | Line 372: | ||

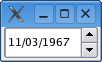

Again, we'll use an existing class as our test environment, and again it will be date related - the standard Qt {{qt|QDateEdit}} class. For those not familiar with this class, it is a simple date entry widget (although with some powerful back end capabilities). A picture of the widget is shown below. | Again, we'll use an existing class as our test environment, and again it will be date related - the standard Qt {{qt|QDateEdit}} class. For those not familiar with this class, it is a simple date entry widget (although with some powerful back end capabilities). A picture of the widget is shown below. | ||

[[Image:Qdateedit_dlg.png| | [[Image:Qdateedit_dlg.png|Thumb|'''Figure 1. QDateEdit widget screenshot''']] | ||

The way QtTestLib provides GUI testing is by injecting {{qt|QInputEvent}} events. To the application, these input events appear the same as normal key press/release and mouse clicks/drags. However the mouse and keyboard are unaffected, so that you can continue to use the machine normally while tests are being run. | The way QtTestLib provides GUI testing is by injecting {{qt|QInputEvent}} events. To the application, these input events appear the same as normal key press/release and mouse clicks/drags. However the mouse and keyboard are unaffected, so that you can continue to use the machine normally while tests are being run. | ||

| Line 380: | Line 380: | ||

'''Example 13. QDateEdit test code''' | '''Example 13. QDateEdit test code''' | ||

< | <syntaxhighlight lang="cpp-qt" line="GESHI_NORMAL_LINE_NUMBERS"> | ||

#include <QtTest> | #include <QtTest> | ||

#include <QtCore> | #include <QtCore> | ||

| Line 456: | Line 456: | ||

QTEST_MAIN(testDateEdit) | QTEST_MAIN(testDateEdit) | ||

#include "tutorial3.moc" | #include "tutorial3.moc" | ||

</ | </syntaxhighlight> | ||

Much of this code is common with previous examples, so I'll focus on the new elements and the more important changes as we work through the code line-by-line. | Much of this code is common with previous examples, so I'll focus on the new elements and the more important changes as we work through the code line-by-line. | ||

| Line 464: | Line 464: | ||

Line 4 is a macro that is required for the data-driven part of this test, which I'll come to soon. | Line 4 is a macro that is required for the data-driven part of this test, which I'll come to soon. | ||

Lines | Lines 6 to 13 declare the test class - while the names have changed, it is pretty similar to the previous example. Note the testValidator and testValidator_data functions - we will be using data driven testing again in this example. | ||

Our first real test starts in line | Our first real test starts in line 15. Line 18 creates a QDate, and line 19 uses that date as the initial value for a QDateEdit widget. | ||

Lines | Lines 22 and 23 show how we can test what happens when we press the up-arrow key. The QTest::keyClick function takes a pointer to a widget, and a symbolic key name (a char or a Qt::Key). At line 23, we check that the effect of that event was to increment the date by a day. The QTest::keyClick function also takes an optional keyboard modifier (such as Qt::ShiftModifier for the shift key) and an optional delay value (in milliseconds). As an alternative to using QTest::keyClick, you can use QTest::keyPress and QTest::keyRelease to construct more complex keyboard sequences. | ||

Lines | Lines 27 to 33 show a similar test to the previous one, but in this case we are simulating a mouse click. We need to click in the lower right hand part of the widget (to hit the decrement arrow - see Figure 1), and that requires knowing how large the widget is. So lines 27 and 28 calculate the correct point based off the size of the widget. Line 30 (and the identical line 31) simulates clicking with the left-hand mouse button at the calculated point. The arguments to QTest::mouseClick are: | ||

*a pointer to the widget that the click event should be sent to. | *a pointer to the widget that the click event should be sent to. | ||

| Line 480: | Line 480: | ||

In addition to QTest::mouseClick, there is also QTest::mousePress, QTest::mouseRelease, QTest::mouseDClick (providing double-click) and QTest::mouseMove. The first three are used in the same way as QTest::mouseClick. The last takes a point to move the mouse to. You can use these functions in combination to simulate dragging with the mouse. | In addition to QTest::mouseClick, there is also QTest::mousePress, QTest::mouseRelease, QTest::mouseDClick (providing double-click) and QTest::mouseMove. The first three are used in the same way as QTest::mouseClick. The last takes a point to move the mouse to. You can use these functions in combination to simulate dragging with the mouse. | ||

Lines | Lines 35 and 36 show another approach to keyboard entry, using QTest::keyClicks. Where QTest::keyClick sends a single key press, QTest::keyClicks takes a QString (or something equivalent, in line 35 a character array) that represents a sequence of key clicks to send. The other arguments are the same. | ||

Lines | Lines 38 to 40 show how you may need to use a combination of functions. After we've entered a new date in line 35, the cursor is at the end of the widget. At line 38, we use a Shift-Tab combination to move the cursor back to the month value. Then at line 39 we enter a new month value. Of course we could have used individual calls to QTest::keyClick, however that wouldn't have been as clear, and would also have required more code. | ||

=== Data-driven GUI testing === | === Data-driven GUI testing === | ||

Lines | Lines 61 to 72 show a data-driven test - in this case we are checking that the validator on QDateEdit is performing as expected. This is a case where data-driven testing can really help to ensure that things are working the way they should. | ||

At lines | At lines 63 to 65, we fetch in an initial value, a series of key-clicks, and an expected result. These are the columns that are set up in lines 47 to 49. Note, however, that we are now pulling in a QDate, where in previous examples we used three integers and then built the QDate from those. QDate isn't a registered type for {{qt|QMetaType}}, so we need to register it before we can use it in our data-driven testing. This is done using the Q_DECLARE_METATYPE macro in line 4 and the qRegisterMetaType function in line 45. | ||

Lines | Lines 51 to 57 add in a couple of sample rows. Lines 51 to 53 represent a case where the input is valid, and lines 55 to 57 are a case where the input is only partly valid (the day part). A real test will obviously contain far more combinations than this. | ||

Those test rows are actually tested in lines | Those test rows are actually tested in lines 67 to 71. We construct the QDateEdit widget in line 67, using the initial value. We then send an Enter key click in line 69, which is required to get the widget into edit mode. At line 70 we simulate the data entry, and at line 71 we check whether the results are what was expected. | ||

Lines | Lines 74 and 75 are the same as we've seen in previous examples. | ||

=== Re-using test elements === | === Re-using test elements === | ||

| Line 505: | Line 505: | ||

'''Example 14. QDateEdit test code, using QTestEventList''' | '''Example 14. QDateEdit test code, using QTestEventList''' | ||

< | <syntaxhighlight lang="cpp-qt" line="GESHI_NORMAL_LINE_NUMBERS"> | ||

#include <QtTest> | #include <QtTest> | ||

#include <QtCore> | #include <QtCore> | ||

| Line 595: | Line 595: | ||

QTEST_MAIN(testDateEdit) | QTEST_MAIN(testDateEdit) | ||

#include "tutorial3a.moc" | #include "tutorial3a.moc" | ||

</ | </syntaxhighlight> | ||

This example is pretty much the same as the previous version, up to line | This example is pretty much the same as the previous version, up to line 28. In line 30, we create a QTestEventList. We add events to the list in lines 31 and 32 - note that we don't specify the widget we are calling them on at this stage. In line 34, we simulate each event on the widget. If we had multiple widgets, we could call simulate using the same set of events. | ||

Lines | Lines 35 to 39 are as per the previous example. | ||

We create another list in lines | We create another list in lines 41 to 43, although this time we are using addKeyClick and addKeyClicks instead of adding mouse events. Note that an event list can contain combinations of mouse and keyboard events - it just didn't make sense in this test to have such a combination. We use the second list at line 44, and check the results in line 45. | ||

You can also build lists of events in data driven testing, as shown in lines | You can also build lists of events in data driven testing, as shown in lines 48 to 86. The key difference is that instead of fetching a QString in each row, we are fetching a QTestEventList. This requires that we add a column of QTestEventList, rather than QString (see line 53). At lines 56 to 59, we create a list of events. At line 62 we add those events to the applicable row. We create a second list at lines 65 to 67, and add that second list to the applicable row in line 70. | ||

We fetch the events in line | We fetch the events in line 78, and use them in line 83. If we had multiple widgets, then we could use the same event list several times. | ||
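The record-then-replay idea behind an event list can be sketched in plain C++. This is a simplified model only, not QTestEventList itself: <tt>DayCounter</tt>, <tt>EventListSketch</tt>, <tt>addStep</tt> and <tt>replayOnTwoTargets</tt> are hypothetical names, and a "widget" here is reduced to a single day number. The point it illustrates is that steps are recorded without naming a target, so the same list can be simulated on several targets.

```cpp
#include <functional>
#include <utility>
#include <vector>

// Hypothetical stand-in for a date widget: it only tracks a day number.
struct DayCounter {
    int day;
};

// Records steps without a target, then replays them on any target.
class EventListSketch {
public:
    // Record a step that changes the day by delta (e.g. -1 for "down").
    void addStep(int delta)
    {
        steps_.push_back([delta](DayCounter &w) { w.day += delta; });
    }

    // Replay every recorded step, in order, on the given target.
    void simulate(DayCounter &target) const
    {
        for (const auto &step : steps_)
            step(target);
    }

private:
    std::vector<std::function<void(DayCounter &)>> steps_;
};

// Builds one list and replays it on two independent targets.
std::pair<int, int> replayOnTwoTargets()
{
    EventListSketch events;
    events.addStep(-1);   // first "click" on the decrement arrow
    events.addStep(-1);   // second "click"

    DayCounter first{11};
    DayCounter second{20};
    events.simulate(first);
    events.simulate(second);
    return {first.day, second.day};
}
```

Because the list holds no reference to a particular target, building it once and replaying it keeps the test shorter than repeating the individual event calls for each widget.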

==Tutorial 4 - Testing for failure and avoiding tests== | ==Tutorial 4 - Testing for failure and avoiding tests== | ||

| Line 620: | Line 620: | ||

'''Example 15. Unit test showing skipped tests''' | '''Example 15. Unit test showing skipped tests''' | ||

< | <syntaxhighlight lang="cpp-qt" line="GESHI_NORMAL_LINE_NUMBERS"> | ||

void testDate::testSkip_data() | void testDate::testSkip_data() | ||

{ | { | ||

| Line 644: | Line 644: | ||

QCOMPARE( val1, val2 ); | QCOMPARE( val1, val2 ); | ||

} | } | ||

</ | </syntaxhighlight> | ||

'''Example 16. Output of unit test showing skipped tests''' | '''Example 16. Output of unit test showing skipped tests''' | ||

< | <pre> | ||

$ ./tutorial4 testSkip -v2 | $ ./tutorial4 testSkip -v2 | ||

********* Start testing of testDate ********* | ********* Start testing of testDate ********* | ||

| Line 667: | Line 667: | ||

Totals: 3 passed, 0 failed, 2 skipped | Totals: 3 passed, 0 failed, 2 skipped | ||

********* Finished testing of testDate ********* | ********* Finished testing of testDate ********* | ||

</ | </pre> | ||

From the verbose output, you can see that the test was run on the first and third rows. The second row wasn't run because of the QSKIP call with a SkipSingle argument. Similarly, the fourth and fifth rows weren't run because the fourth row triggered a QSKIP call with a SkipAll argument. | From the verbose output, you can see that the test was run on the first and third rows. The second row wasn't run because of the QSKIP call with a SkipSingle argument. Similarly, the fourth and fifth rows weren't run because the fourth row triggered a QSKIP call with a SkipAll argument. | ||

| Line 679: | Line 679: | ||

'''Example 17. Unit test showing expected failures''' | '''Example 17. Unit test showing expected failures''' | ||

< | <syntaxhighlight lang="cpp-qt" line="GESHI_NORMAL_LINE_NUMBERS"> | ||

void testDate::testExpectedFail() | void testDate::testExpectedFail() | ||

{ | { | ||

| Line 691: | Line 691: | ||

QCOMPARE( 3, 3 ); | QCOMPARE( 3, 3 ); | ||

} | } | ||

</ | </syntaxhighlight> | ||

'''Example 18. Output of unit test showing expected failures''' | '''Example 18. Output of unit test showing expected failures''' | ||

< | <pre> | ||

$ ./tutorial4/tutorial4 testExpectedFail -v2 | $ ./tutorial4/tutorial4 testExpectedFail -v2 | ||

********* Start testing of testDate ********* | ********* Start testing of testDate ********* | ||

| Line 721: | Line 721: | ||

Totals: 3 passed, 0 failed, 0 skipped | Totals: 3 passed, 0 failed, 0 skipped | ||

********* Finished testing of testDate ********* | ********* Finished testing of testDate ********* | ||

</ | </pre> | ||

As you can see from the verbose output, we expect a failure each time we do a QCOMPARE( 1, 2);. In the first call to QEXPECT_FAIL, we use the Continue argument, so the rest of the tests will still be run. However, in the second call to QEXPECT_FAIL we use the Abort argument, and the test bails at this point. Generally it is better to use Continue unless you have a lot of closely related tests that would each need a QEXPECT_FAIL entry. | As you can see from the verbose output, we expect a failure each time we do a QCOMPARE( 1, 2);. In the first call to QEXPECT_FAIL, we use the Continue argument, so the rest of the tests will still be run. However, in the second call to QEXPECT_FAIL we use the Abort argument, and the test bails at this point. Generally it is better to use Continue unless you have a lot of closely related tests that would each need a QEXPECT_FAIL entry. | ||

| Line 730: | Line 730: | ||

'''Example 19. Unit test showing unexpected pass'''

<syntaxhighlight lang="cpp-qt" line="GESHI_NORMAL_LINE_NUMBERS">
void testDate::testUnexpectedPass()
{
    // ...
    QCOMPARE( 3, 3 );
}
</syntaxhighlight>

'''Example 20. Output of unit test showing unexpected pass'''

<pre>
$ ./tutorial4/tutorial4 testUnexpectedPass -v2
********* Start testing of testDate *********
...
Totals: 2 passed, 2 failed, 0 skipped
********* Finished testing of testDate *********
</pre>

The effect of unexpected passes on the running of the test is controlled by the second argument to QEXPECT_FAIL. If the argument is Continue and the test unexpectedly passes, then the rest of the test function will be run. If the argument is Abort, then the test will stop.

'''Example 21. Unit test producing warning and debug messages'''

<syntaxhighlight lang="cpp-qt" line="GESHI_NORMAL_LINE_NUMBERS">
void testDate::testQdebug()
{
    // ...
    qCritical("critical");
}
</syntaxhighlight>

'''Example 22. Output of unit test producing warning and debug messages'''

<pre>
$ ./tutorial4 testQdebug
********* Start testing of testDate *********
...
Totals: 3 passed, 0 failed, 0 skipped
********* Finished testing of testDate *********
</pre>

Note that while this example produces the debug and warning messages within the test function (testQdebug), those messages would normally be propagated up from the code being tested. However, the source of the messages does not make any difference to how they are handled.

'''Example 23. Example of using ignoreMessage'''

<syntaxhighlight lang="cpp-qt">
void testDate::testValidity()
{
    // ...
    qWarning("validiti warning");
}
</syntaxhighlight>

'''Example 24. Output of ignoreMessage example'''

<pre>
$ ./tutorial4 testValidity testValiditi
********* Start testing of testDate *********
...
Totals: 3 passed, 1 failed, 0 skipped
********* Finished testing of testDate *********
</pre>

Note that the warning message in testDate::testValidity has been "swallowed" by the call to ignoreMessage.

By contrast, the warning message in testDate::testValiditi still causes a warning to be logged, because the ignoreMessage call does not match the text in the warning message. In addition, because we expected a particular warning message and it wasn't received, the testDate::testValiditi test function fails.

An important part of Qt programming is the use of signals and slots. This section covers the support for testing of these features.

{{Tip|'''Note:''' If you are not familiar with Qt signals and slots, you probably should review the introduction to this feature provided in your Qt documentation. It is also available at http://doc.qt.io/qt-5/signalsandslots.html.}}

===Testing slots===

'''Example 25. QLabel test code, showing testing of a couple of slots'''

<syntaxhighlight lang="cpp-qt" line="GESHI_NORMAL_LINE_NUMBERS">
#include <QtTest>
#include <QtCore>
// ...
QTEST_MAIN(testLabel)
#include "tutorial5.moc"
</syntaxhighlight>

===Testing signals===

Testing of signals is a little more difficult than testing of slots; however, Qt offers a very useful class called QSignalSpy that helps a lot.

{{qt|QSignalSpy}} is a class provided with Qt that allows you to record the signals that have been emitted from a particular QObject subclass object. You can then check that the right number of signals have been emitted and that the right kind of signals were emitted. You can find more information on the QSignalSpy class in your Qt documentation.

An example of how you can use QSignalSpy to test a class that has signals is shown below.

'''Example 26. QCheckBox test code, showing testing of signals'''

<syntaxhighlight lang="cpp-qt" line="GESHI_NORMAL_LINE_NUMBERS">
#include <QtTest>
#include <QtCore>
// ...
{
    // You don't need to use an object created with "new" for
    // QSignalSpy, I just needed it in this case to test the emission
    // of a destroyed() signal.
    QCheckBox *xbox = new QCheckBox;
    // ...
QTEST_MAIN(testCheckBox)
#include "tutorial5a.moc"
</syntaxhighlight>

The first 13 lines are essentially unchanged from previous examples that we've seen. Line 17 creates the object that will be tested - as noted in the comments in lines 14-16, the only reason that I'm creating it with new is because I need to delete it in line 59 to cause the destroyed() signal to be emitted.

Line 23 sets up the first of our two QSignalSpy instances. The one in line 23 monitors the stateChanged(int) signal, and the one in line 34 monitors the destroyed() signal. If you get the name or signature of the signal wrong (for example, if you use stateChanged() instead of stateChanged(int)), then this will not be caught at compile time, but will result in a runtime failure. You can test if things were set up correctly using isValid(), as shown in lines 27 and 35.

As shown in line 40, there is no reason why you cannot test normal methods, signals and slots in the same test.

Line 46 changes the state of the object under test, which is supposed to result in a stateChanged(int) signal being emitted. Line 47 checks that the number of signals increases from zero to one. The process is repeated in lines 49 and 50, and again in lines 52 and 53.

Line 56 deletes the object under test, and line 59 tests that the destroyed() signal has been emitted.

For signals that have arguments (such as our stateChanged(int) signal), you may also wish to check that the arguments were correct. You can do this by looking at the list of signal arguments. Exactly how you do this is fairly flexible; however, for simple tests like the one in the example, you can manually work through the list using takeFirst() and check that each argument is correct. This is shown in lines 65, 68 and 70 for the first signal. The same approach is shown in lines 73, 75 and 76 for the second signal, and in lines 79 to 81 for the third signal. For a more complex set of tests, you may wish to apply some data driven techniques.

{{Tip|'''Note:''' You should be aware that, for some class implementations, you may need to return control to the event loop to have signals emitted. If you need this, try using the {{qt|QTest}}::qWait() function.}}

==Tutorial 6: Integrating with CMake==

The KDE build tool is [http://www.cmake.org CMake], and I assume that you are familiar with the use of CMake. If not, you should review the [[Guidelines and HOWTOs/CMake|CMake Tutorial]] first.

CMake offers quite good support for unit testing, and QTestLib tests can be easily integrated into any CMake build system.

In some configurations, there may be a build system option to turn on (or off) the compilation of tests. At this stage, you have to enable the '''BUILD_TESTING''' option in KDE4 modules; however, this may go away in the near future, as later versions of CMake can build the test applications on demand.

If the tests are still not building, you might want to issue make buildtests in the tests directory.

=== Adding Tests ===

By convention tests are put in the '''autotests''' directory. See [https://invent.kde.org/graphics/okular/-/tree/master/autotests Okular] for an example.

You add a single test to the list of all tests that can be run by using '''ecm_add_test''', which looks like this in its simplest form:

<pre>
include(ECMAddTests)
include_directories(AFTER "${CMAKE_CURRENT_SOURCE_DIR}/..")
ecm_add_test(
    tutorial1.cpp
    LINK_LIBRARIES Qt5::Test
)
</pre>

* The first argument is the filename.
* There are named arguments for other options.

If you have several tests with the same options, you can add them all by using the plural variant '''ecm_add_tests'''.

<pre>
ecm_add_tests(
    tutorial1.cpp tutorial2.cpp
    LINK_LIBRARIES Qt5::Test
)
</pre>

See [https://api.kde.org/ecm/module/ECMAddTests.html ECM Documentation] for details.

Note that these commands do nothing if '''ENABLE_TESTING()''' has not been run.

=== Testing with Akonadi ===

Some tests require an Akonadi server to be up and running, so the test must launch a server process (isolated from any existing environment), load in test data, and shut the server down after the testing finishes. You can use the [https://techbase.kde.org/KDE_PIM/Akonadi/Testing Akonadi Testrunner] to do this; consult that document for information on configuring the test runner and test data.

The KF5AkonadiMacros.cmake file in the Akonadi repository provides an '''add_akonadi_isolated_test''' function to add tests to the build.

<pre>
add_akonadi_isolated_test(
    SOURCE sometest.cpp
    LINK_LIBRARIES Qt5::Test
)
</pre>

=== KDE4 CMake Recipe for QTestLib ===

If you are working in a KDE4 environment, then it is pretty easy to get CMake set up to build and run a test on demand.

<pre>
cmake_minimum_required(VERSION 2.8)
FIND_PACKAGE ( KDE4 REQUIRED )
FIND_PACKAGE ( Qt4 REQUIRED QT_USE_QT* )
INCLUDE( ${QT_USE_FILE} )
include(KDE4Defaults)
set( kwhatevertest_SRCS kwhatevertest.cpp )
kde4_add_unit_test( kwhatevertest
# ...
  ${KDE4_KDECORE_LIBS}
  ${QT_QTTEST_LIBRARY}
  ${KDE4_KDEUI_LIBS}
)
</pre>

Replace "kwhatevertest" with the name of your test application. The target_link_libraries() line will need to contain whatever libraries are needed for the feature you are testing, so if it is a GUI feature, you'll likely need to use "${KDE4_KDEUI_LIBS}".

This is equivalent to running the "ctest" executable with no arguments. If you want finer-grained control over which tests are run or the output format, you can use additional arguments. These are explained in the ctest man page ("man ctest" on a *nix system, or run "ctest --help-full").

To run a single test, use '''./tutorial1.shell''' rather than just '''./tutorial1'''; this makes it use the locally-built version of the shared libraries you're testing, rather than the installed ones.

Some tests are written so that the expected locale is English, and fail if it is not. If your locale is not English, you can use, for instance, '''LANG=C ctest''' or '''LANG=C make''' to force English when running the tests.

=== Further Reading ===

Chapter 8 of the [http://www.kitware.com/products/cmakebook.html CMake Book] provides a detailed description of how to do testing with CMake. Also see Appendix B for more on CTest and the special commands you can use.

Various sections of the CMake Wiki, especially [https://gitlab.kitware.com/cmake/community/wikis/doc/ctest/Testing-With-CTest CTest testing].

==Tutorial 7: Integrating with qmake==

===Static tests===

As noted in the introduction, unit tests are dynamic tests - they exercise the compiled code. Static tests are slightly different - they look for problems in the source code, rather than making sure that the object code runs correctly.

Static test tools tend to identify completely different types of problems to unit tests, and you should seek to use them both.

For more information on using static tests, see [[../Code Checking|the Code Checking tutorial]].

===Coverage tools and CI===

Add this option in the configuration of your project's CI build.

<pre>
# http://quickgit.kde.org/?p=websites%2Fbuild-kde-org.
# git clone kde:websites/build-kde-org
[DEFAULT]
configureExtraArgs=-DBUILD_COVERAGE=ON
</pre>

=== GUI application testing - Squish and KDExecutor ===

[https://www.froglogic.com/squish/ Squish] by [https://www.froglogic.com froglogic] and [http://www.kdab.net/?page=products&sub=kdexecutor KDExecutor for Qt3/KDE3] by [https://www.kdab.net Klarälvdalens Datakonsult (KDAB)] are commercial tools that facilitate GUI testing.

Latest revision as of 15:45, 8 July 2021

- Author: Brad Hards, Sigma Bravo Pty Limited

Abstract

This article provides guidance on writing unittests for software based on Qt and KDE frameworks. It uses the QtTestLib framework provided starting with Qt 4.1. It provides an introduction to the ideas behind unit testing, tutorial material on the QtTestLib framework, and suggestions for getting the most value for your effort.

About Unit Testing

A unit test is a test that checks the functionality, behaviour and correctness of a single software component. In Qt code unit tests are almost always used to test a single C++ class (although testing a macro or C function is also possible).

Unit tests are a key part of Test Driven Development; however, they are useful for all software development processes. It is not essential that all of the code is covered by unit tests (although that is obviously very desirable!). Even a single test is a useful step to improving code quality.

Note that unit tests are dynamic tests (i.e. they run, using the compiled code) rather than static analysis tests (which operate on the source or some intermediate representation).

Even if they don't call them "unit tests", most programmers have written some "throwaway" code that they use to check an implementation. If that code was cleaned up a little, and built into the development system, then it could be used over and over to check that the implementation is still OK. To make that work a little easier, we can use test frameworks.

Note that it is sometimes tempting to treat the unit test as a pure verification tool. While it is true that unit tests do help to ensure correct functionality and behaviour, they also assist with other aspects of code quality. Writing a unit test requires a slightly different approach to coding up a class, and thinking about what inputs need to be tested can help to identify logic flaws in the code (even before the tests get run). In addition, the need to make the code testable is a very useful driver to ensure that classes do not suffer from close coupling.

Anyway, enough of the conceptual stuff - let's talk about a specific tool that can reduce some of the effort and let us get on with the job.

About QtTestLib

QtTestLib is a lightweight testing library developed by the Qt Project and released under the LGPL (a commercial version is also available, for those who need alternative licensing). It is written in C++, and is cross-platform. It is provided as part of the tools included in Qt.

In addition to normal unit testing capabilities, QtTestLib also offers basic GUI testing, based on sending QEvents. This allows you to test GUI widgets, but is not generally suitable for testing full applications.

Each testcase is a standalone test application. Unlike CppUnit or JUnit, there is no Runner type class. Instead, each testcase is an executable which is simply run.

Tutorial 1: A simple test of a date class

In this tutorial, we will build a simple test for a class that represents a date, using QtTestLib as the test framework. To avoid too much detail on how the date class works, we'll just use the QDate class that comes with Qt. In a normal unittest, you would more likely be testing code that you've written yourself.

The code below is the entire testcase.

Example 1. QDate test code

    #include <QTest>
    #include <QDate>

    class testDate: public QObject
    {
        Q_OBJECT
    private slots:
        void testValidity();
        void testMonth();
    };

    void testDate::testValidity()
    {
        // 11 March 1967
        QDate date( 1967, 3, 11 );
        QVERIFY( date.isValid() );
    }

    void testDate::testMonth()
    {
        // 11 March 1967
        QDate date;
        date.setDate( 1967, 3, 11 );
        QCOMPARE( date.month(), 3 );
        QCOMPARE( QDate::longMonthName(date.month()),
                  QString("March") );
    }


    QTEST_MAIN(testDate)
    #include "tutorial1.moc"

Save as autotests/tutorial1.cpp in your project's autotests directory, following the example of Okular.

Stepping through the code, the first line imports the header files for the QtTest namespace. The second line imports the headers for the QDate class. Lines 4 to 10 give us the test class, testDate. Note that testDate inherits from QObject and has the Q_OBJECT macro - QtTestLib requires specific Qt functionality that is present in QObject.

Lines 12 to 17 provide our first test, which checks that a date is valid. Note the use of the QVERIFY macro, which checks that the condition is true. So if date.isValid() returns true, then the test will pass, otherwise the test will fail. QVERIFY is similar to ASSERT in other test suites.

Similarly, lines 19 to 27 provide another test, which checks a setter, and a couple of accessor routines. In this case, we are using QCOMPARE, which checks that the two values are equal. So if date.month() returns 3, then that part of that test will pass, otherwise the test will fail. Note that when a check fails, the test function stops at that point and the remaining checks in it are not executed.

In a later tutorial we will see how to work around problems that this behaviour can cause.

Line 30 uses the QTEST_MAIN macro, which creates an entry point routine for us, with appropriate calls to invoke the testDate unit test class.

Line 31 includes the Meta-Object compiler output, so we can make use of our QObject functionality.
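One consequence of how the check macros behave is worth spelling out: when a QVERIFY or QCOMPARE fails, the test function returns at that point, so any later checks in it are not run. The sketch below is a toy model in plain C++, not Qt's actual implementation; TOY_VERIFY, g_failures, g_reached and testEarlyExit are hypothetical names used only for illustration.

```cpp
#include <iostream>

// Hypothetical counters, for this illustration only.
static int g_failures = 0;  // number of failed checks
static int g_reached = 0;   // bumped only if execution gets past the failed check

// Toy stand-in for a QVERIFY-style macro (not Qt's real definition).
// On failure it reports the condition and the source location, then
// returns from the enclosing function, which must therefore return void.
#define TOY_VERIFY(condition)                                          \
    do {                                                               \
        if (!(condition)) {                                            \
            std::cerr << "FAIL!  : " << #condition << "\n"             \
                      << "   Loc: [" << __FILE__ << "(" << __LINE__    \
                      << ")]\n";                                       \
            ++g_failures;                                              \
            return;                                                    \
        }                                                              \
    } while (false)

void testEarlyExit()
{
    TOY_VERIFY(1 + 1 == 2);  // passes, execution continues
    TOY_VERIFY(1 + 1 == 3);  // fails, the function returns here
    ++g_reached;             // never executed
}
```

Calling testEarlyExit() once leaves g_failures at 1 and g_reached at 0. One way to keep independent checks from masking each other is to place them in separate test functions.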

The qmake project file that corresponds to that code is shown below. You would then use qmake to turn this into a Makefile and then compile it with make.

Example 2. QDate unit test project

    CONFIG += qtestlib
    TEMPLATE = app
    TARGET +=
    DEPENDPATH += .
    INCLUDEPATH += .

    # Input
    SOURCES += tutorial1.cpp

Save as tutorial1.pro

This is a fairly normal project file, except for the addition of the CONFIG += qtestlib. This adds the right header and library setup to the Makefile.

Create an empty file called tutorial1.h and compile by running qmake and then make. The output looks like the following:

Example 3. QDate unit test output

    $ ./tutorial1
    ********* Start testing of testDate *********
    Config: Using QTest library 4.1.0, Qt 4.1.0-snapshot-20051003
    PASS : testDate::initTestCase()
    PASS : testDate::testValidity()
    PASS : testDate::testMonth()
    PASS : testDate::cleanupTestCase()
    Totals: 4 passed, 0 failed, 0 skipped
    ********* Finished testing of testDate *********

Looking at the output above, you can see that the output includes the version of the test library and Qt itself, and then the status of each test that is run. In addition to the testValidity and testMonth tests that we defined, there is also a setup routine (initTestCase) and a teardown routine (cleanupTestCase) that can be used to do additional configuration if required.

Failing tests

If we had made an error in either the production code or the unit test code, then the results would show an error. An example is shown below:

Example 4. QDate unit test output showing failure

    $ ./tutorial1
    ********* Start testing of testDate *********
    Config: Using QTest library 4.1.0, Qt 4.1.0-snapshot-20051003
    PASS : testDate::initTestCase()
    PASS : testDate::testValidity()
    FAIL! : testDate::testMonth() Compared values are not the same
       Actual (date.month()): 4
       Expected (3): 3
       Loc: [tutorial1.cpp(25)]
    PASS : testDate::cleanupTestCase()
    Totals: 3 passed, 1 failed, 0 skipped
    ********* Finished testing of testDate *********

Running selected tests

When the number of test functions increases, and some of the functions take a long time to run, it can be useful to only run a selected function. For example, if you only want to run the testValidity function, then you just specify that on the command line, as shown below:

Example 5. QDate unit test output - selected function

    $ ./tutorial1 testValidity
    ********* Start testing of testDate *********
    Config: Using QTest library 4.1.0, Qt 4.1.0-snapshot-20051003
    PASS : testDate::initTestCase()
    PASS : testDate::testValidity()
    PASS : testDate::cleanupTestCase()
    Totals: 3 passed, 0 failed, 0 skipped
    ********* Finished testing of testDate *********

Note that the initTestCase and cleanupTestCase routines are always run, so that any necessary setup and cleanup will still be done.

You can get a list of the available functions by passing the -functions option, as shown below:

Example 6. QDate unit test output - listing functions

    $ ./tutorial1 -functions
    testValidity()
    testMonth()

Verbose output options

You can get more verbose output by using the -v1, -v2 and -vs options. -v1 produces a message on entering each test function. I found this useful when it looks like a test is hanging. This is shown below:

Example 7. QDate unit test output - verbose output

    $ ./tutorial1 -v1
    ********* Start testing of testDate *********
    Config: Using QTest library 4.1.0, Qt 4.1.0-snapshot-20051003
    INFO : testDate::initTestCase() entering
    PASS : testDate::initTestCase()
    INFO : testDate::testValidity() entering
    PASS : testDate::testValidity()
    INFO : testDate::testMonth() entering
    PASS : testDate::testMonth()
    INFO : testDate::cleanupTestCase() entering
    PASS : testDate::cleanupTestCase()
    Totals: 4 passed, 0 failed, 0 skipped
    ********* Finished testing of testDate *********

The -v2 option shows each QVERIFY, QCOMPARE and QTEST, as well as the message on entering each test function. I found this useful for verifying that a particular step is being run. This is shown below:

Example 8. QDate unit test output - more verbose output

    $ ./tutorial1 -v2
    ********* Start testing of testDate *********
    Config: Using QTest library 4.1.0, Qt 4.1.0-snapshot-20051003
    INFO : testDate::initTestCase() entering
    PASS : testDate::initTestCase()
    INFO : testDate::testValidity() entering
    INFO : testDate::testValidity() QVERIFY(date.isValid())
       Loc: [tutorial1.cpp(17)]
    PASS : testDate::testValidity()
    INFO : testDate::testMonth() entering
    INFO : testDate::testMonth() COMPARE()
       Loc: [tutorial1.cpp(25)]
    INFO : testDate::testMonth() COMPARE()
       Loc: [tutorial1.cpp(27)]
    PASS : testDate::testMonth()
    INFO : testDate::cleanupTestCase() entering
    PASS : testDate::cleanupTestCase()
    Totals: 4 passed, 0 failed, 0 skipped
    ********* Finished testing of testDate *********

The -vs option shows each signal that is emitted. In our example, there are no signals, so -vs has no effect. Getting a list of signals is useful for debugging failing tests, especially GUI tests which we will see in the third tutorial.

Output to a file

If you want to output the results of your testing to a file, you can use the -o filename option, where you replace filename with the name of the file you want to save output to.

Tutorial 2: Data driven testing of a date class

In the previous example, we looked at how we can test a date class. If we decided that we really needed to test a lot more dates, then we'd be cutting and pasting a lot of code. If we subsequently changed the name of a function, then it has to be changed in a lot of places. As an alternative to introducing these types of maintenance problems into our tests, QtTestLib offers support for data driven testing.

The easiest way to understand data driven testing is by an example, as shown below:

Example 9. QDate test code, data driven version

    #include <QtTest>
    #include <QtCore>

    class testDate: public QObject
    {
        Q_OBJECT
    private slots:
        void testValidity();
        void testMonth_data();
        void testMonth();
    };

    void testDate::testValidity()
    {
        // 12 March 1967
        QDate date( 1967, 3, 12 );
        QVERIFY( date.isValid() );
    }

    void testDate::testMonth_data()
    {
        QTest::addColumn<int>("year"); // the year we are testing
        QTest::addColumn<int>("month"); // the month we are testing
        QTest::addColumn<int>("day"); // the day we are testing
        QTest::addColumn<QString>("monthName"); // the name of the month

        QTest::newRow("1967/3/11") << 1967 << 3 << 11 << QString("March");
        QTest::newRow("1966/1/10") << 1966 << 1 << 10 << QString("January");
        QTest::newRow("1999/9/19") << 1999 << 9 << 19 << QString("September");
        // more rows of dates can go in here...
    }

    void testDate::testMonth()
    {
        QFETCH(int, year);
        QFETCH(int, month);
        QFETCH(int, day);
        QFETCH(QString, monthName);

        QDate date;
        date.setDate( year, month, day);
        QCOMPARE( date.month(), month );
        QCOMPARE( QDate::longMonthName(date.month()), monthName );
    }

    QTEST_MAIN(testDate)
    #include "tutorial2.moc"

As you can see, we've introduced a new method - testMonth_data, and moved the specific test date out of testMonth. We've had to add some more code (which will be explained soon), but the result is a separation of the data we are testing, and the code we are using to test it. The names of the functions are important - you must use the _data suffix for the data setup routine, and the first part of the data setup routine must match the name of the driver routine.

It is useful to visualise the data as being a table, where the columns are the various data values required for a single run through the driver, and the rows are different runs. In our example, there are four columns (three integers, one for each part of the date, and one QString), added with the addColumn calls at the start of testMonth_data. The addColumn template requires the type of variable to be added, and also requires a variable name argument. We then add as many rows as required using the newRow function, as shown in the three newRow calls that follow. The string argument to newRow is a label, which is handy for determining what is going on with failing tests, but doesn't have any effect on the test itself.

To use the data, we simply use QFETCH to obtain the appropriate data from each row. The arguments to QFETCH are the type of the variable to fetch, and the name of the column (which is also the local name of the variable it gets fetched into). You can then use this data in a QCOMPARE or QVERIFY check. The code is run for each row, which you can see below:

Example 10. Results of data driven testing, showing QFETCH

$ ./tutorial2 -v2
********* Start testing of testDate *********
Config: Using QTest library 4.1.0, Qt 4.1.0-snapshot-20051020
INFO : testDate::initTestCase() entering
PASS : testDate::initTestCase()
INFO : testDate::testValidity() entering
INFO : testDate::testValidity() QVERIFY(date.isValid())
   Loc: [tutorial2.cpp(19)]
PASS : testDate::testValidity()
INFO : testDate::testMonth() entering
INFO : testDate::testMonth(1967/3/11) COMPARE()
   Loc: [tutorial2.cpp(44)]
INFO : testDate::testMonth(1967/3/11) COMPARE()
   Loc: [tutorial2.cpp(45)]
INFO : testDate::testMonth(1966/1/10) COMPARE()
   Loc: [tutorial2.cpp(44)]
INFO : testDate::testMonth(1966/1/10) COMPARE()
   Loc: [tutorial2.cpp(45)]
INFO : testDate::testMonth(1999/9/19) COMPARE()
   Loc: [tutorial2.cpp(44)]
INFO : testDate::testMonth(1999/9/19) COMPARE()
   Loc: [tutorial2.cpp(45)]
PASS : testDate::testMonth()
INFO : testDate::cleanupTestCase() entering
PASS : testDate::cleanupTestCase()
Totals: 4 passed, 0 failed, 0 skipped
********* Finished testing of testDate *********

The QTEST macro

As an alternative to using QFETCH and QCOMPARE, you may be able to use the QTEST macro instead. QTEST takes two arguments, and if one is a string, it looks up that string as an argument in the current row. You can see how this can be used below, which is equivalent to the testMonth() code in the previous example.

Example 11. QDate test code, data driven version using QTEST

void testDate::testMonth()

{

QFETCH(int, year);

QFETCH(int, month);

QFETCH(int, day);

QDate date;

date.setDate( year, month, day);

QCOMPARE( date.month(), month );

QTEST( QDate::longMonthName(date.month()), "monthName" );

}

In the example above, note that monthName is enclosed in quotes, and we no longer have a QFETCH call for monthName.

The other QCOMPARE could also have been converted to use QTEST; however, this would be less efficient, because we already need QFETCH to get month for the setDate call in the line above.

Running selected tests with selected data

In the previous tutorial, we saw how to run a specific test by specifying the name of the test as a command line argument. In data driven testing, you can select which data you want the test run with, by adding a colon and the label for the data row. For example, if we just want to run the testMonth test for the first row, we would use ./tutorial2 -v2 testMonth:1967/3/11. The result of this is shown below.

Example 12. QDate unit test output - selected function and data

$ ./tutorial2 -v2 testMonth:1967/3/11
********* Start testing of testDate *********
Config: Using QTest library 4.1.0, Qt 4.1.0-snapshot-20051020
INFO : testDate::initTestCase() entering
PASS : testDate::initTestCase()
INFO : testDate::testMonth() entering
INFO : testDate::testMonth(1967/3/11) COMPARE()
   Loc: [tutorial2.cpp(44)]
INFO : testDate::testMonth(1967/3/11) COMPARE()
   Loc: [tutorial2.cpp(45)]
PASS : testDate::testMonth()
INFO : testDate::cleanupTestCase() entering
PASS : testDate::cleanupTestCase()
Totals: 3 passed, 0 failed, 0 skipped
********* Finished testing of testDate *********
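The function:label form of the argument can be pictured as a simple split at the colon. The following plain C++ sketch models that rule; it is an assumption-laden illustration rather than QtTestLib's actual parsing code, and TestSelector, parseSelector and shouldRun are hypothetical names made up for this example.

```cpp
#include <cassert>
#include <string>

// The two parts of a command line selector such as "testMonth:1967/3/11".
struct TestSelector {
    std::string function;  // e.g. "testMonth"
    std::string rowLabel;  // e.g. "1967/3/11"; empty means "every row"
};

// Split the argument at the first colon. With no colon, the whole
// argument is the function name and all rows are selected.
TestSelector parseSelector(const std::string& arg) {
    assert(!arg.empty());
    const std::string::size_type colon = arg.find(':');
    if (colon == std::string::npos) {
        return {arg, ""};
    }
    return {arg.substr(0, colon), arg.substr(colon + 1)};
}

// Decide whether a given function/row combination should be run.
bool shouldRun(const TestSelector& selector,
               const std::string& function,
               const std::string& rowLabel) {
    if (selector.function != function) {
        return false;
    }
    return selector.rowLabel.empty() || selector.rowLabel == rowLabel;
}
```

With the selector from the example above, only the testMonth run labelled 1967/3/11 matches, which corresponds to the single testMonth row in the output, while a bare testMonth selects all three rows.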

Tutorial 3: Testing Graphical User Interfaces

In the previous two tutorials, we've tested a date management class. This is a pretty typical use of unit testing. However, Qt and KDE applications will make use of graphical classes that take user input (typically from a keyboard and mouse). QtTestLib offers support for testing these classes, which we'll see in this tutorial.

Again, we'll use an existing class as our test environment, and again it will be date related - the standard Qt QDateEdit class. For those not familiar with this class, it is a simple date entry widget (although with some powerful back end capabilities). A picture of the widget is shown below.

The way QtTestLib provides GUI testing is by injecting QInputEvent events. To the application, these input events appear the same as normal key press/release and mouse clicks/drags. However the mouse and keyboard are unaffected, so that you can continue to use the machine normally while tests are being run.

An example of how you can use the GUI functionality of QtTestLib is shown below.

Example 13. QDateEdit test code

#include <QtTest>

#include <QtCore>

#include <QtGui>

Q_DECLARE_METATYPE(QDate)

class testDateEdit: public QObject

{

Q_OBJECT

private slots:

void testChanges();

void testValidator_data();

void testValidator();

};

void testDateEdit::testChanges()

{

// 11 March 1967

QDate date( 1967, 3, 11 );

QDateEdit dateEdit( date );

// up-arrow should increase day by one

QTest::keyClick( &dateEdit, Qt::Key_Up );

QCOMPARE( dateEdit.date(), date.addDays(1) );

// we click twice on the "reduce" arrow at the bottom RH corner

// first we need the widget size to know where to click

QSize editWidgetSize = dateEdit.size();

QPoint clickPoint(editWidgetSize.rwidth()-2, editWidgetSize.rheight()-2);

// issue two clicks

QTest::mouseClick( &dateEdit, Qt::LeftButton, 0, clickPoint);

QTest::mouseClick( &dateEdit, Qt::LeftButton, 0, clickPoint);

// and we should have decreased day by two (one less than original)

QCOMPARE( dateEdit.date(), date.addDays(-1) );

QTest::keyClicks( &dateEdit, "25122005" );

QCOMPARE( dateEdit.date(), QDate( 2005, 12, 25 ) );

QTest::keyClick( &dateEdit, Qt::Key_Tab, Qt::ShiftModifier );

QTest::keyClicks( &dateEdit, "08" );

QCOMPARE( dateEdit.date(), QDate( 2005, 8, 25 ) );

}

void testDateEdit::testValidator_data()

{

qRegisterMetaType<QDate>("QDate");

QTest::addColumn<QDate>( "initialDate" );

QTest::addColumn<QString>( "keyclicks" );

QTest::addColumn<QDate>( "finalDate" );

QTest::newRow( "1968/4/12" ) << QDate( 1967, 3, 11 )

<< QString( "12041968" )

<< QDate( 1968, 4, 12 );

QTest::newRow( "1967/3/14" ) << QDate( 1967, 3, 11 )

<< QString( "140abcdef[" )

<< QDate( 1967, 3, 14 );

// more rows can go in here

}

void testDateEdit::testValidator()

{

QFETCH( QDate, initialDate );

QFETCH( QString, keyclicks );

QFETCH( QDate, finalDate );

QDateEdit dateEdit( initialDate );

// this next line is just to start editing

QTest::keyClick( &dateEdit, Qt::Key_Enter );

QTest::keyClicks( &dateEdit, keyclicks );

QCOMPARE( dateEdit.date(), finalDate );

}

QTEST_MAIN(testDateEdit)

#include "tutorial3.moc"

Much of this code is common with previous examples, so I'll focus on the new elements and the more important changes as we work through the code line-by-line.

Lines 1 to 3 import the various Qt declarations, as before.

Line 4 is a macro that is required for the data-driven part of this test, which I'll come to soon.

Lines 6 to 13 declare the test class - while the names have changed, it is pretty similar to the previous example. Note the testValidator and testValidator_data functions - we will be using data driven testing again in this example.

Our first real test starts in line 15. Line 18 creates a QDate, and line 19 uses that date as the initial value for a QDateEdit widget.

Lines 22 and 23 show how we can test what happens when we press the up-arrow key. The QTest::keyClick function takes a pointer to a widget, and a symbolic key name (a char or a Qt::Key). At line 23, we check that the effect of that event was to increment the date by a day. The QTest::keyClick function also takes an optional keyboard modifier (such as Qt::ShiftModifier for the shift key) and an optional delay value (in milliseconds). As an alternative to using QTest::keyClick, you can use QTest::keyPress and QTest::keyRelease to construct more complex keyboard sequences.

Lines 27 to 33 show a similar test to the previous one, but in this case we are simulating a mouse click. We need to click in the lower right hand part of the widget (to hit the decrement arrow - see Figure 1), and that requires knowing how large the widget is. So lines 27 and 28 calculate the correct point based on the size of the widget. Line 30 (and the identical line 31) simulates clicking with the left-hand mouse button at the calculated point. The arguments to QTest::mouseClick are:

- a pointer to the widget that the click event should be sent to.

- the mouse button that is being clicked.

- an optional keyboard modifier (such as Qt::ShiftModifier), or 0 for no modifiers.

- an optional click point - this defaults to the middle of the widget if not specified.

- an optional mouse delay.
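The click point arithmetic used for the decrement arrow can be checked in isolation. The sketch below uses plain structs as stand-ins for QSize and QPoint so that it does not depend on Qt; Size, Point, lowerRightClickPoint and centrePoint are hypothetical names for this illustration, and the 2 pixel inset is simply the value used in the example code.

```cpp
#include <cassert>

// Minimal stand-ins for QSize and QPoint (assumptions for this sketch).
struct Size {
    int width;
    int height;
};

struct Point {
    int x;
    int y;
};

// A point just inside the lower right hand corner of a widget,
// mirroring the "width - 2, height - 2" calculation in the test.
Point lowerRightClickPoint(Size widget, int inset = 2) {
    assert(inset >= 0 && inset <= widget.width && inset <= widget.height);
    return {widget.width - inset, widget.height - inset};
}

// The middle of the widget, which is where a click lands when no
// click point is given.
Point centrePoint(Size widget) {
    return {widget.width / 2, widget.height / 2};
}
```

Deriving the point from the widget size, rather than hard-coding coordinates, keeps the test pointing at the same corner when a style or font change alters the widget's dimensions. Whether that corner actually contains the decrement arrow still depends on the widget style in use.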

In addition to QTest::mouseClick, there is also QTest::mousePress, QTest::mouseRelease, QTest::mouseDClick (providing double-click) and QTest::mouseMove. The first three are used in the same way as QTest::mouseClick. The last takes a point to move the mouse to. You can use these functions in combination to simulate dragging with the mouse.

Lines 35 and 36 show another approach to keyboard entry, using the QTest::keyClicks function. Where QTest::keyClick sends a single key press, QTest::keyClicks takes a QString (or something equivalent; in line 35, a character array) that represents a sequence of key clicks to send. The other arguments are the same.

Lines 38 to 40 show how you may need to use a combination of functions. After we've entered a new date in line 35, the cursor is at the end of the widget. At line 38, we use a Shift-Tab combination to move the cursor back to the month value. Then at line 39 we enter a new month value. Of course we could have used individual calls to QTest::keyClick, however that wouldn't have been as clear, and would also have required more code.

Data-driven GUI testing

Lines 61 to 72 show a data-driven test - in this case we are checking that the validator on QDateEdit is performing as expected. This is a case where data-driven testing can really help to ensure that things are working the way they should.

At lines 63 to 65, we fetch in an initial value, a series of key-clicks, and an expected result. These are the columns that are set up in lines 47 to 49. Note that we are now pulling in a QDate, where in previous examples we used three integers and then built the QDate from those. However, QDate isn't a registered type for QMetaType, and so we need to register it before we can use it in our data-driven testing. This is done using the Q_DECLARE_METATYPE macro in line 4 and the qRegisterMetaType function in line 45.

Lines 51 to 57 add in a couple of sample rows. Lines 51 to 53 represent a case where the input is valid, and lines 55 to 57 are a case where the input is only partly valid (the day part). A real test will obviously contain far more combinations than this.

Those test rows are actually tested in lines 67 to 71. We construct the QDateEdit widget in line 67, using the initial value. We then send an Enter key click in line 69, which is required to get the widget into edit mode. At line 70 we simulate the data entry, and at line 71 we check whether the results are what was expected.

Lines 74 and 75 are the same as we've seen in previous examples.

Re-using test elements

If you are re-using a set of events a number of times, then it may be an advantage to build a list of events, and then just replay them. This can improve maintainability and clarity of a set of tests, especially for mouse movements.