KDE PIM/Akonadi Next: Difference between revisions

Revision by Cmollekopf (edit summary: Outdated). 31 intermediate revisions by 3 users not shown.

Warning: This page is outdated. What has been tentatively called Akonadi Next is now 'Sink' and part of Kube (https://kube-project.com/). Visit https://kube-sink.readthedocs.io/en/latest/ for the latest Sink documentation.




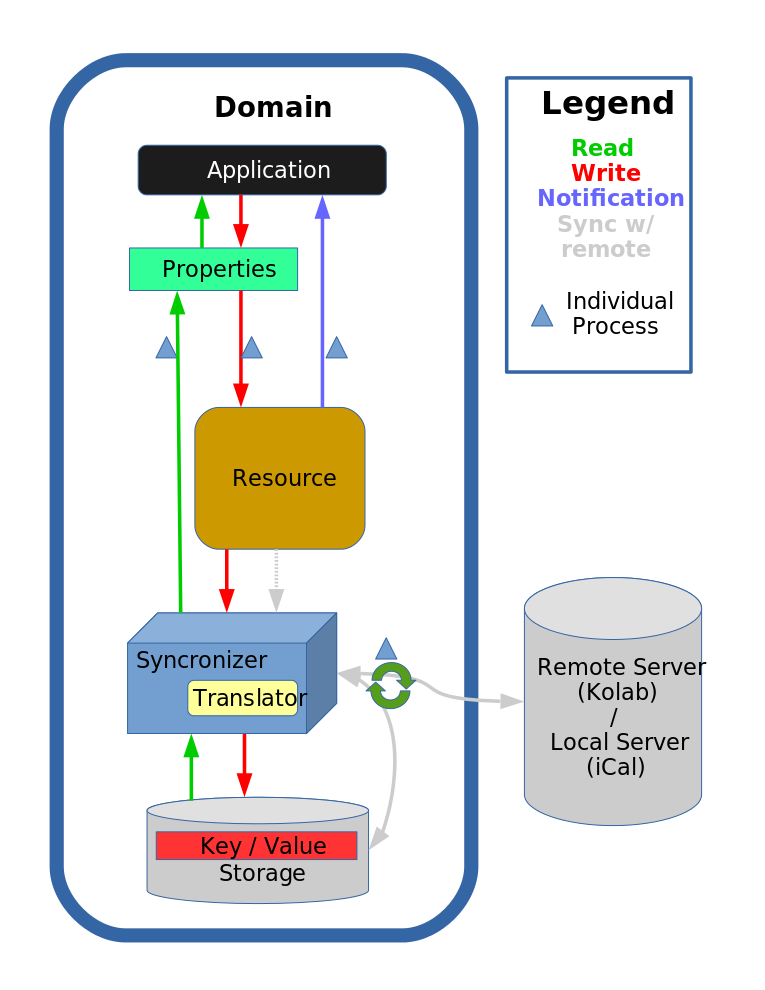

== Diagrams == | |||

Akonadi Next Workflow Draft | |||

[[Image:Akonadi_Next.svg|center|alt=Akonadi Next Workflow Diagram - Draft|Akonadi Next Workflow]] | |||

== Async Library == | |||

Motivation: | |||

Simplify writing of asynchronous code. | |||

=== Goals === | |||

* Provide a framework to build reusable asynchronous code fragments that are non-blocking. | |||

* The library should be easy to integrate in any existing codebase. | |||

* Abstract the type of asynchrony used: | |||

** Asynchronous operation could be executed in a thread | |||

** Asynchronous operation could be executed using continuations driven by the event loop | |||

** ... | |||

=== Existing Solutions === | |||

Existing solutions should be reused where possible. | |||

stdlib: | |||

* std::future: provides a future result | |||

* std::packaged_task: wraps callable (i.e. a lambda) for later execution, and returns a future for the result. | |||

* std::async: runs a callable asynchronously | |||

* std::thread: interface to create threads. Supports directly running a function in a thread. | |||

The problem with this library is that std::future only supports blocking waiting on the result and everything is built around that. | |||

Qt: | |||

* QtConcurrent: provides some functionality to run callables in threads and supports composition of such callables. | |||

** Supports only threads. | |||

KDE: | |||

* KJob: Can be subclassed to create a task for later execution. | |||

** No composition, no threading. | |||

* Threadweaver: | |||

** Supports only threads. | |||

=== Resources === | |||

* Proposal for std::future with continuations: http://www.open-std.org/jtc1/sc22/wg21/docs/papers/2013/n3721.pdf | |||

Latest revision as of 15:26, 23 October 2018

This is an experimental attempt at building a successor to Akonadi 1. The source code repository is currently on git.kde.org under scratch/aseigo/akonadinext

Current Discussion Points

- storage read API

- data scheme

Terminology

Consistent, agreed-upon terminology is key to working together efficiently on a complex software project such as this, particularly as we are building on the earlier Akonadi efforts, which have their own established terminology. You can find the current glossary on the Terminology page.

We recommend familiarizing yourself with the terms before going further into the design of Akonadi Next. It will make the design clearer and make it easier to ask questions in a way that others will understand immediately.

Design

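- Design Goals

- Overview

- Client API

- Resource

- Store

- Logging

- Current Akonadi

- Legacy Akonadi Compatibility Layer

Todo: the design section should eventually be split up into the other sections.

- Design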

Tradeoffs/Design Decisions

- Key-Value store instead of relational

- + Schemaless, easier to evolve

- - No need to fully normalize the data in order to make it queryable. And without full normalization SQL is not really useful and performs badly.

- - We need to maintain our own indexes

- Individual store per resource

- Storage format defined by resource individually

- - Each resource needs to define its own schema

- + Resources can adjust the storage format to map well onto what they have to synchronize

- + Synchronization state can directly be embedded into messages

- + Individual resources could switch to another store technology

- + Easier maintenance

- + A resource is only responsible for its own store and cannot accidentally break another resource's store

- - Inter-resource moves are both more complicated and more expensive from a client perspective

- + Inter-resource moves become simple additions and removals from a resource perspective

- - No system-wide unique id per message (only resource/id tuple identifies a message uniquely)

- + Stores can work fully concurrently (also for writing)

- Storage format defined by resource individually

- Indexes defined and maintained by resources

- - Relational queries across resources are expensive (depending on the query perhaps not even feasible)

- - Each resource needs to define its own set of indexes

- + Flexible design as it allows changing indexes on a per-resource level

- + Indexes can be optimized towards the resource's main use cases

- + Indexes can be shared with the source (IMAP serverside threading)

- Shared domain types as common interface for client applications

- - yet another abstraction layer that requires translation to other layers and maintenance

- + decoupling of domain logic from data access

- + allows types to evolve according to needs (not coupled to a specific application's domain types)
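The "resource/id tuple" tradeoff in the list above can be illustrated with a small sketch. The names `EntityRef`, `EntityRefHash` and `EntityRefSet` are hypothetical and not part of Akonadi Next. The only assumption is that a resource-local id is not unique on its own, so a client has to carry both parts to identify a message.

```cpp
#include <cstddef>
#include <functional>
#include <string>
#include <unordered_set>

// Hypothetical identifier: neither field is unique alone, the pair is.
struct EntityRef {
    std::string resource; // e.g. the instance name of an IMAP resource
    std::string localId;  // id that is only unique within that resource

    bool operator==(const EntityRef &other) const {
        return resource == other.resource && localId == other.localId;
    }
};

// Hash over both fields so EntityRef can key unordered containers.
struct EntityRefHash {
    std::size_t operator()(const EntityRef &ref) const {
        const std::size_t h1 = std::hash<std::string>{}(ref.resource);
        const std::size_t h2 = std::hash<std::string>{}(ref.localId);
        return h1 ^ (h2 + 0x9e3779b9 + (h1 << 6) + (h1 >> 2));
    }
};

using EntityRefSet = std::unordered_set<EntityRef, EntityRefHash>;
```

With such a type, the same local id coming from two different resources is treated as two distinct entities, which is the behaviour the list item describes.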

Risks

- key-value store does not perform with large amounts of data

- query performance is not sufficient

- turnaround time for modifications is too high to feel responsive

- design turns out to be as complex as Akonadi 1

Comments/Thoughts/Questions

Comments/Thoughts:

- Copying of domain objects may defeat mmapped buffers if individual properties get copied (all data is read from disk). We have to make sure only the pointer gets copied.
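A minimal sketch of "only the pointer gets copied", assuming a domain object that holds nothing but a shared pointer to its buffer. `MappedBuffer` and `Mail` are hypothetical stand-ins, and the buffer is an ordinary string here rather than a real mmap()ed region.

```cpp
#include <cstddef>
#include <memory>
#include <string>
#include <utility>

// Stand-in for a memory-mapped region; a real implementation would wrap
// mmap()/munmap(). This type is an assumption made for the sketch.
struct MappedBuffer {
    std::string bytes;
};

// Hypothetical domain object: it owns no property data, only a shared
// pointer to the buffer, so copying it never touches the payload.
class Mail {
public:
    explicit Mail(std::shared_ptr<const MappedBuffer> buffer)
        : m_buffer(std::move(buffer)) {}

    // Properties are read from the buffer on access instead of being
    // copied into members when the object is created or copied.
    std::string subject() const {
        return m_buffer->bytes.substr(0, m_buffer->bytes.find('\n'));
    }

    // Exposed only so the sharing can be observed.
    const MappedBuffer *buffer() const { return m_buffer.get(); }
    long useCount() const { return m_buffer.use_count(); }

private:
    std::shared_ptr<const MappedBuffer> m_buffer;
};
```

Copying a `Mail` only increments a reference count. The buffer contents are read when `subject()` is called, so pages that are never accessed never need to be loaded.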

Open Questions:

- Resource-to-resource moves (e.g. expiring mail from an imap folder to a local folder)

- Should the "source" resource become a client to the other resource, and drive the process? This would allow greatest atomicity. It does imply being able to issue cross-resource move commands.

- If we use the mmapped buffers to avoid having a property level query API, lazy loading of properties becomes difficult. Currently we specify how much of each item we need using the parts, allowing the lazy loading to fetch missing parts. If we no longer specify what we want we cannot lazy load that part and at the point where the buffer is accessed it's already too late.

- How well can we free up memory with mmapped buffers?

- 1000 objects are loaded with minimal access (subject)

- A bunch of attachments are opened => we stream each file to tmp disk and open it.

- Can we unload the no-longer required attachments?

- => I suppose we'd have to munmap the buffer and mmap it again, replacing the pointer.

- This may mean we require a shared wrapper for the pointer so we can replace the pointer everywhere it's used.

- Data streaming: It seems that with the current message model it is not possible for the resource to stream large payloads directly to disk.

- As a possible solution a resource could decide to store large payloads externally, stream directly to disk, and then pass a message containing the file location to the system.

- How do we deal with mass insertions? => n inserted entities should not result in n update notifications with n updates.

- I suppose simple event compression should largely solve this issue.
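The event compression idea from the last question can be sketched as follows. `ChangeNotification` and `NotificationCompressor` are hypothetical names, and the sketch leaves open when a flush is triggered (for example from an idle event loop or a timer).

```cpp
#include <cstddef>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical batch notification: one message describing many changes.
struct ChangeNotification {
    std::vector<std::string> insertedIds;
};

// Collects inserts and emits a single notification per flush, so that
// n inserted entities do not cause n separate update notifications.
class NotificationCompressor {
public:
    using Listener = std::function<void(const ChangeNotification &)>;

    explicit NotificationCompressor(Listener listener)
        : m_listener(std::move(listener)) {}

    // Record a change without notifying anyone yet.
    void entityInserted(const std::string &id) {
        m_pending.insertedIds.push_back(id);
    }

    // Called once per batch; the trigger is left open in this sketch.
    void flush() {
        if (m_pending.insertedIds.empty()) {
            return; // nothing to report
        }
        ChangeNotification batch;
        std::swap(batch, m_pending);
        m_listener(batch);
    }

private:
    Listener m_listener;
    ChangeNotification m_pending;
};
```

Inserting a thousand entities and then calling `flush()` once delivers a single notification that lists all of them. A second `flush()` with nothing pending delivers nothing.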

Potential Features:

- Undo framework: Application sessions keep data around for long enough that we can rollback to earlier states of the store.

- Note that this would need to work across stores.

- iTip handling in the form of a filter? E.g. a Kolab resource could install a filter that automatically dispatches iTip invitations based on the events stored.

Thoughts from Meeting (till, dvratil, ...) that need to be processed:

- Notification hints (what changed), to avoid potentially expensive updates for minor changes. (Only hints though, not an authoritative changeset; can be optimized for use cases.)

- Resorting in kmail is a common operation

- New S/MIME implementation that doesn't support all of PGP would probably suffice

- DVD by email case should be considered

- Hierarchical configuration. Fallback to systemconfig.

- Reupload messages we failed to upload should be possible

- IMAP Idle => we cannot shutdown the resources

- Migration is important (especially pop3 archives, as they cannot redownload)

- Optional Akonadi would be nice (If you have a 100MB quota you may not have 50MB for akonadi).

- For pipelines: e.g. for mail filters we must guarantee that a message is never processed by the same filter twice.

- Do we need e.g. a shared Node/Collection base type for hierarchies, so we can e.g. query the Kolab folder tree containing calendars/notebooks/mail folders?

Christian's loose ends

Words that I'm using here and that we need to find a name for to add to the vocabulary.

- Domain Types = Types as defined by Akonadi2::Domain interface

- Resource Types = Types as defined by the resource

- Domain interface is possibly a shitty name for the interface with the domain types?

- not a 1:1 mapping between domain/resource types (tags stored as part of mails), needs to be taken into account in queries and various other places. Inside the resource (except facade) we always deal with resource types.

- Since a single modification can lead to multiple changes in storage, we need to wrap those into a transaction

- We're still doing a lot of copying when embedding one flatbuffer in another. I think this could be avoided by using the same builder.

- We should either use QByteArray or std::string for "raw" data. std::string is more standard, but QByteArray is what is traditionally used in qt code and has implicit sharing (which we may, or may not use).

- I would welcome a policy to only use QString if we actually use encoded strings. E.g. property names etc. could all become QByteArrays (or std::strings for that matter)

- we need a crashhandler for the synchronizer, ideally allowing to directly attach gdb (otherwise you have to jump through hoops)

- We need a logging framework (see also wiki for some requirements)

- The job API needs some work, I delegate some to dvratil (who wrote that code)

- the 4k page size is extremely expensive for indexes (where we have many but really small values)

- we need a streaming API for large values. We need to stream from the client to/from disk and from the synchronizer to/from disk (1GB attachment)

- The Akonadi internal uid and revision should probably never be exposed to clients (unlike the Akonadi item id in current Akonadi). For many use cases the domain object's UID is what is interesting (which e.g. corresponds to the iCal event UID), and when we actually need to address a specific record we should probably rather work with handles. This would keep people from doing stupid things like storing those non-stable identifiers (they will be different if we drop the cache and resync) in config files etc. If a solution is required for storable identifiers that reference a specific record rather than the domain object, we could provide a special "stable identifier" that we maintain in a separate database, and that we can invalidate once the record it points to no longer exists.

- The Akonadi2::Console should probably die and be replaced by the logging framework. It would then of course be cool to be able to start a "console" tool to monitor what the individual processes are doing in real time.

- LMDB databases never shrink. Queues should therefore be deleted from disk once empty. For indexes and main storage, always growing should be OK for a start.

- Storage::write should have a version that takes an error handler, as usual
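As a rough illustration of the last point, a write overload that takes an error handler might look like the sketch below. The class here is a hypothetical in-memory stand-in that merely reuses the name `Storage`. The real `Storage::write` signature is not given on this page, so the parameters, the `StorageError` type and the error code are all assumptions.

```cpp
#include <functional>
#include <map>
#include <string>

// Hypothetical error value passed to handlers; not the real Akonadi2 type.
struct StorageError {
    int code;
    std::string message;
};

// In-memory stand-in used only to illustrate the overload; the real
// Storage class is backed by LMDB and its signatures may differ.
class Storage {
public:
    using ErrorHandler = std::function<void(const StorageError &)>;

    // Overload taking an error handler that is invoked on failure.
    bool write(const std::string &key, const std::string &value,
               const ErrorHandler &onError) {
        if (key.empty()) {
            onError(StorageError{1, "empty key"}); // code 1 is arbitrary
            return false;
        }
        m_data[key] = value;
        return true;
    }

    // Convenience overload that falls back to a default handler.
    bool write(const std::string &key, const std::string &value) {
        return write(key, value,
                     [this](const StorageError &) { ++m_unhandledErrors; });
    }

    int unhandledErrors() const { return m_unhandledErrors; }
    bool contains(const std::string &key) const { return m_data.count(key) != 0; }

private:
    std::map<std::string, std::string> m_data;
    int m_unhandledErrors = 0;
};
```

The two-argument overload delegates to the three-argument one, so a caller that cares about a specific failure can pass its own handler while existing call sites keep working unchanged.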

Useful Resources

- Socket activated processes (for the resource shell): http://0pointer.de/blog/projects/socket-activation.html

- PIM Sprint presentation: http://bddf.ca/~aseigo/akonadi_2015.odp

Diagrams

Akonadi Next Workflow Draft

[Image: Akonadi_Next.svg (Akonadi Next Workflow Diagram - Draft)]

Async Library

Motivation: Simplify writing of asynchronous code.

Goals

- Provide a framework to build reusable asynchronous code fragments that are non-blocking.

- The library should be easy to integrate in any existing codebase.

- Abstract the type of asynchrony used:

- Asynchronous operation could be executed in a thread

- Asynchronous operation could be executed using continuations driven by the event loop

- ...
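To make the goals above more concrete, here is a minimal sketch of continuation-based composition driven by an event loop. It covers only the event-loop variant, not threads. `EventLoop`, `asyncSquare` and `asyncFourthPower` are hypothetical names chosen for illustration and are not the API of the library described here.

```cpp
#include <functional>
#include <queue>
#include <utility>

// Minimal stand-in for an event loop: runs queued callbacks in order.
class EventLoop {
public:
    void post(std::function<void()> task) { m_tasks.push(std::move(task)); }

    // Runs until no tasks are left, including tasks posted while running.
    void run() {
        while (!m_tasks.empty()) {
            std::function<void()> task = std::move(m_tasks.front());
            m_tasks.pop();
            task();
        }
    }

private:
    std::queue<std::function<void()>> m_tasks;
};

// Hypothetical reusable fragment: computes a value later and hands it to
// a continuation instead of making the caller block on a result.
inline void asyncSquare(EventLoop &loop, int input,
                        std::function<void(int)> continuation) {
    loop.post([input, continuation]() { continuation(input * input); });
}

// Composition: the second step is started from the first continuation.
// The loop must outlive all pending tasks because it is captured by reference.
inline void asyncFourthPower(EventLoop &loop, int input,
                             std::function<void(int)> continuation) {
    asyncSquare(loop, input, [&loop, continuation](int squared) {
        asyncSquare(loop, squared, continuation);
    });
}
```

Calling `asyncFourthPower(loop, 3, callback)` returns immediately. The callback is invoked only once `loop.run()` has processed both queued steps, so the caller never blocks while waiting for a result.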

Existing Solutions

Existing solutions should be reused where possible.

stdlib:

- std::future: provides a future result

- std::packaged_task: wraps a callable (e.g. a lambda) for later execution, and returns a future for the result.

- std::async: runs a callable asynchronously

- std::thread: interface to create threads. Supports directly running a function in a thread.

The problem with these standard library facilities is that std::future only supports blocking waits on the result (there is no way to attach a continuation), and everything is built around that.
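The limitation can be seen in a small sketch that uses only standard C++11 facilities. The helper names `makeTask` and `isReady` are made up for this example.

```cpp
#include <chrono>
#include <future>

// Wraps a callable for later execution; the future is the only handle
// the caller gets for the eventual result.
inline std::packaged_task<int()> makeTask() {
    return std::packaged_task<int()>([] { return 6 * 7; });
}

// Non-blocking readiness check by polling with a zero timeout. There is
// no member to register a callback that runs once the value is ready.
inline bool isReady(const std::future<int> &future) {
    return future.wait_for(std::chrono::seconds(0)) == std::future_status::ready;
}
```

A caller obtains the future with `get_future()` and then has two options: poll with `isReady()`, or call `get()`, which blocks until the task has run. If the task is meant to run later on the same thread, calling `get()` first would never return, which is why a continuation mechanism is needed for event-loop-driven code.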

Qt:

- QtConcurrent: provides some functionality to run callables in threads and supports composition of such callables.

- Supports only threads.

KDE:

- KJob: Can be subclassed to create a task for later execution.

- No composition, no threading.

- Threadweaver:

- Supports only threads.

Resources

- Proposal for std::future with continuations: http://www.open-std.org/jtc1/sc22/wg21/docs/papers/2013/n3721.pdf