GSoC/2021/StatusReports/NghiaDuong: Difference between revisions

Quochungtran (talk | contribs) |

|||

| (30 intermediate revisions by 3 users not shown) | |||

| Line 54: | Line 54: | ||

'''TODO''' | '''TODO''' | ||

* Try out the Keras-Facenet implementation of the Facenet model. | * Try out the Keras-Facenet implementation of the Facenet model. | ||

As mentioned in the section above, the first goal of this project is to select a suitable model for the face classifier of digiKam's faces engine. Currently, we are using the Openface pre-trained model as the neural network of the faces engine. Cropped-face images are passed through the neural network to output 128-dimension face representation vectors called face embeddings. After exporting the face embedding data extracted from digiKam's faces engine, we stored it in a csv formatted file for easier experimentation and to eliminate the overhead recomputation. With R scripting language, we can easily manipulate the data and explore some classical algorithms like [https://en.wikipedia.org/wiki/Linear_discriminant_analysis LDA], [https://en.wikipedia.org/wiki/Quadratic_classifier QDA] and [https://en.wikipedia.org/wiki/Support-vector_machine SVM]. | As mentioned in the section above, the first goal of this project is to select a suitable model for the face classifier of digiKam's faces engine. Currently, we are using the Openface pre-trained model as the neural network of the faces engine. Cropped-face images are passed through the neural network to output 128-dimension face representation vectors called face embeddings. After exporting the face embedding data extracted from digiKam's faces engine, we stored it in a csv formatted file for easier experimentation and to eliminate the overhead recomputation. With R scripting language, we can easily manipulate the data and explore some classical algorithms like [https://en.wikipedia.org/wiki/Linear_discriminant_analysis LDA], [https://en.wikipedia.org/wiki/Quadratic_classifier QDA] and [https://en.wikipedia.org/wiki/Support-vector_machine SVM]. | ||

| Line 62: | Line 61: | ||

[[File:PCA.png|thumb|900px|'''Figure 1: Principal component analysis on face embedding data'''|center]] | [[File:PCA.png|thumb|900px|'''Figure 1: Principal component analysis on face embedding data'''|center]] | ||

Here we can see, with PCA, 90% of the data variance can be explained with only 27 principal components. Therefore, we can select these 27 components as new predictors of our data set. After the experiment with LDA, QDA, and SVM, I find that the QDA and Gaussian-SVM give the best results. Therefore, we can find that the non-linear decision boundaries responses best to this problem. However, the best result is still | Here we can see, with PCA, 90% of the data variance can be explained with only 27 principal components. Therefore, we can select these 27 components as new predictors of our data set. After the experiment with LDA, QDA, and SVM, I find that the QDA and Gaussian-SVM give the best results. Therefore, we can find that the non-linear decision boundaries responses best to this problem. However, the best result is still not good enough (86% accuracy for classification without outlier and 74% accuracy for classification with outliers). Therefore, we need to find another way to boost the performance of the faces engine. | ||

===== June 22 to July 11 (Week 3 - 4 - 5) - Boost the accuracy of facial recognition to 98.54% with Facenet and T-SNE ===== | |||

'''DONE''' | |||

* Export facenet neural network to OpenCV. | |||

* Reconstruct the preprocessing pipeline for facenet in OpenCV. | |||

* Reduce recognition error by reducing data dimension with UMAP and T-SNE. | |||

* Export Multicore T-SNE to compile with digiKam code base. | |||

'''TODO''' | |||

* Integrate the new implementation with the main pipeline. | |||

* Improve batch processing and background processing in faces engine. | |||

It seems like we run into a bottleneck of facial recognition in our implementation of the faces engine. As debugged during the previous Google Summer of Code, the main reason for the recognition error was due to the noise in the face embedding output by the [https://cmusatyalab.github.io/openface/ OpenFace] model. | |||

[[File:UMAP projection of faces embedding from Extended Yale B dataset.png|thumb|center|600px|'''Figure 2: UMAP projection of faces embedding output by the OpenFace model ''']] | |||

As a suggestion of Thanh Trung Dinh, we could use [https://github.com/davidsandberg/facenet David Sandberg's Facenet Tensorflow implementation] as a replacement in our faces engine. During [https://community.kde.org/GSoC/2019/StatusReports/ThanhTrungDinh his 2019 Google Summer of Code], he wanted to use [https://github.com/davidsandberg/facenet David Sandberg's Facenet], which was implemented very close to the [https://arxiv.org/abs/1503.03832 Facenet paper], for digiKam's faces engine. However, at that time, the translation of the Tensorflow pretrained model to an OpenCV readable format was not available. That is the reason why we are using [https://cmusatyalab.github.io/openface/ OpenFace] as an alternative. Lately, with the help of [https://github.com/TanFluent/facenet_opencv_dnn TanFluent], we are now able to use Facenet with OpenCV. Therefore, now may be a good time to use the Facenet model in the faces engine. | |||

After a few tests in python, with the original tensorflow facenet model, the result on the Extended Yale B dataset is outstanding. The Facenet model outputs a 512-dimensional face embedding for each face image. We extracted the face embeddings from the Extended Yale B dataset and saved them in a csv file. After that, same as in the experiment with OpenFace, we project these high dimensional data on 2D plan, and here is the result: | |||

[[File:Umap facenet.png|thumb|center|600px|'''Figure 3: UMAP projection of faces embedding output by the Facenet model ''']] | |||

Compare to the same projection on the face embeddings output by the OpenFace model shown in Figure 2, we can see that there is a huge difference in the result. On the projected data given in Figure 3, we can easily classify different classes with human eyes. When applying the algorithms mentioned in the previous section on this data, we achieve the highest accuracy of 98.7% with KNN and 88% with Gaussian SVM on classification with outliers. With this outstanding result, we decided to use Facenet to replace Openface as the neural network of the faces engine. Plus, the output of Facenet is very high dimensional (512D) and we received a good result with UMAP projection, therefore, we also decided to add data projection in our faces engine. | |||

After reviewing the pipeline of Facenet, we are able to reproduce the preprocessing pipeline with OpenCV in C++. The result given is the same as the result from the original python codebase. The next step is to implement the UMAP data projection in C++ in order to use it in the faces engine. However, there isn't any version of UMAP available in native C++, most of them are C++ with python or R dependencies, which is not very suitable and difficult to export. Because of the limited time of the project, we decided to use an alternative data projection algorithm that is similar to UMAP - [https://lvdmaaten.github.io/tsne/ T-SNE], which is available in C++. Similar to UMAP, T-SNE is a technique for dimensionality reduction that is particularly well suited for the visualization of high-dimensional datasets. | |||

[[File:T-SNE .png|thumb|center|600px|'''Figure 4: T-SNE projection of faces embedding output by the Facenet model''']] | |||

As we can see in the figure above, the projections using T-SNE are not well clustered like the ones using UMAP. However, this result is good enough for us to perform classification. After being tested, the classification on T-SNE projected data gave us an accuracy of 98.54%, which is very good. Therefore, we decided to import the [https://github.com/DmitryUlyanov/Multicore-TSNE C++ Multicore T-SNE code] to the digiKam faces engine codebase. With the multicore C++ version, we achieve a better processing speed. | |||

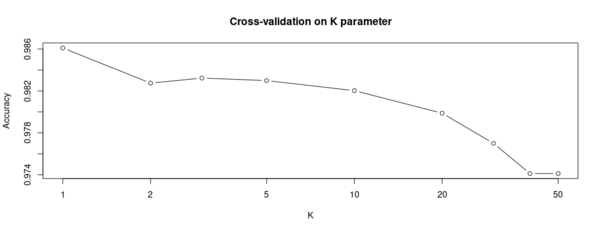

With this new improvement, we now can perform classification on 2D vector space. In this low dimensional space, K-Nearest Neighbors again become the best choice that supports both classification and outlier detection. In order to have the best result, we need to tune 2 hyper-parameters: the K number of neighbors taken into consideration when performing classification, and the threshold of searching range for the neighbors which is used for outlier elimination. It is best for user interest that we tune the K parameter using cross-validation and let them tune the threshold themself because the projected data might be variate base on user data. As we can find in figure 5 below, the best K for our algorithm is 1, which means we only need to consider the nearest point that is inside the threshold for label association. | |||

[[File:KNN.png|thumb|center|600px|'''Figure 5: Cross-validation on parameter K of KNN classification''']] | |||

=== Coding period : Phase tow (July 12 - August 23) === | |||

In the second phase of the project, my work mostly concentrated on integrated the experimented solutions mentioned above into the faces engine. The works include the integration of T-SNE into the C++ codebase of digiKam and the modification in the workflow of the face management pipelines in order to adapt to the new solution while maintaining the same level of performance. | |||

<br> | |||

===== July 12 - August 1 (Week 6 - 7) - Integration of multicore T-SNE and Facenet DNN into digiKam's faces engine ===== | |||

'''DONE''' | |||

* Exporting multicore T-SNE to perform on OpenCV Mat. | |||

* Evaluating the performance of T-SNE in C++. | |||

* Integration of new Facenet model into the Face Extractor of the faces engine. | |||

'''TODO''' | |||

* Adapt the workflow of the face management utility to support the new solution. | |||

After gaining a promising result after the experimentations with T-SNE data projection, I decided to adopt this solution for our faces engine. Luckily, the core of the T-SNE algorithm was implemented in C++. Furthermore, there is also a [https://github.com/DmitryUlyanov/Multicore-TSNE multi-core version] available. To integrate this solution to digiKam faces engine, I have adapted the code of T-SNE to be compatible with OpenCV Mat. The processing time of T-SNE is linear to the size of the data set. After a few tests, I recorded a processing time of 150s when operating on 12900 data points. This data projection process is repeated every time new data is added to the face embedding collection. Therefore, we need to find a way to tolerate the processing time. | |||

At first, I tried to use the sliding window approach to add new data to the projection. The idea behind this approach is to update the projection by composing new data with a small subset of existing data instead of the entire dataset. As new data is added, the projection of new data is calculated by the moving window of the most recent data. This approach trades the projection accuracy for processing speed. However, tests review that this approach does not reserve the global structure of data. The result of data projection by sliding window is impossible to be used for classification. | |||

With T-SNE we have no choice but to reprocess the entire data set every time we add new data. Therefore to we need to find a way to compensate for the processing time of data projection. | |||

===== August 2 - August 22 (Week 8 - 9 - 10) - Modify Face pipeline to promote asynchronous processing ===== | |||

'''DONE''' | |||

* Update face database to associate face matrices with their corresponding detected faces | |||

* Integrate face matrix extraction with face detection pipeline | |||

* Switch face processing workers into async mode | |||

With the current implementation of face pipelines, we recompute the face embeddings for every unrecognized face whenever we try to recognize them. With the addition of T-SNE data projection, the repeat of face embedding extraction wastes a lot of time. A simple way to avoid this repetition is to compute and save directly the face embedding right after the face is detected, for later usage. In order to make is this implementation utilizable, we need to be able to retrieve the corresponding face embedding to a detected face during the recognition process. | |||

To do so, I replace the "context" field, which contains the training context of the face embedding in the FaceMatrices table with the "tagID" field, which is a text representation of the detected face. The "tagID" field has to be unique. In digiKam, we save the detected face as a face tag. A tag is associated with an image and the limit boundary of the tag region. These are also the unique attributes to identify 2 different tags. By combining the image ID and the tag region, we have a unique "tagID" of detected faces. | |||

After the update of the Face database, we can integrate the face matrices extraction into the detection pipeline. In this pipeline, after the faces are detected from an image, the face tags are saved and passed to the Extraction Worker to be used for face embedding extraction. These face embeddings are saved with an unknown identity. By delegating face embedding extraction to the detection pipeline, we can avoid their recomputation in the recognition pipeline, and reserve the processing time for data projection. | |||

By adding face embedding extraction to the detection pipeline, we risk prolonging the processing there. Currently, the face pipelines are chained so that data can be passed sequentially from one worker to another. New data can only be processed when the old data is entirely passed through the pipeline. This synchronous processing mechanism waste unnecessary waiting time, and it blocks the main thread of the application. Therefore, I decided to switch the processing mechanism in face pipelines to asynchronous, which means data can be queued in a worker at one point and be processed at another time. This mechanism allows data can be added continuously without waiting for the pipeline to finished its processing. Each worker performs its tasks independently, which reduces the general processing time. With the help of the asynchronous pipeline, face detection and face embedding extraction can run independently without taking more time. | |||

Another point to be noticed is that the purpose of T-SNE data projection is to avoid the curse of dimensionality that causes inaccurate prediction when the dimension of data is large. However, this issue can be avoided in the case where the size of the data set is much larger than the data dimension. The augmentation in the data size also makes the T-SNE data projection process take longer to compute. Therefore we can reason that when the data set is large enough, we can omit the dimensional reduction process to save time. Currently, I chose this threshold to be 13000 data entries, which makes the data set 25 times larger than its dimension. Plus, it would take more than 3 minutes for T-SNE to process a data set that has more than 13000 entries. | |||

== Final result == | |||

As the final result, the current state of digiKam's faces engine can be summarized as follow. We have successfully integrated the Facenet DNN model into the face engines. This new update increases the accuracy of facial recognition to 98.54%, but it also increases 4 times the size of the face embedding to be stored. T-SNE data projection is integrated into the recognition process. It reduces the dimension of face embedding from 512 to 2, which improves accuracy and reduces processing time. In general, t-SNE takes 12ms/face and recognition takes less than 1ms/face. | |||

To summarize, this project increases the accuracy of the facial recognition module of the faces engine from 84% to 98%. About the recognition speed, there is a little bit of change in how the data size affecting the processing time. Before we only processed new faces to be recognized with the speed of 19ms/face, now we reprocess the entire data set with the speed of 13ms/face. Therefore, it would take a little bit longer than usual, even with very few new faces to recognize. To avoid taking too much time, currently, we limit the processing time for facial recognition to be at most 3 minutes. | |||

At the end of this project, the following bugs are supposed to be fixed: | |||

https://bugs.kde.org/show_bug.cgi?id=431797 | |||

https://bugs.kde.org/show_bug.cgi?id=432529 | |||

Latest revision as of 18:42, 10 June 2023

Digikam: Faces engine improvements

digiKam is a well-known open-source photo management application. Its faces engine is a tool that helps users recognize and label faces in photos. Following the advances in deep learning, the digiKam development team has been working on a deep-learning implementation of the faces engine since 2018. Over the past few years, thanks to the huge effort of digiKam developers and the great support from users, the faces engine has improved steadily.

Last year, during the 2020 Google Summer of Code, I had the chance to work on digiKam's faces engine as part of the DNN based Faces Recognition Improvements project. By the end of that project, we had finished the implementation of a machine-learning-based classification system for facial recognition. On top of that, we also remodeled digiKam's face database to specialize it for face embedding storage. As the final result, we achieved an accuracy of 84% on facial recognition, with a processing speed of about 19 ms/face.

However, after receiving reviews and bug reports from users, we found that a few problems remain in the faces engine. Therefore, the main goals of this project are to pick up the previous work and focus on improving the accuracy of digiKam's faces engine.

Mentors : Gilles Caulier, Maik Qualmann, Thanh Trung Dinh

Important Links

Project Proposal

Digikam Faces engine improvements

GitLab development branch

Contacts

Email: [email protected]

Github: MinhNghiaD

Invent KDE: minhnghiaduong

LinkedIn: https://www.linkedin.com/in/nghia-duong-2b5bbb15a/

Project Goals

The current goals of this project are to :

- Improve the accuracy of faces classifier with outlier detection

- Improve the speed of facial recognition and detection, improve batch processing

- Improve the face management pipeline organization

Project Report

Community Bonding period (May 17 - June 7)

The current face classifier of the faces engine supports two algorithms: K-Nearest Neighbors (KNN) and linear Support Vector Machine (SVM). With KNN, we take a certain number of the closest data points that lie inside a threshold area and use them to select the best label for the data. If no point satisfies the threshold, the classifier considers the data an outlier. This algorithm performs quite well on a large dataset. However, when the training data is limited, which is mostly the case for digiKam users, KNN does not perform well on high-dimensional data because of the curse of dimensionality. On the other hand, SVM suffers less from the curse of dimensionality, but it only creates boundaries for a known number of labels. When it faces the image of a new person, the classifier tries to assign it to a known label, which the user sees as a wrong label.

Therefore, during the community bonding period, my main goal was to prepare for the selection of the best classification algorithm for the face embedding distribution from the OpenFace CNN that we are currently using in the faces engine. The selection criteria are accuracy on multi-class classification and accuracy on outlier detection. This means that the selected algorithm not only has to perform well at classification between known labels, but also has to be able to guess whether a face is already "known" or not.

For this phase, machine learning analysis tools like R or Python are suitable for quick experimentation and statistical exploration. Therefore, we decided to perform model selection in R, using the data extracted from the Extended Yale B dataset, which contains over 12900 face images. For this process, we split the entire dataset into 3 subsets: a validation set that contains 50% of the data for the final performance evaluation of the selected model, a training set that contains 40% of the data, and a test set that contains 10% of the data for cross-validation. To support classification and novelty detection, we can use algorithms that output the posterior probability that a data point belongs to a group, for example discriminant analysis and logistic regression. Alternatively, we can use a dedicated algorithm such as One-class SVM to filter out the outliers, and then perform the classification with another algorithm.
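To make the thresholded KNN idea concrete, here is a minimal Python sketch. It is not the faces engine's C++ code: the function name `knn_with_threshold`, the Euclidean distance and the sample points are assumptions chosen for illustration only.

```python
import math
from collections import Counter

def knn_with_threshold(train, query, k=3, threshold=1.0):
    """Return the majority label among the k nearest training points that lie
    within `threshold` of `query`, or None when none is close enough."""
    # Distance from the query to every labelled training point, closest first.
    distances = sorted((math.dist(point, query), label) for point, label in train)
    # Keep only the k closest points that fall inside the threshold area.
    neighbours = [label for d, label in distances[:k] if d <= threshold]
    if not neighbours:
        return None  # nothing inside the threshold: treat the query as an outlier
    return Counter(neighbours).most_common(1)[0][0]

# Hypothetical 2D embeddings, used only to exercise the function.
train = [((0.0, 0.0), "alice"), ((0.1, 0.0), "alice"), ((5.0, 5.0), "bob")]
known = knn_with_threshold(train, (0.05, 0.1))    # close to the "alice" points
unknown = knn_with_threshold(train, (20.0, 20.0)) # far from every known face
```

Returning `None` for a query with no neighbour inside the threshold is what lets the same classifier handle both labelling and outlier detection.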
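The 50% / 40% / 10% split described above can be sketched as follows. The model selection itself was done in R; this Python version, the helper name `split_dataset`, the fixed seed and the round figure of 12900 samples are assumptions for illustration.

```python
import random

def split_dataset(samples, seed=0):
    """Shuffle the samples and split them into validation (50%),
    training (40%) and test (10%) subsets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n = len(shuffled)
    n_validation = n // 2
    n_training = (n * 4) // 10
    validation = shuffled[:n_validation]
    training = shuffled[n_validation:n_validation + n_training]
    test = shuffled[n_validation + n_training:]  # whatever remains, about 10%
    return validation, training, test

validation, training, test = split_dataset(range(12900))
```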

Coding period : Phase one (June 7 - July 12)

In this phase, my work mostly concentrated on testing and improving the accuracy and speed of the facial recognition module of the faces engine. The work includes finding suitable classification algorithms, trying out other deep learning models for facial recognition if needed, and implementing data processing to improve general performance.

June 7 to June 21 (Week 1 - 2) - Experimentation on face embedding data

DONE

- Exploring face embedding data.

- Evaluating the performance of different classification algorithms on this dataset.

- Implementing the suitable algorithm in C++.

- Evaluating the performance of the C++ implementation.

TODO

- Try out the Keras-Facenet implementation of the Facenet model.

As mentioned in the section above, the first goal of this project is to select a suitable model for the face classifier of digiKam's faces engine. Currently, we use the OpenFace pre-trained model as the neural network of the faces engine. Cropped face images are passed through the neural network, which outputs 128-dimensional face representation vectors called face embeddings. After exporting the face embedding data extracted from digiKam's faces engine, we stored it in a CSV file for easier experimentation and to avoid the overhead of recomputation. With the R scripting language, we can easily manipulate the data and explore some classical algorithms like LDA, QDA and SVM.

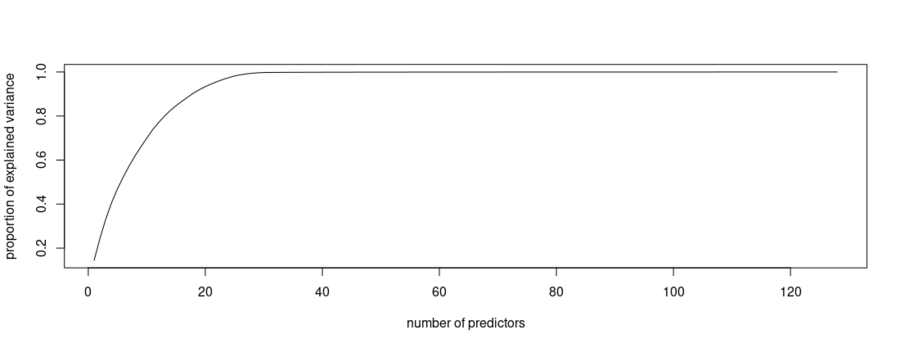

Firstly, we can see that the data is high-dimensional (128 predictors and 1 label to predict). Therefore, we need to reduce the dimensionality of the problem before applying machine learning algorithms. A simple dimensionality reduction method is Principal Component Analysis (PCA), which computes the eigenvectors (the directions of greatest variance in the data) and the eigenvalues (the amount of variance along each of those directions). Other, more complex dimensionality reduction techniques like UMAP and T-SNE will be explored in more detail in the next sections.

As we can see, with PCA, 90% of the data variance can be explained with only 27 principal components. Therefore, we can select these 27 components as the new predictors of our data set. After experimenting with LDA, QDA, and SVM, I found that QDA and Gaussian-SVM give the best results. This suggests that non-linear decision boundaries respond best to this problem. However, the best result is still not good enough (86% accuracy for classification without outliers and 74% accuracy for classification with outliers). Therefore, we need to find another way to boost the performance of the faces engine.
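As an illustration of how the number of principal components is chosen, the sketch below counts how many of the largest eigenvalues are needed to reach a target share of the total variance. The function name `components_for_variance` and the toy eigenvalues are assumptions; they are not the values from our data set.

```python
def components_for_variance(eigenvalues, target=0.90):
    """Return the smallest number of principal components whose eigenvalues
    sum to at least `target` of the total variance."""
    ordered = sorted(eigenvalues, reverse=True)  # largest variance first
    total = sum(ordered)
    cumulative = 0.0
    for count, value in enumerate(ordered, start=1):
        cumulative += value
        if cumulative / total >= target:
            return count
    return len(ordered)

# Toy eigenvalues: the cumulative shares are 60%, 80%, 95%, 98%, 100%.
toy_eigenvalues = [6.0, 2.0, 1.5, 0.3, 0.2]
needed = components_for_variance(toy_eigenvalues)
```

Applied to the eigenvalues of the 128-dimensional embedding data, the same rule is what yields the 27 components mentioned above.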

June 22 to July 11 (Week 3 - 4 - 5) - Boost the accuracy of facial recognition to 98.54% with Facenet and T-SNE

DONE

- Export facenet neural network to OpenCV.

- Reconstruct the preprocessing pipeline for facenet in OpenCV.

- Reduce recognition error by reducing data dimension with UMAP and T-SNE.

- Export Multicore T-SNE to compile with digiKam code base.

TODO

- Integrate the new implementation with the main pipeline.

- Improve batch processing and background processing in faces engine.

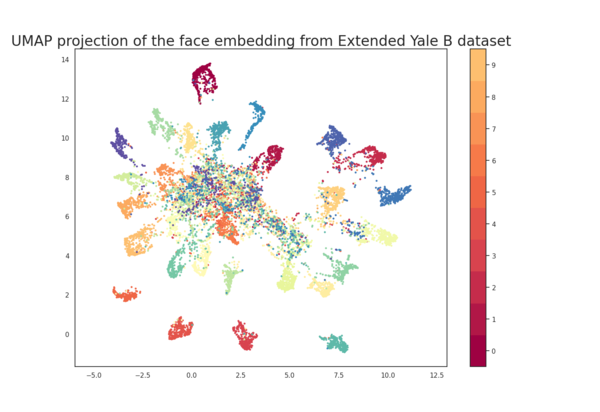

It seems that we have run into a bottleneck of facial recognition in our implementation of the faces engine. As we found while debugging during the previous Google Summer of Code, the main cause of the recognition errors was the noise in the face embeddings output by the OpenFace model.

At the suggestion of Thanh Trung Dinh, we could use David Sandberg's Facenet Tensorflow implementation as a replacement in our faces engine. During his 2019 Google Summer of Code, he wanted to use David Sandberg's Facenet, which was implemented very close to the Facenet paper, for digiKam's faces engine. However, at that time, no translation of the Tensorflow pretrained model to an OpenCV-readable format was available. That is the reason why we have been using OpenFace as an alternative. Lately, with the help of TanFluent, we are able to use Facenet with OpenCV. Therefore, now may be a good time to use the Facenet model in the faces engine.

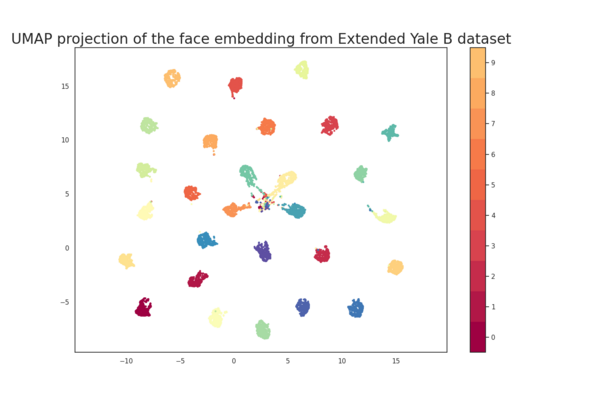

After a few tests in Python with the original Tensorflow Facenet model, the result on the Extended Yale B dataset is outstanding. The Facenet model outputs a 512-dimensional face embedding for each face image. We extracted the face embeddings from the Extended Yale B dataset and saved them in a CSV file. After that, as in the experiment with OpenFace, we projected this high-dimensional data onto a 2D plane, and here is the result:

Compared to the same projection of the face embeddings output by the OpenFace model shown in Figure 2 (the UMAP projection of the OpenFace embeddings), we can see that there is a huge difference in the result. On the projected data given in Figure 3 (the UMAP projection of the Facenet embeddings), we can easily tell the different classes apart by eye. When applying the algorithms mentioned in the previous section to this data, we achieve the highest accuracy of 98.7% with KNN and 88% with Gaussian SVM on classification with outliers. With this outstanding result, we decided to use Facenet to replace OpenFace as the neural network of the faces engine. Moreover, since the output of Facenet is very high dimensional (512D) and we obtained a good result with the UMAP projection, we also decided to add data projection to our faces engine.

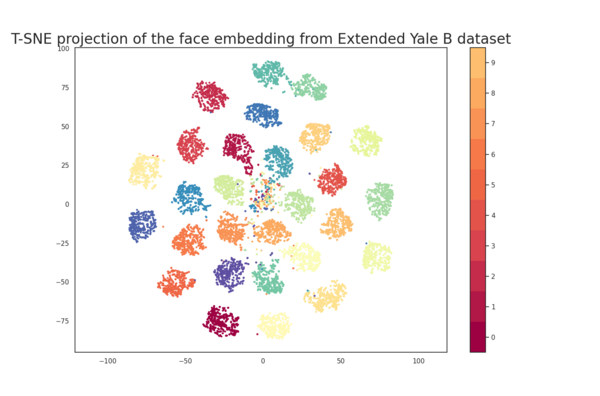

After reviewing the pipeline of Facenet, we were able to reproduce the preprocessing pipeline with OpenCV in C++. The result is the same as the result from the original Python codebase. The next step is to implement the UMAP data projection in C++ in order to use it in the faces engine. However, there isn't any version of UMAP available in native C++. Most of them are C++ with Python or R dependencies, which is not very suitable and is difficult to export. Because of the limited time of the project, we decided to use an alternative data projection algorithm that is similar to UMAP and available in C++: T-SNE. Similar to UMAP, T-SNE is a technique for dimensionality reduction that is particularly well suited for the visualization of high-dimensional datasets.

As we can see in the figure above, the projections using T-SNE are not as well clustered as the ones using UMAP. However, this result is good enough for us to perform classification. In our tests, the classification on T-SNE projected data gave us an accuracy of 98.54%, which is very good. Therefore, we decided to import the C++ Multicore T-SNE code into the digiKam faces engine codebase. With the multicore C++ version, we achieve a better processing speed.

With this new improvement, we can now perform classification in a 2D vector space. In this low-dimensional space, K-Nearest Neighbors again becomes the best choice, since it supports both classification and outlier detection. In order to get the best result, we need to tune 2 hyper-parameters: K, the number of neighbors taken into consideration when performing classification, and the threshold of the search range for the neighbors, which is used for outlier elimination. It is in the users' best interest that we tune the K parameter using cross-validation and let them tune the threshold themselves, because the projected data may vary depending on the user's data. As we can see in figure 5 (cross-validation on parameter K of the KNN classification), the best K for our algorithm is 1, which means we only need to consider the nearest point that is inside the threshold for label association.
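The tuning of K can be sketched with leave-one-out cross-validation on 2D points. This is only an illustration in Python: the helper names, the toy clusters and the tie-break towards the smallest K are assumptions, and the outlier threshold is left out to keep the example short.

```python
import math
from collections import Counter

def knn_predict(train, query, k):
    """Majority label among the k training points closest to `query`."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def leave_one_out_accuracy(data, k):
    """Classify each point using all the other points and return the hit rate."""
    correct = 0
    for i, (point, label) in enumerate(data):
        rest = data[:i] + data[i + 1:]
        if knn_predict(rest, point, k) == label:
            correct += 1
    return correct / len(data)

def best_k(data, candidates):
    """Pick the candidate K with the highest accuracy, preferring the smallest K on ties."""
    return max(candidates, key=lambda k: (leave_one_out_accuracy(data, k), -k))

# Two hypothetical, well separated clusters of projected embeddings.
data = [((0, 0), "a"), ((0, 1), "a"), ((1, 0), "a"),
        ((5, 5), "b"), ((5, 6), "b"), ((6, 5), "b")]
chosen = best_k(data, [1, 3, 5])
```

On this toy data, K = 5 forces each vote to include more points from the other cluster than from the point's own cluster, which illustrates why a small K can win when each person has only a few samples.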

Coding period : Phase two (July 12 - August 23)

In the second phase of the project, my work mostly concentrated on integrating the solutions tested above into the faces engine. The work includes the integration of T-SNE into the C++ codebase of digiKam and the modification of the workflow of the face management pipelines in order to adapt to the new solution while maintaining the same level of performance.

July 12 - August 1 (Week 6 - 7) - Integration of multicore T-SNE and Facenet DNN into digiKam's faces engine

DONE

- Exporting multicore T-SNE to perform on OpenCV Mat.

- Evaluating the performance of T-SNE in C++.

- Integration of new Facenet model into the Face Extractor of the faces engine.

TODO

- Adapt the workflow of the face management utility to support the new solution.

After obtaining promising results from the experiments with T-SNE data projection, I decided to adopt this solution for our faces engine. Luckily, the core of the T-SNE algorithm was implemented in C++. Furthermore, there is also a multi-core version available. To integrate this solution into digiKam's faces engine, I adapted the T-SNE code to be compatible with OpenCV Mat. The processing time of T-SNE is linear in the size of the data set. After a few tests, I recorded a processing time of 150s when operating on 12900 data points. This data projection process is repeated every time new data is added to the face embedding collection. Therefore, we need to find a way to cope with the processing time.

At first, I tried to use a sliding window approach to add new data to the projection. The idea behind this approach is to update the projection by combining the new data with a small subset of the existing data instead of the entire dataset. As new data is added, its projection is calculated together with a moving window of the most recent data. This approach trades projection accuracy for processing speed. However, tests reveal that this approach does not preserve the global structure of the data. The result of data projection by sliding window cannot be used for classification.

With T-SNE we have no choice but to reprocess the entire data set every time we add new data. Therefore, we need to find a way to compensate for the processing time of data projection.

August 2 - August 22 (Week 8 - 9 - 10) - Modify Face pipeline to promote asynchronous processing

DONE

- Update face database to associate face matrices with their corresponding detected faces

- Integrate face matrix extraction with face detection pipeline

- Switch face processing workers into async mode

With the current implementation of the face pipelines, we recompute the face embeddings for every unrecognized face whenever we try to recognize them. With the addition of T-SNE data projection, repeating the face embedding extraction wastes a lot of time. A simple way to avoid this repetition is to compute the face embedding and save it right after the face is detected, for later use. In order to make this implementation usable, we need to be able to retrieve the face embedding that corresponds to a detected face during the recognition process.

To do so, I replaced the "context" field of the FaceMatrices table, which contained the training context of the face embedding, with the "tagID" field, which is a text representation of the detected face. The "tagID" field has to be unique. In digiKam, we save the detected face as a face tag. A tag is associated with an image and with the bounding region of the tag. These are also the attributes that uniquely distinguish 2 different tags. By combining the image ID and the tag region, we obtain a unique "tagID" for each detected face.

After the update of the face database, we can integrate the face matrix extraction into the detection pipeline. In this pipeline, after the faces are detected in an image, the face tags are saved and passed to the Extraction Worker to be used for face embedding extraction. These face embeddings are saved with an unknown identity. By delegating face embedding extraction to the detection pipeline, we avoid recomputing the embeddings in the recognition pipeline and save the processing time for data projection.

By adding face embedding extraction to the detection pipeline, we risk prolonging the processing there. Currently, the face pipelines are chained so that data is passed sequentially from one worker to another. New data can only be processed when the old data has passed entirely through the pipeline. This synchronous processing mechanism wastes time on unnecessary waiting, and it blocks the main thread of the application. Therefore, I decided to switch the processing mechanism in the face pipelines to asynchronous, which means data can be queued in a worker at one point and processed at another time. This mechanism allows data to be added continuously without waiting for the pipeline to finish its processing. Each worker performs its tasks independently, which reduces the overall processing time. With the help of the asynchronous pipeline, face detection and face embedding extraction can run independently without taking more time.
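The idea of deriving a unique "tagID" from the image ID and the tag region can be sketched as below. The exact text format stored in the FaceMatrices table is not described here, so the `make_tag_id` helper, its `imageId:x,y,width,height` layout and the in-memory dictionary are purely hypothetical.

```python
def make_tag_id(image_id, region):
    """Build a text key from an image ID and a face region (x, y, width, height).
    The layout of the string is an assumption made for this example."""
    x, y, width, height = region
    return f"{image_id}:{x},{y},{width},{height}"

# Embeddings saved at detection time, keyed by tag ID (a stand-in for the database).
embeddings = {}
embeddings[make_tag_id(42, (10, 20, 64, 64))] = [0.12, -0.53]   # placeholder vector
embeddings[make_tag_id(42, (200, 40, 64, 64))] = [0.80, 0.07]   # second face, same image

# At recognition time, the stored embedding is looked up instead of recomputed.
stored = embeddings[make_tag_id(42, (10, 20, 64, 64))]
```

Two faces in the same image get different keys because their regions differ, and the same region in two different images gets different keys because the image IDs differ.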
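The queue-based, asynchronous chaining of workers can be illustrated with Python's standard `queue` and `threading` modules. The real pipeline is written in C++ inside digiKam; the worker functions below are placeholders that only build strings, and all names are assumptions.

```python
import queue
import threading

def start_worker(task, inbox, outbox):
    """Run `task` on every item taken from `inbox` and push the result to `outbox`.
    A None item is a sentinel: it is forwarded and the worker stops."""
    def run():
        while True:
            item = inbox.get()
            if item is None:
                outbox.put(None)
                break
            outbox.put(task(item))
    thread = threading.Thread(target=run)
    thread.start()
    return thread

def run_pipeline(images):
    detect_queue, extract_queue, results = queue.Queue(), queue.Queue(), queue.Queue()
    workers = [
        # Placeholder "detection" and "extraction" steps.
        start_worker(lambda image: f"face-in-{image}", detect_queue, extract_queue),
        start_worker(lambda face: f"embedding-of-{face}", extract_queue, results),
    ]
    for image in images:
        detect_queue.put(image)   # the caller queues work and never waits per item
    detect_queue.put(None)
    for worker in workers:
        worker.join()
    collected = []
    while (item := results.get()) is not None:
        collected.append(item)
    return collected

output = run_pipeline(["a.jpg", "b.jpg"])
```

Because each stage only reads from its own queue, the detection worker can start on the next image while the extraction worker is still busy with the previous face.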

Another point to note is that the purpose of T-SNE data projection is to avoid the curse of dimensionality, which causes inaccurate prediction when the dimension of the data is large. However, this issue can also be avoided when the size of the data set is much larger than the data dimension. The growth in data size also makes the T-SNE data projection take longer to compute. Therefore, we can reason that when the data set is large enough, we can omit the dimensionality reduction to save time. Currently, I have set this threshold to 13000 data entries, which makes the data set about 25 times larger than its dimension. In addition, it would take more than 3 minutes for T-SNE to process a data set that has more than 13000 entries.
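The rule above amounts to a simple size check before projecting. In the sketch below, the constant and function names are hypothetical, and treating a data set of exactly 13000 entries as still projected is an assumption.

```python
EMBEDDING_DIMENSION = 512   # size of a Facenet face embedding
PROJECTION_LIMIT = 13000    # data set size above which T-SNE is skipped

def should_project(entry_count, limit=PROJECTION_LIMIT):
    """Project with T-SNE only while the data set is at most `limit` entries."""
    return entry_count <= limit

# Ratio between the threshold and the embedding dimension: 13000 / 512 is about 25.4.
ratio = PROJECTION_LIMIT / EMBEDDING_DIMENSION
```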

Final result

As the final result, the current state of digiKam's faces engine can be summarized as follows. We have successfully integrated the Facenet DNN model into the faces engine. This new update increases the accuracy of facial recognition to 98.54%, but it also makes the face embeddings to be stored 4 times larger. T-SNE data projection is integrated into the recognition process. It reduces the dimension of the face embeddings from 512 to 2, which improves accuracy and reduces processing time. In general, T-SNE takes 12ms/face and recognition takes less than 1ms/face.

To summarize, this project increases the accuracy of the facial recognition module of the faces engine from 84% to 98%. As for the recognition speed, there is a small change in how the data size affects the processing time. Before, we only processed the new faces to be recognized, at a speed of 19ms/face. Now we reprocess the entire data set, at a speed of 13ms/face. Therefore, recognition takes a little longer than before, even with very few new faces to recognize. To avoid taking too much time, we currently limit the processing time for facial recognition to at most 3 minutes.

At the end of this project, the following bugs are expected to be fixed:
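The change in how data size affects processing time can be made concrete with a small calculation based on the per-face figures above. The function names and the example sizes (10 new faces in a collection of 5000) are assumptions chosen for illustration.

```python
OLD_MS_PER_FACE = 19   # previous engine: only the new faces are processed
NEW_MS_PER_FACE = 13   # new engine: the whole data set is reprocessed

def old_cost_ms(new_faces):
    """Recognition time of the previous engine, in milliseconds."""
    return new_faces * OLD_MS_PER_FACE

def new_cost_ms(total_faces):
    """Recognition time of the new engine, in milliseconds."""
    return total_faces * NEW_MS_PER_FACE

before = old_cost_ms(10)     # 10 new faces only
after = new_cost_ms(5000)    # the same 10 faces, inside a collection of 5000
```

With these example sizes, the previous engine needs 190 ms while the new one needs 65 s, which is why the cost now depends on the size of the collection rather than on the number of new faces.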

https://bugs.kde.org/show_bug.cgi?id=431797

https://bugs.kde.org/show_bug.cgi?id=432529